Hi All,

We are just getting started moving all our machines to Proxmox VE.

We are using VMware Converter on our machines to grab a VMDK disk.

When I was testing out Proxmox and learning how to use it, I had the storage set up as LVM-thin, since I didn't have enough disks on hand for the RAIDZ2 we were going to use in production. With the LVM-thin setup, I was able to create a virtual machine with parameters matching the machine I was converting, then run a qemu convert command on my VMDK and replace the disk that was created during the VM setup process. I could then boot it and be good to go. I could also copy the virtual disk off the server as a backup or to move it to a test box.
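
For reference, the conversion step described above looks roughly like this. It is a minimal Python sketch that only builds and prints the `qemu-img convert` command; the paths are hypothetical, and passing `dry_run=False` to `run` would actually execute it on the Proxmox host.

```python
import shlex
import subprocess


def vmdk_to_raw_cmd(vmdk_path: str, raw_path: str) -> list[str]:
    """Build a qemu-img command that converts a VMDK image to a raw image.

    -p prints progress, -f names the input format, -O the output format.
    """
    return ["qemu-img", "convert", "-p", "-f", "vmdk", "-O", "raw", vmdk_path, raw_path]


def run(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command as a shell line; execute it only when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical paths: point these at the VMware Converter output.
    run(vmdk_to_raw_cmd("/mnt/images/server01.vmdk", "/mnt/images/server01.raw"))
```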

Clearly, this process is much different on ZFS. Can someone explain like I'm five what the process is to achieve the same goal as I was doing before with LVM-thin? I have a bunch of machines for which I made VMDK disks and converted them to raw, and I need to import them as VMs into my ZFS-based Proxmox. I would also like to learn how to pull a copy of a virtual disk manually if I ever need to.
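
On ZFS-backed storage the usual route is to let Proxmox create the zvol itself with `qm importdisk` instead of replacing a disk by hand. The Python sketch below only prints the commands. It assumes the VM already exists without a disk of its own, that the Proxmox storage ID is `datapool` (the name under Datacenter > Storage, which may differ from the pool name), and that the imported volume comes out as `vm-106-disk-0`; `importdisk` reports the actual name it created. The `scsi0` slot is also an assumption: a converted Windows guest without VirtIO drivers may need `sata0` instead.

```python
import shlex


def import_disk_cmds(vmid: int, raw_path: str, storage: str, disk_name: str,
                     bus: str = "scsi0") -> list[list[str]]:
    """Return the qm commands that import a raw image and make it the boot disk.

    storage: Proxmox storage ID (assumed here to be "datapool")
    disk_name: volume name reported by `qm importdisk`, e.g. "vm-106-disk-0"
    bus: controller slot to attach to (assumed "scsi0")
    """
    vm = str(vmid)
    return [
        # Copy the image into a new zvol; it appears on the VM as an unused disk.
        ["qm", "importdisk", vm, raw_path, storage],
        # Attach that volume to the chosen controller slot.
        ["qm", "set", vm, f"--{bus}", f"{storage}:{disk_name}"],
        # Boot from the newly attached disk.
        ["qm", "set", vm, "--boot", f"order={bus}"],
    ]


if __name__ == "__main__":
    # VM 106 and "datapool" follow the post; the image path is hypothetical.
    for cmd in import_disk_cmds(106, "/mnt/images/server01.raw", "datapool", "vm-106-disk-0"):
        print(shlex.join(cmd))
```

`qm importdisk` also accepts the VMDK directly and converts it during the import, so the separate raw conversion may not be needed.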

I know there are backup and restore options that create VMA files, which I can decompress and convert. That only helps me in normal operation, though, and I would like to know how to do this manually. It also doesn't help me get the VMs onto the machine in the first place.
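
Pulling a copy manually should not need any ZFS-specific commands: each VM disk on ZFS is a zvol, which Linux exposes as an ordinary block device under `/dev/zvol/<pool>/<volume>`, so `qemu-img` can read it like any other raw image. A minimal sketch, assuming the VM is shut down so the copy is consistent, with a hypothetical destination path and an arbitrary choice of qcow2 as the output format:

```python
import shlex
import subprocess


def zvol_device(pool: str, volume: str) -> str:
    """Path of the block device Linux creates for a ZFS volume."""
    return f"/dev/zvol/{pool}/{volume}"


def export_zvol_cmd(pool: str, volume: str, dest: str, fmt: str = "qcow2") -> list[str]:
    """Build a qemu-img command that copies a zvol into an image file.

    The zvol holds raw data (-f raw); "raw" or "vmdk" also work as output formats.
    """
    return ["qemu-img", "convert", "-p", "-f", "raw", "-O", fmt,
            zvol_device(pool, volume), dest]


def run(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Pool and volume names follow the post; the backup path is hypothetical.
    run(export_zvol_cmd("datapool", "vm-106-disk-0", "/mnt/backup/vm-106-disk-0.qcow2"))
```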

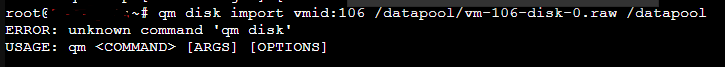

I understand that I need to use the snapshot, send, and recv commands for this, but my syntax must be off or something, because I cannot seem to make it work.
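
As far as I know, `zfs send`/`zfs recv` are only needed to copy a zvol to another ZFS pool (or into a stream file that only `zfs recv` can read back); importing raw images works without them. The detail that usually trips people up is that `zfs send` takes a snapshot (`dataset@name`), not the dataset itself. A minimal Python sketch, where the snapshot name `export` and the output path are hypothetical:

```python
import shlex
import subprocess


def snapshot_cmd(dataset: str, snap: str) -> list[str]:
    """zfs snapshot pool/volume@snap: freezes the volume's current state."""
    return ["zfs", "snapshot", f"{dataset}@{snap}"]


def send_cmd(dataset: str, snap: str) -> list[str]:
    """zfs send writes a snapshot as a stream on stdout."""
    return ["zfs", "send", f"{dataset}@{snap}"]


def recv_cmd(target: str) -> list[str]:
    """zfs recv reads a stream from stdin and creates `target` from it."""
    return ["zfs", "recv", target]


def send_to_file(dataset: str, snap: str, out_path: str, dry_run: bool = True) -> None:
    """Snapshot a volume and save its send stream to a file."""
    print(shlex.join(snapshot_cmd(dataset, snap)))
    print(shlex.join(send_cmd(dataset, snap)) + " > " + shlex.quote(out_path))
    if dry_run:
        return
    subprocess.run(snapshot_cmd(dataset, snap), check=True)
    with open(out_path, "wb") as out:
        # Redirect the stream into the file, like `> out_path` in a shell.
        subprocess.run(send_cmd(dataset, snap), stdout=out, check=True)


if __name__ == "__main__":
    send_to_file("datapool/vm-106-disk-0", "export", "/mnt/backup/vm-106-disk-0.zfs")
```

Restoring is the reverse (`zfs recv datapool/vm-106-disk-0 < /mnt/backup/vm-106-disk-0.zfs`), and for a full stream like this one the target volume normally must not already exist.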

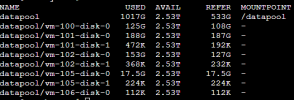

The current machine I am trying to import shows up as vm-106-disk-0, and my ZFS pool is named datapool. I also have both /dev/datapool and /dev/zvol/datapool; is this normal? They seem to contain the same contents. Finally, some of my VMs have multiple disks. I assume the procedure is the same and I just need to copy each disk out separately.
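
On the two directories: as far as I know, both are symlinks created by ZFS's udev rules that point at the same `/dev/zdN` block devices, so identical contents are expected (`ls -l /dev/zvol/datapool/` shows the targets). For VMs with several disks, each disk is its own zvol (`vm-106-disk-0`, `vm-106-disk-1`, and so on) and is copied separately. A minimal sketch that picks one VM's disks out of `zfs list -H -o name -t volume` output; the `vm-<id>-disk-<n>` naming pattern is assumed from the volume name in the post:

```python
import re
import subprocess


def vm_zvols(zfs_list_output: str, pool: str, vmid: int) -> list[str]:
    """Return the disk zvols of one VM, e.g. datapool/vm-106-disk-0, -disk-1, ...

    Expects one dataset name per line, as printed by
    `zfs list -H -o name -t volume`.
    """
    pattern = re.compile(rf"{re.escape(pool)}/vm-{vmid}-disk-\d+")
    names = (line.strip() for line in zfs_list_output.splitlines())
    return [name for name in names if pattern.fullmatch(name)]


def list_zvols() -> str:
    """Ask ZFS for every volume on the host (run as root on the Proxmox node)."""
    result = subprocess.run(["zfs", "list", "-H", "-o", "name", "-t", "volume"],
                            capture_output=True, text=True, check=True)
    return result.stdout
```

On the node, `vm_zvols(list_zvols(), "datapool", 106)` lists VM 106's disks; each name can then be exported on its own through `/dev/zvol/<name>`.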

I'm sorry for my noobishness, and I very much appreciate the help.