Hello,

I am currently trying to set up a Windows 11 VM, which requires TPM support. I have a Proxmox 7.4-3 cluster that uses a GlusterFS volume to store all VM data and images, so that any of our nodes can take over the VMs on failover. I tried setting this up, but the TPM setup fails with:

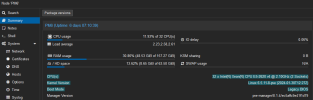

This is my setup for the VM:

I am using a glusterfs replicate setup with this configuration:

I am not sure how to debug this problem properly. Can someone please help me to figure out how to proceed here in order to find out what is going wrong?

I am currently trying to setup a WIndows 11 VM which requires tmp support. I have a proxmox 7.4-3 cluster which uses a gluster filesystem to store all vm data and images so that each of our nodes can take over the vms as failover. I tried setting this up, but the tmp setup fails with:

```
/bin/swtpm exit with status 256:
TASK ERROR: start failed: command 'swtpm_setup --tpmstate file://gluster://vm-host-01.fes/gv_vmdata/images/107/vm-107-disk-0.raw --createek --create-ek-cert --create-platform-cert --lock-nvram --config /etc/swtpm_setup.conf --runas 0 --not-overwrite --tpm2 --ecc' failed: exit code 1
```

This is my setup for the VM:

I am using a GlusterFS replicated volume with this configuration:

```bash
# gluster volume info

Volume Name: gv_vmdata
Type: Replicate
Volume ID: 7085d24d-c7cd-4fc0-9013-10056de09057
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x 3 = 3
Transport-type: tcp
Bricks:
Brick1: 192.168.40.11:/data/proxmox/vm_data
Brick2: vm-host-01.fes:/data/proxmox/vm_data
Brick3: vm-data-01.fes:/data/proxmox/vm_data
Options Reconfigured:
performance.client-io-threads: off
nfs.disable: on
transport.address-family: inet
storage.fips-mode-rchecksum: on
cluster.granular-entry-heal: on
cluster.self-heal-daemon: enable
```

I am not sure how to debug this problem properly. Can someone please help me figure out how to proceed so I can find out what is going wrong?