I understand that the setup on the host side is not optimal.

My goal is to fix the Windows driver side first. I can move the VM onto another disk later.

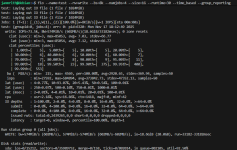

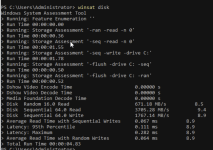

But the speed is far from what it should be, even with ZFS slowing us down.

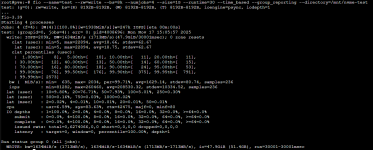

    root@pve:~# smartctl -a /dev/nvme0n1
    smartctl 7.4 2024-10-15 r5620 [x86_64-linux-6.14.8-2-pve] (local build)
    Copyright (C) 2002-23, Bruce Allen, Christian Franke, www.smartmontools.org

    === START OF INFORMATION SECTION ===
    Model Number: MK000800KWWFE
    Serial Number: EJ03N4235I0504J2N
    Firmware Version: HPK1
    PCI Vendor ID: 0x1c5c
    PCI Vendor Subsystem ID: 0x1590
    IEEE OUI Identifier: 0xace42e
    Total NVM Capacity: 800,166,076,416 [800 GB]
    Unallocated NVM Capacity: 0
    Controller ID: 0
    NVMe Version: 1.3
    Number of Namespaces: 16
    Namespace 1 Size/Capacity: 800,166,076,416 [800 GB]
    Namespace 1 Formatted LBA Size: 512
    Namespace 1 IEEE EUI-64: ace42e 000560953b
    Local Time is: Mon Nov 17 15:40:28 2025 CET
    Firmware Updates (0x14): 2 Slots, no Reset required
    Optional Admin Commands (0x005e): Format Frmw_DL NS_Mngmt Self_Test MI_Snd/Rec
    Optional NVM Commands (0x005f): Comp Wr_Unc DS_Mngmt Wr_Zero Sav/Sel_Feat Timestmp
    Log Page Attributes (0x0e): Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg
    Maximum Data Transfer Size: 64 Pages
    Warning Comp. Temp. Threshold: 65 Celsius
    Critical Comp. Temp. Threshold: 68 Celsius
    Namespace 1 Features (0x04): Dea/Unw_Error

    Supported Power States
    St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
     0 +    11.00W    0.00W       -    0  0  0  0    30000   30000
     1 +    11.00W    0.00W       -    1  1  1  1    30000   30000
     2 +     9.00W    0.00W       -    2  2  2  2    30000   30000
     3 +     9.00W    0.00W       -    2  2  2  2    30000   30000
     4 -     6.00W       -        -    3  3  3  3    30000   30000

    Supported LBA Sizes (NSID 0x1)
    Id Fmt  Data  Metadt  Rel_Perf
     0 -     512       0         2
     1 -    4096       0         0

    === START OF SMART DATA SECTION ===
    SMART overall-health self-assessment test result: PASSED

    SMART/Health Information (NVMe Log 0x02)
    Critical Warning: 0x00
    Temperature: 35 Celsius
    Available Spare: 100%
    Available Spare Threshold: 10%
    Percentage Used: 0%
    Data Units Read: 1,056,885 [541 GB]
    Data Units Written: 1,966,346 [1.00 TB]
    Host Read Commands: 10,896,589
    Host Write Commands: 22,115,094
    Controller Busy Time: 43
    Power Cycles: 1
    Power On Hours: 147
    Unsafe Shutdowns: 0
    Media and Data Integrity Errors: 0
    Error Information Log Entries: 4
    Warning Comp. Temperature Time: 0
    Critical Comp. Temperature Time: 0
    Temperature Sensor 2: 43 Celsius
    Temperature Sensor 4: 59 Celsius

    Error Information (NVMe Log 0x01, 16 of 256 entries)
    Num   ErrCount  SQId   CmdId  Status  PELoc  LBA  NSID  VS  Message
      0          4     0  0xf010  0x4004  0x004    0     1   -  Invalid Field in Command
      1          3     0  0xa016  0x4004  0x004    0     1   -  Invalid Field in Command
      2          2     0  0xd011  0x4004  0x004    0     1   -  Invalid Field in Command
      3          1     0  0x001c  0x4004  0x028    0     0   -  Invalid Field in Command

    Read Self-test Log failed: Invalid Field in Command (0x2002)
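
One detail in the output above is easy to miss: namespace 1 is formatted with 512-byte LBAs, while the "Supported LBA Sizes" table lists the 4096-byte format with a lower `Rel_Perf` value (in NVMe, lower means better relative performance). Whether this has anything to do with the slow speed inside the Windows guest is not established here. The following is only a minimal sketch for checking it mechanically; `check_lba_format` is a hypothetical helper name, and it assumes the `smartctl -a` text layout shown above.

```shell
# Hypothetical helper (not part of smartmontools): reads `smartctl -a` text
# on stdin and reports whether a supported LBA format has a better (lower)
# Rel_Perf value than the currently formatted LBA size.
check_lba_format() {
    awk '
        # "Namespace 1 Formatted LBA Size: 512" -> remember the current size
        /Formatted LBA Size:/ { current = $NF }

        # Start of the "Supported LBA Sizes" table
        $1 == "Supported" && $2 == "LBA" { in_table = 1; next }

        # A new "=== ... ===" section ends the table
        $1 == "===" { in_table = 0 }

        # Table rows have five fields: Id Fmt Data Metadt Rel_Perf
        in_table && NF == 5 && $1 ~ /^[0-9]+$/ {
            perf[$3] = $5
            if (best == "" || $5 + 0 < perf[best] + 0) best = $3
        }

        END {
            if (current == "" || best == "") { print "unknown"; exit }
            if (perf[best] + 0 < perf[current] + 0)
                printf "current=%s best=%s\n", current, best
            else
                printf "current=%s ok\n", current
        }
    '
}
```

Usage would be `smartctl -a /dev/nvme0n1 | check_lba_format`. For the output pasted above it prints `current=512 best=4096`. It only reads text and changes nothing on the drive.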