ballooning/ksm/cpu thin provisioning makes sense when you want to overprovision your resources. the more resources you have, the more sense it makes.

e.g. if you want to save memory on an 8GB system, it is most likely not worth the trouble, and it is smarter to just get another memory stick.

but if you are an ISP with hundreds of servers with 1TB+ of memory each, you can literally save millions by doing a little overprovisioning instead of buying more hardware.
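a back-of-the-envelope sketch of where that money comes from. the numbers (300 hosts, 120% memory overprovisioning) are made up for illustration, not taken from a real environment: overprovisioning by x% lets you hand out the memory of x% more hosts that you never have to buy.

```python
def hosts_avoided(hosts: int, overprov_pct: int) -> float:
    """hypothetical helper: extra hosts you would have to buy to hand out
    the same amount of vm memory without overprovisioning."""
    return hosts * (overprov_pct - 100) / 100


# made-up example: 300 hosts, memory overprovisioned to 120%
print(hosts_avoided(300, 120))  # 60.0
```

multiply that by whatever one 1TB host costs you and you see why it adds up quickly at scale and not at all on a single 8GB box.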

you know how it is: IT asks the devs how many resources the new app will need (they overestimate a little), the devs ask product management how many millions of users to expect (they are always way too optimistic), so in these bigger environments you can usually get away with overprovisioning just fine.

for small systems i think containers are a better fit than vms if you want to make use of every last bit of memory.

also, the host can not "take" memory from the vm. it asks the vm to give some memory back, and the vm decides whether it hands memory out or not.

this is usually not a single interaction: most likely they go through many iterations and only a few kb/mb are returned each round, which is why it is slow.
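a toy model of that back and forth, to show why reclaiming a few GB takes many rounds. the 4MB chunk per round and the "spare memory" the guest is willing to give are assumptions for illustration; the real balloon driver's batch size and policy depend on the guest driver and are not modeled here.

```python
def reclaim(target_mb: int, guest_spare_mb: int, chunk_mb: int = 4) -> tuple[int, int]:
    """hypothetical model of the host asking the guest for memory back.

    every round the guest's balloon driver hands back at most chunk_mb,
    and only as long as it still has spare memory it is willing to give.
    returns (mb_returned, rounds_needed).
    """
    returned = 0
    rounds = 0
    while returned < target_mb:
        give = min(chunk_mb, target_mb - returned, guest_spare_mb - returned)
        if give <= 0:  # guest has nothing left to hand out, host gets less than asked
            break
        returned += give
        rounds += 1
    return returned, rounds


print(reclaim(2048, 8192))  # (2048, 512): 2GB in 4MB steps takes 512 rounds
print(reclaim(2048, 1000))  # (1000, 250): guest only had 1000MB to spare
```

the second call is the important case: the host asked for 2GB, but the guest decides how much it actually gives.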

(if the host runs out of free memory, it has to swap or oom-kill some processes, usually the biggest ones, i.e. your kvm/qemu vms)

the other way around works the same: if the vm wants its dynamic memory back, it has to ask the host for it, and the host decides whether to give it back or not. (if the vm does not get the memory fast enough, it has to swap or oom-kill some of its own processes)
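a very simplified sketch of the host side of that decision, assuming the host only looks at its free ram and free swap (real kernels also have caches, ksm and reclaim in the mix, which this ignores). the function name and return strings are made up for illustration.

```python
def guest_asks_for_memory(request_mb: int, host_free_mb: int, host_swap_free_mb: int) -> str:
    """hypothetical, simplified model of the host handling a guest's request
    to give back request_mb of its dynamic memory."""
    if request_mb <= host_free_mb:
        return "granted from free ram"
    if request_mb <= host_free_mb + host_swap_free_mb:
        return "granted, but the host has to swap (slow)"
    # nothing left: the host oom killer picks a victim, usually a big qemu process
    return "host oom"


print(guest_asks_for_memory(4096, 8192, 0))     # granted from free ram
print(guest_asks_for_memory(4096, 1024, 8192))  # granted, but the host has to swap (slow)
```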

as for the question about the minimum size setting: there are programs that do their own memory management and need their whole range right when they start.

e.g. java: if the java app is set to use 8GB and the vm currently has 6GB free plus a possible additional 4GB via the balloon, the java app will crash on startup with out of memory, because ballooning is not fast enough in this case... i see that happen all the time.

in these cases it makes sense to get the minimum setting right.
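a quick sanity check for that minimum, assuming the app really needs its whole heap at startup (as in the java example) and assuming roughly 1GB for the guest os itself. both the helper name and the 1GB overhead are illustrative guesses, not numbers from any tool.

```python
def min_memory_ok(balloon_min_mb: int, app_startup_mb: int, os_overhead_mb: int) -> bool:
    """hypothetical check: the guest can be shrunk down to balloon_min_mb, so
    that floor has to cover what the app grabs at startup plus the guest os."""
    return balloon_min_mb >= app_startup_mb + os_overhead_mb


# java example: 8GB heap, assumed ~1GB for the guest os
print(min_memory_ok(6 * 1024, 8 * 1024, 1024))   # False: a 6GB floor is too low
print(min_memory_ok(10 * 1024, 8 * 1024, 1024))  # True
```

the point is that balloon headroom ("+4GB later") does not count here, only what the vm is guaranteed to have when the app starts.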

also, right-sizing your vms is better than overprovisioning, but it is really hard to get right.

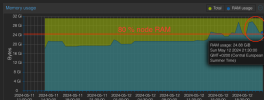

we usually get away with 120% memory and 350% cpu overprovisioning, but every environment is different.
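to make those percentages concrete: the ratio is just allocated vm resources divided by physical host resources. a minimal sketch, assuming a made-up host with 1024GB ram and 64 cores filled with identical 96GB / 16 vcpu vms; the 1.2 and 3.5 limits are the ones from this post, the rest is illustration.

```python
from dataclasses import dataclass


@dataclass
class Vm:
    name: str
    mem_gb: int
    vcpus: int


def overprov_ratios(host_mem_gb: int, host_cores: int, vms: list[Vm]) -> tuple[float, float]:
    """allocated / physical, for memory and for cpu."""
    mem = sum(vm.mem_gb for vm in vms) / host_mem_gb
    cpu = sum(vm.vcpus for vm in vms) / host_cores
    return mem, cpu


def within_limits(host_mem_gb: int, host_cores: int, vms: list[Vm],
                  max_mem: float = 1.2, max_cpu: float = 3.5) -> bool:
    """true if the host stays within the memory and cpu overprovisioning limits."""
    mem, cpu = overprov_ratios(host_mem_gb, host_cores, vms)
    return mem <= max_mem and cpu <= max_cpu


# made-up host: 1024GB ram, 64 cores, twelve 96GB / 16 vcpu vms
vms = [Vm(f"vm{i}", 96, 16) for i in range(12)]
print(overprov_ratios(1024, 64, vms))  # (1.125, 3.0)
print(within_limits(1024, 64, vms))    # True
```

with 14 of those vms the memory ratio hits 1.3125 and the check fails, even though the cpu side would still be fine.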

and for some reason it rubs me the wrong way that it is called ballooning with kvm..

ballooning comes from ESX, where a balloon driver inside the vm (part of vmware tools) gets inflated (like a balloon, hence the name), and the host then maps out those pages. on top of that, every vm on esxi is backed by its own swap file on the host, so a vm might get really slow under low memory conditions but will never be oom-killed by the host.

on kvm/qemu the memory size of your vm shrinks and grows, so dynamic/flexible memory would have been a much better name for it. and if the host does not have enough swap space, it may trigger an oom, depending on how far you push the overprovisioning.
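a rough way to see how much swap the host would need in the worst case on kvm: assume every vm grows back to its configured maximum at the same time. the host_reserved_gb value for the host os is an assumption, and ksm savings are ignored, so treat the result as a pessimistic estimate rather than a sizing rule.

```python
def swap_needed_gb(host_ram_gb: int, vm_max_gb: list[int], host_reserved_gb: int) -> int:
    """hypothetical worst case: every vm grows back to its configured maximum at once.
    whatever does not fit in ram has to come from host swap, otherwise the oom killer steps in."""
    demand = sum(vm_max_gb) + host_reserved_gb
    return max(0, demand - host_ram_gb)


# made-up host: 1024GB ram, 16GB assumed for the host os
print(swap_needed_gb(1024, [96] * 14, 16))  # 336
print(swap_needed_gb(1024, [96] * 10, 16))  # 0
```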