Hello.

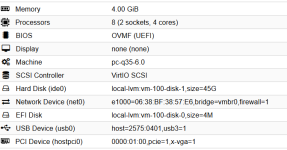

I did a fresh install of PVE 9.0.1 and tried to set up passthrough of my GTX 1650:

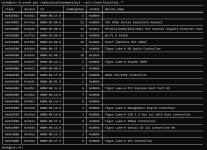


```
root@pve:~# lspci -nnk -s 01:00.0
01:00.0 VGA compatible controller [0300]: NVIDIA Corporation TU117 [GeForce GTX 1650] [10de:1f82] (rev a1)
	Subsystem: Gigabyte Technology Co., Ltd Device [1458:4026]
	Kernel modules: nvidiafb, nouveau
root@pve:~# lspci -nnk -s 01:00.1
01:00.1 Audio device [0403]: NVIDIA Corporation Device [10de:10fa] (rev a1)
	Subsystem: Gigabyte Technology Co., Ltd Device [1458:4026]
	Kernel modules: snd_hda_intel
root@pve:~# cat /etc/modprobe.d/vfio.conf
options vfio-pci ids=10de:1b80,10de:10f0 disable_vga=1
root@pve:~# cat /etc/modprobe.d/
blacklist-nvidia.conf  pve-blacklist.conf  zfs.conf
intel-microcode-blacklist.conf  vfio.conf
root@pve:~# cat /etc/modprobe.d/
root@pve:~# cat /etc/modprobe.d/blacklist-nvidia.conf
blacklist nouveau
blacklist nvidia
blacklist nvidiafb
blacklist rivafb
blacklist snd_hda_intel
blacklist iwlwifi
blacklist input
```
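
One detail worth double-checking in the output above: the `ids=` list in `vfio.conf` (`10de:1b80,10de:10f0`) does not match the vendor:device IDs that `lspci` reports for this card (`10de:1f82` for the GPU and `10de:10fa` for its audio function), and vfio-pci only claims devices whose IDs appear in that list. Below is a minimal sketch for deriving the list from `lspci -nn` instead of typing it by hand, assuming bash, `sed` with `-E`, `paste`, and that both functions of the card sit at slot `01:00` as shown above. The helper names (`vfio_ids_from_lspci`, `configured_vfio_ids`, `same_id_set`) are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch only: helpers for comparing the vfio-pci ids= option with lspci output.
# Assumption: the GPU's functions share one slot prefix (01:00 in this post).

# Read `lspci -nn` output on stdin and print the vendor:device IDs of every
# function at slot $1 (e.g. "01:00"), joined with commas.
vfio_ids_from_lspci() {
  local slot="$1"
  # lspci -nn prints the ID as "[vvvv:dddd]"; the class code "[0300]" has no
  # colon, so it is not matched. Only lines starting with "$slot.N " are kept.
  sed -nE "s/^${slot}\.[0-7] .*\[([0-9a-f]{4}:[0-9a-f]{4})\].*/\1/p" \
    | paste -sd, -
}

# Read a modprobe.d file on stdin and print the value of ids= from its
# "options vfio-pci ..." line.
configured_vfio_ids() {
  sed -nE 's/^options[[:space:]]+vfio-pci.*[[:space:]]ids=([^[:space:]]+).*/\1/p'
}

# Succeed if two comma-separated ID lists contain the same IDs,
# ignoring order and duplicates.
same_id_set() {
  [[ "$(tr ',' '\n' <<<"$1" | sort -u)" == "$(tr ',' '\n' <<<"$2" | sort -u)" ]]
}

# Example usage on the host:
#   wanted=$(lspci -nn | vfio_ids_from_lspci 01:00)
#   have=$(configured_vfio_ids < /etc/modprobe.d/vfio.conf)
#   same_id_set "$wanted" "$have" || echo "vfio.conf has $have, lspci reports $wanted"
```

If the two lists differ, the `options` line would need the IDs that `lspci` actually reports. On Proxmox, changes under `/etc/modprobe.d/` generally only take effect after regenerating the initramfs (`update-initramfs -u -k all`) and rebooting.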

But in the `dmesg` output from boot, I don't see anything about "vfio":

```
[ 16.308443] vmbr0: port 1(enp4s0) entered forwarding state
[ 17.148471] vmbr0: port 1(enp4s0) entered disabled state
[ 19.796336] r8169 0000:04:00.0 enp4s0: Link is Up - 1Gbps/Full - flow control rx/tx
[ 19.796357] vmbr0: port 1(enp4s0) entered blocking state
[ 19.796360] vmbr0: port 1(enp4s0) entered forwarding state
root@pve:~# dmesg | grep nvidia
root@pve:~# dmesg | grep nouveau
root@pve:~# dmesg | grep vfio
```
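
The `lspci -nnk` output earlier in the post lists only `Kernel modules:` and no `Kernel driver in use:` line for either function, which means no driver was bound to the card when it was captured (a device claimed by vfio-pci would show `Kernel driver in use: vfio-pci`). Below is a minimal sketch for checking the binding directly through sysfs, assuming bash and the standard `/sys/bus/pci/devices/<address>/driver` symlink; `bound_driver` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch only: report which kernel driver (if any) is bound to a PCI function.

# Print the driver bound to PCI address $1 (full form, e.g. 0000:01:00.0),
# or "none" if there is no driver symlink. $2 is an optional sysfs root that
# defaults to /sys; it exists only so the helper can be tested on a fake tree.
bound_driver() {
  local addr="$1" root="${2:-/sys}"
  local link="$root/bus/pci/devices/$addr/driver"
  if [[ -L "$link" ]]; then
    # The symlink points at .../bus/pci/drivers/<name>; keep only <name>.
    basename "$(readlink "$link")"
  else
    echo none
  fi
}

# Example usage on the host:
#   for f in 0000:01:00.0 0000:01:00.1; do echo "$f: $(bound_driver "$f")"; done
# With a working vfio-pci setup, both functions are expected to report vfio-pci.
```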

If you have any ideas, feel free to share.