Feb 01 19:28:12 pve3 kernel: </TASK>

Feb 01 19:28:12 pve3 kernel: R13: 0000000000000001 R14: 0000000000000000 R15: 0000000000000000

Feb 01 19:28:12 pve3 kernel: R10: 0000000000000000 R11: 0000000000000246 R12: 00006061e5e21080

Feb 01 19:28:12 pve3 kernel: RBP: 000000000000000b R08: 0000000000000000 R09: 0000000000000000

Feb 01 19:28:12 pve3 kernel: RDX: 0000000000000000 RSI: 0000000000000000 RDI: 000000000000000b

Feb 01 19:28:12 pve3 kernel: RAX: ffffffffffffffda RBX: 0000726d4cc67500 RCX: 0000726d4f0a59ee

Feb 01 19:28:12 pve3 kernel: RSP: 002b:00007ffdc481f778 EFLAGS: 00000246 ORIG_RAX: 0000000000000003

Feb 01 19:28:12 pve3 kernel: RIP: 0033:0x726d4f0a59ee

Feb 01 19:28:12 pve3 kernel: entry_SYSCALL_64_after_hwframe+0x76/0x7e

Feb 01 19:28:12 pve3 kernel: ? exc_page_fault+0x90/0x1b0

Feb 01 19:28:12 pve3 kernel: ? irqentry_exit+0x43/0x50

Feb 01 19:28:12 pve3 kernel: ? irqentry_exit_to_user_mode+0x2e/0x290

Feb 01 19:28:12 pve3 kernel: ? do_user_addr_fault+0x2f8/0x830

Feb 01 19:28:12 pve3 kernel: ? handle_mm_fault+0x254/0x370

Feb 01 19:28:12 pve3 kernel: ? count_memcg_events+0xd7/0x1a0

Feb 01 19:28:12 pve3 kernel: ? __handle_mm_fault+0x62a/0xfd0

Feb 01 19:28:12 pve3 kernel: ? numa_rebuild_single_mapping.isra.0+0x13f/0x1c0

Feb 01 19:28:12 pve3 kernel: ? mpol_misplaced+0x69/0x230

Feb 01 19:28:12 pve3 kernel: ? task_numa_fault+0x68/0xb90

Feb 01 19:28:12 pve3 kernel: ? node_is_toptier+0x42/0x60

Feb 01 19:28:12 pve3 kernel: do_syscall_64+0x80/0xa30

Feb 01 19:28:12 pve3 kernel: x64_sys_call+0x1742/0x2330

Feb 01 19:28:12 pve3 kernel: __x64_sys_close+0x3e/0x90

Feb 01 19:28:12 pve3 kernel: fput_close_sync+0x3d/0xa0

Feb 01 19:28:12 pve3 kernel: __fput+0xed/0x2d0

Feb 01 19:28:12 pve3 kernel: blkdev_release+0x11/0x20

Feb 01 19:28:12 pve3 kernel: bdev_release+0x171/0x1b0

Feb 01 19:28:12 pve3 kernel: filemap_write_and_wait_range+0xd5/0x130

Feb 01 19:28:12 pve3 kernel: __filemap_fdatawait_range+0x87/0xf0

Feb 01 19:28:12 pve3 kernel: folio_wait_writeback+0x2b/0xa0

Feb 01 19:28:12 pve3 kernel: folio_wait_bit+0x18/0x30

Feb 01 19:28:12 pve3 kernel: ? __pfx_wake_page_function+0x10/0x10

Feb 01 19:28:12 pve3 kernel: folio_wait_bit_common+0x124/0x2f0

Feb 01 19:28:12 pve3 kernel: io_schedule+0x4c/0x80

Feb 01 19:28:12 pve3 kernel: schedule+0x27/0xf0

Feb 01 19:28:12 pve3 kernel: ? asm_sysvec_apic_timer_interrupt+0x1b/0x20

Feb 01 19:28:12 pve3 kernel: __schedule+0x468/0x1310

Feb 01 19:28:12 pve3 kernel: <TASK>

Feb 01 19:28:12 pve3 kernel: Call Trace:

Feb 01 19:28:12 pve3 kernel: task:qemu-img state:D stack:0 pid:54031 tgid:54031 ppid:53944 task_flags:0x400100 flags:0x00004002

Feb 01 19:28:12 pve3 kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Feb 01 19:28:12 pve3 kernel: Tainted: P IO 6.17.4-2-pve #1

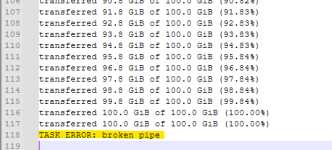

Feb 01 19:28:12 pve3 kernel: INFO: task qemu-img:54031 blocked for more than 122 seconds.