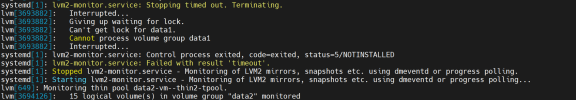

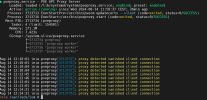

We found a machine whose migration was still hanging on one of our two hosts. The VM itself was running properly, but the hypervisor was showing all sorts of question marks. We stopped one of the VMs (215), and now it refuses to start. At first it looked like a lock issue, but now I get the error `unable to read tail (got 0 bytes instead)`.
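Since a lock was the first suspect, here is a minimal sketch for checking it, assuming that a leftover lock shows up as a `lock:` line in the `qm config 215` output (for example `lock: migrate` after an interrupted migration). The helper name `lock_state` is hypothetical; `qm config` and `qm unlock` are the regular Proxmox commands.

```shell
#!/bin/sh
# Hypothetical helper: read `qm config <vmid>` output on stdin and print the
# value of the "lock:" entry, or "none" if the config has no lock.
lock_state() {
    # sed prints only the text after "lock:" on a matching line
    lock=$(sed -n 's/^lock: *//p')
    echo "${lock:-none}"
}

# Usage on the node:
#   qm config 215 | lock_state
#   qm unlock 215   # only once you are sure no migration is still running
```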

```
a /var/lock/qemu-server # qm showcmd 215 --pretty
/usr/bin/kvm \
  -id 215 \
  -name 'glpi--SV36-127--web-helpdesk,debug-threads=on' \
  -no-shutdown \
  -chardev 'socket,id=qmp,path=/var/run/qemu-server/215.qmp,server=on,wait=off' \
  -mon 'chardev=qmp,mode=control' \
  -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' \
  -mon 'chardev=qmp-event,mode=control' \
  -pidfile /var/run/qemu-server/215.pid \
  -daemonize \
  -smbios 'type=1,uuid=b350704f-e282-4461-83e3-7fe86f6ab037' \
  -smp '4,sockets=1,cores=4,maxcpus=4' \
  -nodefaults \
  -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' \
  -vnc 'unix:/var/run/qemu-server/215.vnc,password=on' \
  -cpu kvm64,enforce,+kvm_pv_eoi,+kvm_pv_unhalt,+lahf_lm,+sep \
  -m 8192 \
  -device 'pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e' \
  -device 'pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f' \
  -device 'piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2' \
  -device 'usb-tablet,id=tablet,bus=uhci.0,port=1' \
  -device 'VGA,id=vga,bus=pci.0,addr=0x2' \
  -chardev 'socket,path=/var/run/qemu-server/215.qga,server=on,wait=off,id=qga0' \
  -device 'virtio-serial,id=qga0,bus=pci.0,addr=0x8' \
  -device 'virtserialport,chardev=qga0,name=org.qemu.guest_agent.0' \
  -device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3,free-page-reporting=on' \
  -iscsi 'initiator-name=iqn.1993-08.org.debian:01:a27619c4c6c1' \
  -drive 'if=none,id=drive-ide2,media=cdrom,aio=io_uring' \
  -device 'ide-cd,bus=ide.1,unit=0,drive=drive-ide2,id=ide2,bootindex=200' \
  -drive 'file=/dev/data2/vm-215-disk-0,if=none,id=drive-virtio0,format=raw,cache=none,aio=io_uring,detect-zeroes=on' \
  -device 'virtio-blk-pci,drive=drive-virtio0,id=virtio0,bus=pci.0,addr=0xa,bootindex=100' \
  -netdev 'type=tap,id=net0,ifname=tap215i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' \
  -device 'virtio-net-pci,mac=7E:49:E5:1D:50
E,netdev=net0,bus=pci.0,addr=0x12,id=net0,rx_queue_size=1024,tx_queue_size=256,bootindex=300' \
  -machine 'type=pc+pve0'
```
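The start command points the boot disk at `/dev/data2/vm-215-disk-0`, so one thing worth ruling out is that this volume is missing or not active on this host after the interrupted migration (an LVM volume that is not active normally has no `/dev` node either). Below is a minimal sketch, assuming a shell with `grep -o` (GNU grep, as shipped on Debian/Proxmox); the function name `check_drive_paths` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `qm showcmd` output on stdin, extract every
# file=... path passed to -drive, and report whether it exists on this host.
check_drive_paths() {
    # grep -o prints only "file=<path>" (stopping at the next comma or quote);
    # cut keeps everything after the first "=".
    grep -o "file=[^,']*" | cut -d= -f2- | while IFS= read -r path; do
        if [ -e "$path" ]; then
            echo "present: $path"
        else
            echo "MISSING: $path"
        fi
    done
}

# Usage on the node:
#   qm showcmd 215 --pretty | check_drive_paths
```

If the disk is reported as missing, `lvs data2` should show whether the volume exists at all in that volume group on this host.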