PVE 8.3.0, with a VM running Ubuntu 24.04.1 (fully updated) that has Docker and portainer-agent installed.

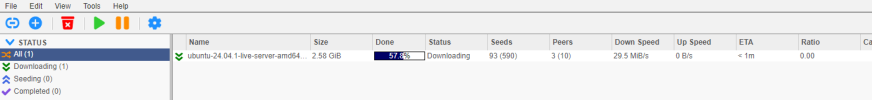

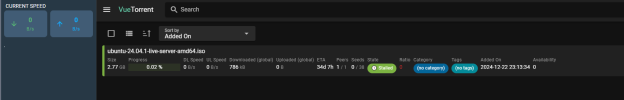

I have a Docker container of binhex/arch-qbittorrentvpn. It's been running fine for years, but recently nothing downloads. When I checked a few days ago, the current speed was zero. I added a bunch of Linux ISOs and the top-seeded torrents just to have something that will definitely download at max speed. After a restart, a bunch of torrents start and their speed shoots up for a single 3-second refresh cycle before everything drops to zero. I tested several of those torrents on my desktop and they all download at full speed (500 Mbps), so the specific torrents aren't the problem.

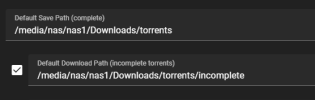

The last time this happened, it was because I ran out of space in the VM (with no room left to download to, it makes sense that the download speed dropped to zero). When that happened last year, I extended the LV from 100G to 200G and moved the incomplete folder to the NAS, so it should never run out of space again...

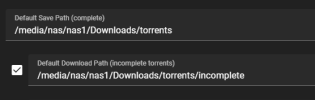

Everything I can see shows plenty of space left. qBittorrent is configured to save both incomplete and completed downloads to my NAS, which has about 13 TB free.
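Besides free space, it may be worth ruling out that the download directories still accept writes: an NFS mount that has gone read-only or stale, or that the container's user has lost permission on, can look a lot like a full disk from qBittorrent's side. This is a minimal sketch, not a definitive test. `check_writable` is a hypothetical helper name, and the container's internal download path isn't shown in this post, so the path you test is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm that a download directory accepts writes.
# A read-only, stale, or permission-denied mount can stall downloads much
# like a full disk does.
check_writable() {
  local dir="$1" probe
  if [ ! -d "$dir" ]; then
    echo "MISSING: $dir"
    return 1
  fi
  # mktemp creates a uniquely named file inside $dir, or fails if it can't.
  if probe=$(mktemp "$dir/.write-test.XXXXXX" 2>/dev/null); then
    rm -f -- "$probe"   # clean up the probe file
    echo "OK: $dir"
  else
    echo "NOT WRITABLE: $dir"
    return 1
  fi
}

# Example (path taken from the VM's df output; adjust to your mapping):
#   check_writable /media/nas/nas1
```

Note that a root shell on the VM can succeed where the container's own user fails, so running the same `mktemp` probe inside the container (for example via `docker exec`) against the path mapped as the incomplete folder tests what qBittorrent actually sees.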

All of the following were run inside the VM:

I've given it a 200G LV, and it's using 24G (13%). `ncdu -x /` doesn't show anything untoward, just where the 24G is going.

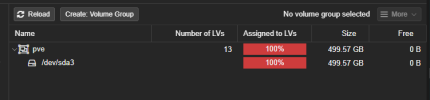

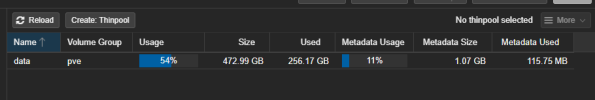

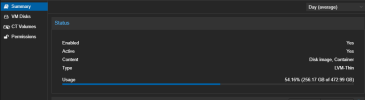

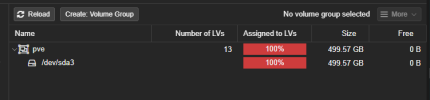

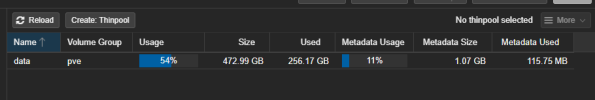

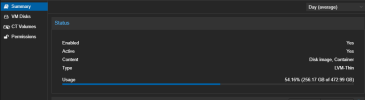
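The space checks above look at block usage only; a filesystem can also refuse new files when it runs out of inodes, which `df -h` doesn't show. A small sketch that flags any mount at or above a usage threshold, assuming GNU coreutils `df` in POSIX output mode. `over_threshold` is a hypothetical name and the 90% default is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print mounts whose usage meets or exceeds a threshold.
# Reads POSIX-format df output on stdin: `df -P` (blocks) or `df -Pi` (inodes).
# In both formats column 5 is the percentage and column 6 the mount point
# (mount points containing spaces would be truncated to their first word).
over_threshold() {
  local limit="${1:-90}"
  awk -v limit="$limit" 'NR > 1 {
    pct = $5
    sub(/%$/, "", pct)
    # Some filesystems report "-" for inode usage; skip non-numeric values.
    if (pct ~ /^[0-9]+$/ && pct + 0 >= limit + 0) print $6 " " $5
  }'
}

# Usage:
#   df -P  | over_threshold 90   # block usage
#   df -Pi | over_threshold 90   # inode usage
```

Empty output means nothing crossed the threshold.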

As for the host, I have over-allocated storage to VMs/CTs, but I've always done that and never run into issues as long as I'm not at the limit of the drive (please correct me if I'm wrong). Is there a command to run on the PVE1 host that shows this better?
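Over-allocation on an LVM-thin pool is generally workable as long as the pool's real usage has headroom; once the pool's data or metadata space fills, writes that need new blocks stall or fail inside the guests. `df -h` on the host won't show this, because the thin pool isn't a mounted filesystem. Assuming the VM disk lives on the default `local-lvm` storage (the thin pool `pve/data`; the post doesn't say which storage the VM uses), `pvesm status` gives a per-storage summary and `lvs` shows the pool's Data% and Meta% directly. The sketch below filters `lvs` output down to thin pools; `pool_report` and the 90% threshold are hypothetical choices.

```shell
#!/usr/bin/env bash
# Sketch for the PVE host. ASSUMPTION: VM disks are on an LVM-thin pool
# (default local-lvm = pve/data). What matters is the pool's actual
# Data% and Meta%, not the sum of the virtual disk sizes.
pool_report() {
  local limit="${1:-90}"
  # Input lines: "vg,lv,segtype,data%,meta%" (see the lvs call below).
  awk -F, -v limit="$limit" '
    { sub(/^[ \t]+/, "") }   # lvs --noheadings indents each line
    $3 == "thin-pool" {
      status = ($4 + 0 >= limit + 0 || $5 + 0 >= limit + 0) ? "WARN" : "ok"
      printf "%s %s/%s data=%s%% meta=%s%%\n", status, $1, $2, $4, $5
    }'
}

# Real invocation (run as root on the host):
#   lvs --noheadings --separator , \
#     -o vg_name,lv_name,segtype,data_percent,metadata_percent | pool_report 90
```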

I'm at a loss as to why the torrent downloads aren't working. All the symptoms point to running out of space, but I can't for the life of me see where or why.

Text output of the commands run inside the VM:

```
austempest@ubuntu-server:~$ df -h
Filesystem                          Size  Used Avail Use% Mounted on
tmpfs                               1.2G  1.6M  1.2G   1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv   196G   24G  164G  13% /
tmpfs                               5.4G     0  5.4G   0% /dev/shm
tmpfs                               5.0M     0  5.0M   0% /run/lock
/dev/sda2                           974M  182M  725M  21% /boot
192.168.1.120:/volume1/nas1          42T   29T   13T  70% /media/nas/nas1
192.168.1.120:/volume2/nas2          11T  1.6T  9.0T  15% /media/nas/nas2
tmpfs                               1.1G   12K  1.1G   1% /run/user/1000
```

```
austempest@ubuntu-server:~$ sudo lvdisplay
  --- Logical volume ---
  LV Path                /dev/ubuntu-vg/ubuntu-lv
  LV Name                ubuntu-lv
  VG Name                ubuntu-vg
  LV UUID                uNeOgK-xYju-8sFh-U774-mcM7-aRIG-5MJHNI
  LV Write Access        read/write
  LV Creation host, time ubuntu-server, 2021-01-12 22:41:14 +1100
  LV Status              available
  # open                 1
  LV Size                <199.00 GiB
  Current LE             50943
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           252:0
```

```
austempest@ubuntu-server:~$ sudo vgdisplay
  --- Volume group ---
  VG Name               ubuntu-vg
  System ID
  Format                lvm2
  Metadata Areas        1
  Metadata Sequence No  9
  VG Access             read/write
  VG Status             resizable
  MAX LV                0
  Cur LV                1
  Open LV               1
  Max PV                0
  Cur PV                1
  Act PV                1
  VG Size               <199.00 GiB
  PE Size               4.00 MiB
  Total PE              50943
  Alloc PE / Size       50943 / <199.00 GiB
  Free  PE / Size       0 / 0
  VG UUID               zbiwgw-DStT-f44L-VGiI-1ma6-jfDp-uGKFrd
```

On the PVE host:

```
root@pve1:~# df -h
Filesystem               Size  Used Avail Use% Mounted on
udev                     7.8G     0  7.8G   0% /dev
tmpfs                    1.6G  2.6M  1.6G   1% /run
/dev/mapper/pve-root      16G   11G  4.0G  73% /
tmpfs                    7.8G   66M  7.7G   1% /dev/shm
tmpfs                    5.0M     0  5.0M   0% /run/lock
efivarfs                 128K  116K  7.6K  94% /sys/firmware/efi/efivars
/dev/sda2                511M  328K  511M   1% /boot/efi
/dev/fuse                128M   36K  128M   1% /etc/pve
//192.168.1.120/proxmox   42T   29T   13T  70% /mnt/pve/nas
tmpfs                    1.6G     0  1.6G   0% /run/user/0
```