Logging this here (or does the team prefer bug reports for test items?)


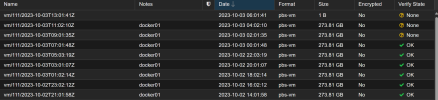

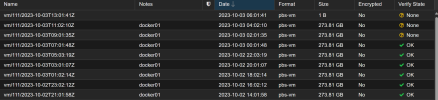

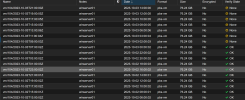

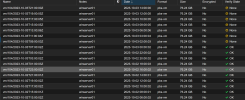


Edit TL;DR: this is a hung guest VM. No idea why it hung, and there is nothing in dmesg etc., but I'm happy to try to figure it out. Looking at the log below, it seems the freeze command was issued and processed.

Then the thaw command was issued, and that was not processed.

```
INFO: attaching TPM drive to QEMU for backup
INFO: issuing guest-agent 'fs-freeze' command
INFO: issuing guest-agent 'fs-thaw' command
ERROR: VM 111 qmp command 'guest-fsfreeze-thaw' failed - got timeout
ERROR: VM 111 qmp command 'backup' failed - got timeout
```

I assume something hung in the VM or at a KVM/QEMU level.

I cleared this by stopping the node.
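The TL;DR reading of the log (the freeze went through, the thaw is where things stopped) can be checked mechanically. The sketch below is a hypothetical helper, not part of Proxmox: it assumes the vzdump task-log line formats quoted above and lists, in order, the guest-agent commands that were issued and the QMP commands that failed.

```python
from __future__ import annotations

import re

# Line formats as they appear in the vzdump task log quoted above (assumed stable).
ISSUED = re.compile(r"issuing guest-agent '([\w-]+)' command")
FAILED = re.compile(r"qmp command '([\w-]+)' failed - (.+)$")


def summarize(log: str) -> dict[str, list]:
    """Return guest-agent commands issued and QMP commands that failed, in log order."""
    issued: list[str] = []
    failed: list[tuple[str, str]] = []
    for line in log.splitlines():
        match = ISSUED.search(line)
        if match:
            issued.append(match.group(1))
            continue
        match = FAILED.search(line)
        if match:
            failed.append((match.group(1), match.group(2).strip()))
    return {"issued": issued, "failed": failed}


def first_timeout(summary: dict[str, list]) -> str | None:
    """Return the first failed QMP command whose error mentions a timeout."""
    for command, reason in summary["failed"]:
        if "timeout" in reason:
            return command
    return None


LOG = """\
INFO: attaching TPM drive to QEMU for backup
INFO: issuing guest-agent 'fs-freeze' command
INFO: issuing guest-agent 'fs-thaw' command
ERROR: VM 111 qmp command 'guest-fsfreeze-thaw' failed - got timeout
ERROR: VM 111 qmp command 'backup' failed - got timeout
"""

if __name__ == "__main__":
    summary = summarize(LOG)
    print(summary["issued"])       # ['fs-freeze', 'fs-thaw']
    print(first_timeout(summary))  # guest-fsfreeze-thaw
```

No error is logged for the freeze, and the thaw is the first command that timed out, which is consistent with the reading above. It cannot tell whether the guest agent or QEMU itself stopped responding.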

----original post---

I thought disabling the pvetest repo would let me go back to previous versions, but I guess not. What is the apt command to revert to the previous version? Or do I just uninstall and reinstall the backup client and server packages with the pvetest repo disabled?
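On the revert question: disabling a repository does not make apt downgrade anything, because the installed (newer) version is still preferred. Going back means requesting an explicit older version with `apt-get install <package>=<version>`, which only works if that version is still offered by an enabled repository (`apt-cache policy <package>` lists the versions apt can see). A minimal sketch that builds such a command line; `downgrade_command` is a hypothetical helper, and the versions have to come from `apt-cache policy`, not from this example.

```python
from __future__ import annotations

import shlex


def downgrade_command(targets: dict[str, str]) -> str:
    """Build an `apt-get install pkg=version ...` command line.

    `targets` maps package name -> exact version. Each version must already be
    listed by `apt-cache policy <pkg>` from an enabled repository; this helper
    does not check that, nor does it resolve dependencies.
    """
    if not targets:
        raise ValueError("no packages given")
    # apt-get installs exactly the requested version; if it is older than the
    # installed one, apt lists the package as DOWNGRADED and asks to confirm.
    specs = [f"{name}={version}" for name, version in sorted(targets.items())]
    return shlex.join(["apt-get", "install", *specs])
```

Running the resulting command as root shows apt's plan before anything changes. Which packages to include (for example `proxmox-backup-client` and `proxmox-backup-file-restore` from the package list below) is a judgment call that this sketch leaves to you.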

```
INFO: status = running
INFO: VM Name: docker01
VM 111 qmp command 'query-status' failed - unable to connect to VM 111 qmp socket - timeout after 51 retries
ERROR: Backup of VM 111 failed - VM 111 qmp command 'query-version' failed - unable to connect to VM 111 qmp socket - timeout after 51 retries
INFO: Failed at 2023-10-03 14:01:17
INFO: Backup job finished with errors
```

Interestingly, everything was fine until 6am this morning; before that it was backing up fine. Now it has a weird stalled 1B thing...
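The "unable to connect to VM 111 qmp socket" errors mean QEMU's QMP monitor stopped answering, not just the guest agent. One way to check that outside vzdump is to speak QMP to the socket directly: read the greeting, send `qmp_capabilities`, then `query-status`. A minimal Python sketch, assuming the PVE socket path `/var/run/qemu-server/<vmid>.qmp` and QEMU's default of one JSON message per line; `qmp_query_status` is a hypothetical helper name.

```python
from __future__ import annotations

import json
import socket


def qmp_query_status(path: str, timeout: float = 3.0) -> str:
    """Ask QEMU for its run state over a QMP UNIX socket.

    Raises OSError (socket timeouts included) if the socket is unusable or
    QEMU does not answer within `timeout` seconds per operation, and
    RuntimeError on an unexpected greeting or a QMP error reply.
    """
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        sock.connect(path)
        with sock.makefile("r", encoding="utf-8") as reader:

            def next_message() -> dict:
                # Skip asynchronous events; return the next greeting or reply.
                while True:
                    line = reader.readline()
                    if not line:
                        raise ConnectionError("QMP socket closed by QEMU")
                    message = json.loads(line)
                    if "event" not in message:
                        return message

            def execute(command: str) -> dict:
                sock.sendall((json.dumps({"execute": command}) + "\n").encode())
                reply = next_message()
                if "error" in reply:
                    raise RuntimeError(f"QMP {command} failed: {reply['error']}")
                return reply["return"]

            greeting = next_message()
            if "QMP" not in greeting:
                raise RuntimeError(f"unexpected QMP greeting: {greeting}")
            execute("qmp_capabilities")  # leave capabilities-negotiation mode
            return execute("query-status")["status"]
```

On a healthy VM, `qmp_query_status("/var/run/qemu-server/111.qmp")` should return a state such as `running` or `paused`; a timeout suggests QEMU itself is stuck, which would match the `backup-cancel` and `cont` failures in the log below. A QMP socket may serve only one client at a time, so it is probably best not to run this while a backup job is talking to the same VM.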

No issue with the backup of another VM on the same node to the same PBS:

Ahh, this seems to be the first backup that failed, and it has more details:

```
INFO: Starting Backup of VM 111 (qemu)
INFO: Backup started at 2023-10-03 06:01:41
INFO: status = running
INFO: VM Name: docker01
INFO: include disk 'scsi0' 'local-lvm:vm-111-disk-2' 127G
INFO: include disk 'scsi1' 'local-lvm:vm-111-disk-3' 128G
INFO: include disk 'efidisk0' 'local-lvm:vm-111-disk-0' 4M
INFO: include disk 'tpmstate0' 'local-lvm:vm-111-disk-1' 4M
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: creating Proxmox Backup Server archive 'vm/111/2023-10-03T13:01:41Z'
INFO: attaching TPM drive to QEMU for backup
INFO: issuing guest-agent 'fs-freeze' command
INFO: issuing guest-agent 'fs-thaw' command
ERROR: VM 111 qmp command 'guest-fsfreeze-thaw' failed - got timeout
ERROR: VM 111 qmp command 'backup' failed - got timeout
INFO: aborting backup job
ERROR: VM 111 qmp command 'backup-cancel' failed - unable to connect to VM 111 qmp socket - timeout after 5986 retries
INFO: resuming VM again
ERROR: Backup of VM 111 failed - VM 111 qmp command 'cont' failed - unable to connect to VM 111 qmp socket - timeout after 450 retries
```

Package list:

```
proxmox-ve: 8.0.2 (running kernel: 6.2.16-15-pve)
pve-manager: 8.0.6 (running version: 8.0.6/57490ff2c6a38448)
pve-kernel-6.2: 8.0.5
proxmox-kernel-helper: 8.0.3
proxmox-kernel-6.2.16-15-pve: 6.2.16-15
proxmox-kernel-6.2: 6.2.16-15
proxmox-kernel-6.2.16-14-pve: 6.2.16-14
proxmox-kernel-6.2.16-12-pve: 6.2.16-12
pve-kernel-6.2.16-3-pve: 6.2.16-3
ceph: 18.2.0-pve2
ceph-fuse: 18.2.0-pve2
corosync: 3.1.7-pve3
criu: 3.17.1-2
glusterfs-client: 10.3-5
ifupdown2: 3.2.0-1+pmx5
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-4
libknet1: 1.26-pve1
libproxmox-acme-perl: 1.4.6
libproxmox-backup-qemu0: 1.4.0
libproxmox-rs-perl: 0.3.1
libpve-access-control: 8.0.5
libpve-apiclient-perl: 3.3.1
libpve-common-perl: 8.0.9
libpve-guest-common-perl: 5.0.5
libpve-http-server-perl: 5.0.4
libpve-rs-perl: 0.8.6
libpve-storage-perl: 8.0.3
libspice-server1: 0.15.1-1
lvm2: 2.03.16-2
lxc-pve: 5.0.2-4
lxcfs: 5.0.3-pve3
novnc-pve: 1.4.0-2
proxmox-backup-client: 3.0.3-1
proxmox-backup-file-restore: 3.0.3-1
proxmox-kernel-helper: 8.0.3
proxmox-mail-forward: 0.2.0
proxmox-mini-journalreader: 1.4.0
proxmox-widget-toolkit: 4.0.8
pve-cluster: 8.0.4
pve-container: 5.0.5
pve-docs: 8.0.5
pve-edk2-firmware: 3.20230228-4
pve-firewall: 5.0.3
pve-firmware: 3.8-2
pve-ha-manager: 4.0.2
pve-i18n: 3.0.7
pve-qemu-kvm: 8.0.2-6
pve-xtermjs: 4.16.0-3
qemu-server: 8.0.7
smartmontools: 7.3-pve1
spiceterm: 3.3.0
swtpm: 0.8.0+pve1
vncterm: 1.8.0
zfsutils-linux: 2.1.13-pve1
```
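The package list looks like `pveversion -v` output, so one way to see exactly what a pvetest upgrade changed is to save that output before and after and compare the two. A small sketch under that assumption; both helper names are hypothetical.

```python
from __future__ import annotations


def parse_pveversion(text: str) -> dict[str, str]:
    """Map package name -> version from `pveversion -v` style "name: version" lines."""
    versions: dict[str, str] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep or not rest.strip():
            continue  # blank lines or labels such as "Code:"
        # Keep only the first token, dropping notes like "(running kernel: ...)".
        versions[name.strip()] = rest.split()[0]
    return versions


def changed_packages(
    before: dict[str, str], after: dict[str, str]
) -> dict[str, tuple[str | None, str | None]]:
    """Return {package: (old_version, new_version)} for every package that differs."""
    names = sorted(before.keys() | after.keys())
    return {
        name: (before.get(name), after.get(name))
        for name in names
        if before.get(name) != after.get(name)
    }
```

The packages that differ between the two snapshots are the ones to consider when reverting; a package missing from one side shows up with `None` for that version.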
