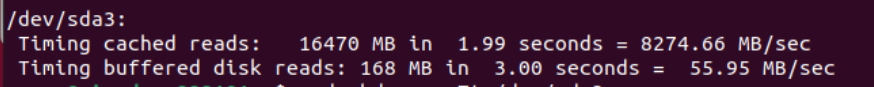

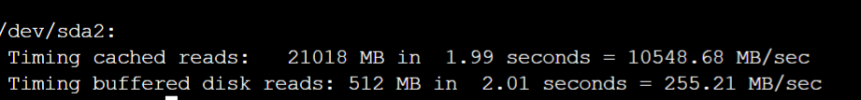

Was looking today and noticed significantly slower speeds on an Ubuntu guest VM for hdparm -Tt /dev/sda2 than for that same partition on the host node directly on the console.

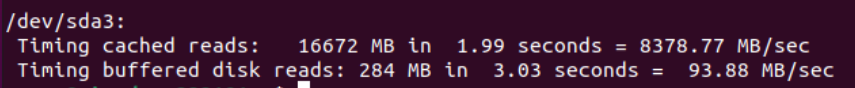

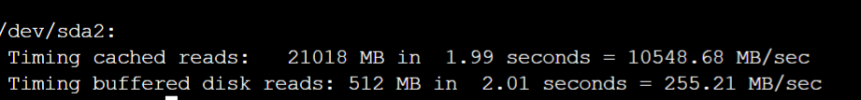

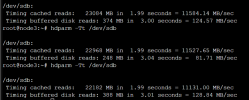

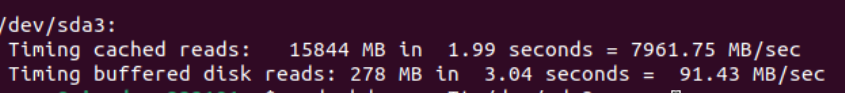

This is directly on the Proxmox node to the attached SSD /dev/sda2

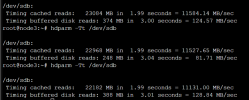

This is directly on the Proxmox node to the attached SATA HDD /dev/sdb

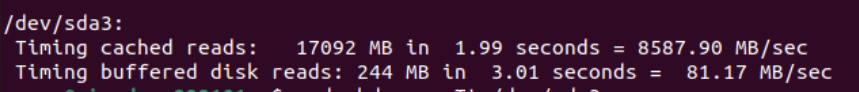

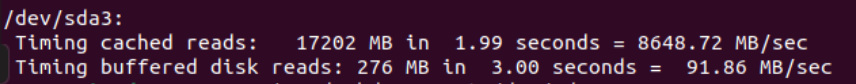

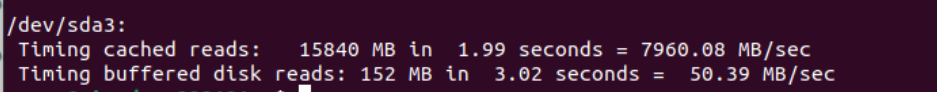

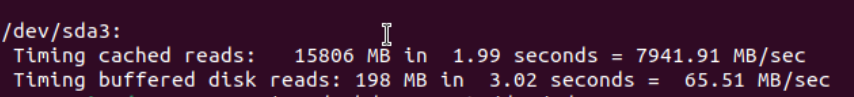

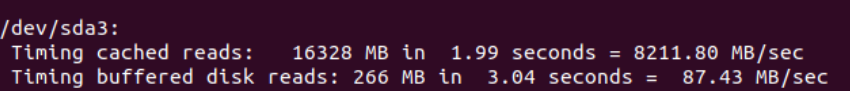

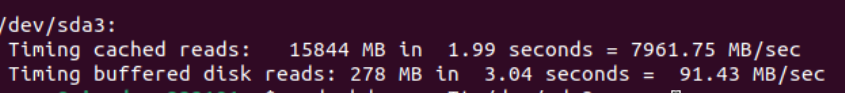

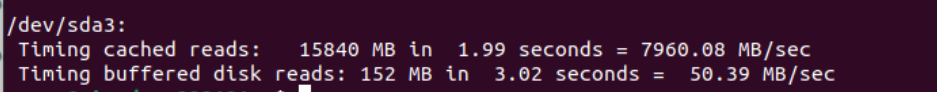

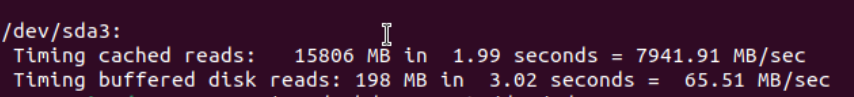

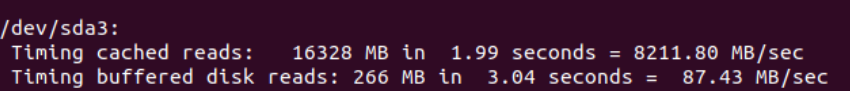

This is on the Ubuntu guest VM on that same node, installed to Ceph storage

Funny how the buffered read keeps getting better ...

Anyhow - the read performance for the guest VM from that same HDD seems off... maybe it's because the host node is reading directly from the attached HDD and the VM is going through the OSD layer?

Seems like a significant reduction though...

Any places I can check to learn more and figure out how to speed up access a little for the VMs?

Ideas?

Using the entire host 1TB drive as OSD for CephPool1

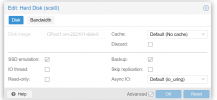

Created VM using CephPool1 for HDD - VirtIO SCSI - default, no cache on the VM HDD setup.
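
To put a number on the gap instead of eyeballing screenshots, here is a minimal sketch that parses the text output of `hdparm -Tt` from the host and from the guest and prints the slowdown factor. The helper names (`parse_hdparm`, `slowdown`) and the sample figures are made up for illustration and are not my real results. It assumes the usual `Timing ... = N MB/sec` output lines and only handles `MB/sec` and `kB/sec` units.

```python
import re

# Matches hdparm -Tt result lines such as:
#  " Timing buffered disk reads: 600 MB in  3.00 seconds = 200.00 MB/sec"
LINE_RE = re.compile(
    r"Timing (cached|buffered disk) reads:.*=\s*([\d.]+)\s*(MB|kB)/sec"
)

# Assumption: only these two units appear in the output being parsed.
UNIT_TO_MB = {"kB": 1 / 1024, "MB": 1.0}


def parse_hdparm(text):
    """Return {'cached': MB/s, 'buffered': MB/s} for whichever lines are present."""
    results = {}
    for kind, value, unit in LINE_RE.findall(text):
        key = "cached" if kind == "cached" else "buffered"
        results[key] = float(value) * UNIT_TO_MB[unit]
    return results


def slowdown(host, guest):
    """Host throughput divided by guest throughput, per test type."""
    return {k: host[k] / guest[k] for k in host if k in guest and guest[k] > 0}


# Placeholder samples only (hypothetical numbers, not measurements).
HOST_SAMPLE = """/dev/sdb:
 Timing cached reads:   20000 MB in  2.00 seconds = 10000.00 MB/sec
 Timing buffered disk reads: 600 MB in  3.00 seconds = 200.00 MB/sec
"""

GUEST_SAMPLE = """/dev/sda2:
 Timing cached reads:   16000 MB in  2.00 seconds = 8000.00 MB/sec
 Timing buffered disk reads: 150 MB in  3.00 seconds = 50.00 MB/sec
"""

if __name__ == "__main__":
    factors = slowdown(parse_hdparm(HOST_SAMPLE), parse_hdparm(GUEST_SAMPLE))
    for test, factor in factors.items():
        print(f"{test}: host is {factor:.2f}x faster than guest")
```

Paste the real output of each run in place of the samples. Note that the cached figure (`-T`) mostly reflects memory and CPU speed rather than the disk, so the buffered figure (`-t`) is the one that says something about the Ceph path.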
