Hi everyone,

I have a problem that I can't find a solution to.

One of my VMs often shuts down during the night, and in the morning I have to start it again manually.

This happens every Sunday night. I thought it was caused by concurrent backups, but tonight it happened again.

The PVE version is 7.1-7 and the host runs two VMs. The VM that goes down is the Windows one.

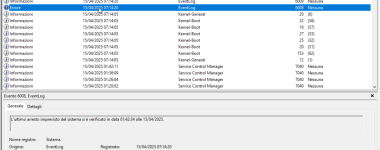

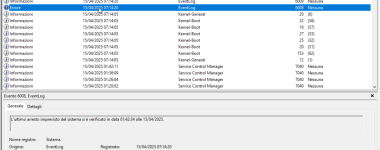

Below are the Windows event log around the time of the shutdown and the host log.

Thanks in advance to anyone who can help me read the logs.

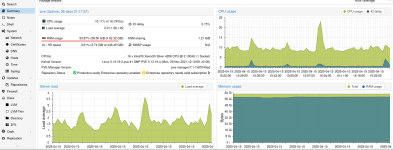

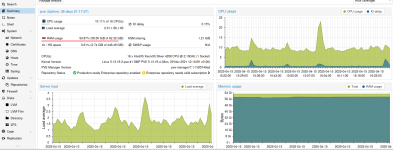

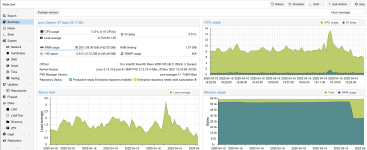

The PVE log from 01:01 to 02:00:

```
Apr 15 01:01:46 pve pvedaemon[3772978]: <root@pam> successful auth for user 'root@pam'
Apr 15 01:14:08 pve smartd[2963]: Device: /dev/sdc [SAT], SMART Prefailure Attribute: 177 Wear_Leveling_Count changed from 54 to 53
Apr 15 01:14:08 pve smartd[2963]: Device: /dev/sdc [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 71 to 65
Apr 15 01:14:08 pve smartd[2963]: Device: /dev/sdd [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 71 to 65
Apr 15 01:14:17 pve pmxcfs[3703]: [dcdb] notice: data verification successful
Apr 15 01:16:46 pve pvedaemon[3005937]: <root@pam> successful auth for user 'root@pam'
Apr 15 01:17:01 pve CRON[144972]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)
Apr 15 01:17:01 pve CRON[144973]: (root) CMD ( cd / && run-parts --report /etc/cron.hourly)
Apr 15 01:17:01 pve CRON[144972]: pam_unix(cron:session): session closed for user root
Apr 15 01:31:46 pve pvedaemon[2980551]: <root@pam> successful auth for user 'root@pam'
Apr 15 01:44:09 pve smartd[2963]: Device: /dev/sdc [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 65 to 68
Apr 15 01:44:09 pve smartd[2963]: Device: /dev/sdd [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 65 to 68
Apr 15 01:45:05 pve kernel: pvestatd invoked oom-killer: gfp_mask=0x400dc0(GFP_KERNEL_ACCOUNT|__GFP_ZERO), order=1, oom_score_adj=0
Apr 15 01:45:05 pve kernel: CPU: 11 PID: 288749 Comm: pvestatd Tainted: P IO 5.13.19-2-pve #1
Apr 15 01:45:05 pve kernel: Hardware name: Dell Inc. PowerEdge R440/04JN2K, BIOS 2.9.3 09/23/2020
Apr 15 01:45:05 pve kernel: Call Trace:
Apr 15 01:45:05 pve kernel: dump_stack+0x7d/0x9c
Apr 15 01:45:05 pve kernel: dump_header+0x4f/0x1f6
Apr 15 01:45:05 pve kernel: oom_kill_process.cold+0xb/0x10
Apr 15 01:45:05 pve kernel: out_of_memory+0x1cf/0x530
Apr 15 01:45:05 pve kernel: __alloc_pages_slowpath.constprop.0+0xc96/0xd80
Apr 15 01:45:05 pve kernel: __alloc_pages+0x30e/0x330
Apr 15 01:45:05 pve kernel: alloc_pages+0x87/0x110
Apr 15 01:45:05 pve kernel: __get_free_pages+0x11/0x40
Apr 15 01:45:05 pve kernel: pgd_alloc+0x37/0x230
Apr 15 01:45:05 pve kernel: mm_init+0x1dd/0x300
Apr 15 01:45:05 pve kernel: mm_alloc+0x4e/0x60
Apr 15 01:45:05 pve kernel: alloc_bprm+0x8a/0x2f0
Apr 15 01:45:05 pve kernel: do_execveat_common+0x96/0x1c0
Apr 15 01:45:05 pve kernel: __x64_sys_execve+0x39/0x50
Apr 15 01:45:05 pve kernel: do_syscall_64+0x61/0xb0
Apr 15 01:45:05 pve kernel: ? exit_to_user_mode_prepare+0x37/0x1b0
Apr 15 01:45:05 pve kernel: ? irqentry_exit_to_user_mode+0x9/0x20
Apr 15 01:45:05 pve kernel: ? irqentry_exit+0x19/0x30
Apr 15 01:45:05 pve kernel: ? exc_page_fault+0x8f/0x170
Apr 15 01:45:05 pve kernel: ? asm_exc_page_fault+0x8/0x30
Apr 15 01:45:05 pve kernel: entry_SYSCALL_64_after_hwframe+0x44/0xae
Apr 15 01:45:05 pve kernel: RIP: 0033:0x7f5b75e63ca7
Apr 15 01:45:05 pve kernel: Code: ff ff 76 e7 f7 d8 64 41 89 00 eb df 0f 1f 80 00 00 00 00 f7 d8 64 41 89 00 eb dc 0f 1f 84 00 00 00 00 00 b8 3b 00 00 00 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d b9 61 10 00 f7 d8 64 89 01 48
Apr 15 01:45:05 pve kernel: RSP: 002b:00007ffe83e3aae8 EFLAGS: 00000202 ORIG_RAX: 000000000000003b
Apr 15 01:45:05 pve kernel: RAX: ffffffffffffffda RBX: 0000564ba14d1db0 RCX: 00007f5b75e63ca7
Apr 15 01:45:05 pve kernel: RDX: 0000564ba14ce170 RSI: 0000564ba12e1f10 RDI: 0000564ba14d1db0
Apr 15 01:45:05 pve kernel: RBP: 00007ffe83e3ab50 R08: 00007ffe83e3ab80 R09: 0000000000000000
Apr 15 01:45:05 pve kernel: R10: fffffffffffff7e6 R11: 0000000000000202 R12: 0000564ba12e1f10
Apr 15 01:45:05 pve kernel: R13: 0000564ba14ce170 R14: 0000000000000001 R15: 0000564ba12e1f38
Apr 15 01:45:05 pve kernel: Mem-Info:
Apr 15 01:45:05 pve kernel: active_anon:5458842 inactive_anon:1087506 isolated_anon:0 active_file:454449 inactive_file:528116 isolated_file:0 unevictable:39067 dirty:30 writeback:5 slab_reclaimable:180371 slab_unreclaimable:1192279 mapped:27260 shmem:17998 pagetables:21632 bounce:0 free:153152 free_pcp:171 free_cma:0
Apr 15 01:45:05 pve kernel: Node 0 active_anon:21835368kB inactive_anon:4350024kB active_file:1817796kB inactive_file:2112464kB unevictable:156268kB isolated(anon):0kB isolated(file):0kB mapped:109040kB dirty:120kB writeback:20kB shmem:71992kB shmem_thp: 0kB shmem_pmdmapped: 0kB anon_thp: 26624kB writeback_tmp:0kB kernel_stack:8744kB pagetables:86528kB all_unreclaimable? no
Apr 15 01:45:05 pve kernel: Node 0 DMA free:11264kB min:12kB low:24kB high:36kB reserved_highatomic:0KB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB writepending:0kB present:15980kB managed:15360kB mlocked:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB
Apr 15 01:45:05 pve kernel: lowmem_reserve[]: 0 1409 63736 63736 63736
Apr 15 01:45:05 pve kernel: Node 0 DMA32 free:252016kB min:1492kB low:2932kB high:4372kB reserved_highatomic:2048KB active_anon:525064kB inactive_anon:161600kB active_file:568kB inactive_file:84kB unevictable:0kB writepending:0kB present:1566652kB managed:1501116kB mlocked:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB
Apr 15 01:45:05 pve kernel: lowmem_reserve[]: 0 0 62327 62327 62327
Apr 15 01:45:05 pve kernel: Node 0 Normal free:349328kB min:66072kB low:129892kB high:193712kB reserved_highatomic:2048KB active_anon:21310304kB inactive_anon:4188928kB active_file:1817228kB inactive_file:2112160kB unevictable:156268kB writepending:140kB present:65011712kB managed:63830404kB mlocked:153196kB bounce:0kB free_pcp:684kB local_pcp:0kB free_cma:0kB
Apr 15 01:45:05 pve kernel: lowmem_reserve[]: 0 0 0 0 0
Apr 15 01:45:05 pve kernel: Node 0 DMA: 0*4kB 0*8kB 0*16kB 0*32kB 0*64kB 0*128kB 0*256kB 0*512kB 1*1024kB (U) 1*2048kB (M) 2*4096kB (M) = 11264kB
Apr 15 01:45:05 pve kernel: Node 0 DMA32: 2260*4kB (UME) 1842*8kB (UME) 2799*16kB (UMEH) 193*32kB (MEH) 250*64kB (UMEH) 818*128kB (UME) 217*256kB (UM) 2*512kB (ME) 0*1024kB 0*2048kB 0*4096kB = 252016kB
Apr 15 01:45:05 pve kernel: Node 0 Normal: 87495*4kB (UMEH) 14*8kB (H) 11*16kB (H) 1*32kB (H) 5*64kB (H) 3*128kB (H) 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 351004kB
Apr 15 01:45:05 pve kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=1048576kB
Apr 15 01:45:05 pve kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB
Apr 15 01:45:05 pve kernel: 1004897 total pagecache pages
Apr 15 01:45:05 pve kernel: 0 pages in swap cache
Apr 15 01:45:05 pve kernel: Swap cache stats: add 0, delete 0, find 0/0
Apr 15 01:45:05 pve kernel: Free swap = 0kB
Apr 15 01:45:05 pve kernel: Total swap = 0kB
Apr 15 01:45:05 pve kernel: 16648586 pages RAM
Apr 15 01:45:05 pve kernel: 0 pages HighMem/MovableOnly
Apr 15 01:45:05 pve kernel: 311866 pages reserved
Apr 15 01:45:05 pve kernel: 0 pages hwpoisoned
Apr 15 01:45:05 pve kernel: Tasks state (memory values in pages):
Apr 15 01:45:05 pve kernel: [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name
Apr 15 01:45:05 pve kernel: [ 990] 0 990 53666 1912 438272 0 -250 systemd-journal
Apr 15 01:45:05 pve kernel: [ 1025] 0 1025 6054 1289 65536 0 -1000 systemd-udevd
Apr 15 01:45:05 pve kernel: [ 2950] 103 2950 1975 485 57344 0 0 rpcbind
Apr 15 01:45:05 pve kernel: [ 2953] 102 2953 2061 685 57344 0 -900 dbus-daemon
Apr 15 01:45:05 pve kernel: [ 2956] 0 2956 21359 303 53248 0 0 lxcfs
Apr 15 01:45:05 pve kernel: [ 2957] 0 2957 272383 474 188416 0 0 pve-lxc-syscall
Apr 15 01:45:05 pve kernel: [ 2959] 0 2959 55199 933 81920 0 0 rsyslogd
Apr 15 01:45:05 pve kernel: [ 2963] 0 2963 2972 896 65536 0 0 smartd
Apr 15 01:45:05 pve kernel: [ 2964] 0 2964 3465 922 61440 0 0 systemd-logind
Apr 15 01:45:05 pve kernel: [ 2965] 0 2965 558 180 36864 0 -1000 watchdog-mux
Apr 15 01:45:05 pve kernel: [ 2967] 0 2967 59442 789 86016 0 0 zed
Apr 15 01:45:05 pve kernel: [ 2968] 0 2968 1757 453 53248 0 0 ksmtuned
Apr 15 01:45:05 pve kernel: [ 2994] 0 2994 1068 370 49152 0 0 qmeventd
Apr 15 01:45:05 pve kernel: [ 3387] 0 3387 1152 270 49152 0 0 lxc-monitord
Apr 15 01:45:05 pve kernel: [ 3437] 0 3437 2887 131 65536 0 0 iscsid
Apr 15 01:45:05 pve kernel: [ 3438] 0 3438 3013 2968 69632 0 -17 iscsid
Apr 15 01:45:05 pve kernel: [ 3440] 0 3440 3338 1020 69632 0 -1000 sshd
Apr 15 01:45:05 pve kernel: [ 3470] 101 3470 4761 685 61440 0 0 chronyd
Apr 15 01:45:05 pve kernel: [ 3478] 101 3478 2713 487 61440 0 0 chronyd
Apr 15 01:45:05 pve kernel: [ 3482] 0 3482 1461 398 45056 0 0 agetty
Apr 15 01:45:05 pve kernel: [ 3663] 0 3663 216551 894 192512 0 0 rrdcached
Apr 15 01:45:05 pve kernel: [ 3703] 0 3703 175930 16500 471040 0 0 pmxcfs
Apr 15 01:45:05 pve kernel: [ 3735] 0 3735 10013 642 69632 0 0 master
Apr 15 01:45:05 pve kernel: [ 3748] 0 3748 139886 41550 401408 0 0 corosync
Apr 15 01:45:05 pve kernel: [ 3749] 0 3749 1686 569 53248 0 0 cron
Apr 15 01:45:05 pve kernel: [ 3989] 0 3989 69916 21808 303104 0 0 pve-firewall
Apr 15 01:45:05 pve kernel: [ 3992] 0 3992 70720 23533 323584 0 0 pvestatd
Apr 15 01:45:05 pve kernel: [ 3994] 0 3994 591 140 40960 0 0 bpfilter_umh
Apr 15 01:45:05 pve kernel: [ 4017] 0 4017 83213 24359 356352 0 0 pvescheduler
Apr 15 01:45:05 pve kernel: [ 4027] 0 4027 88341 30162 409600 0 0 pvedaemon
Apr 15 01:45:05 pve kernel: [ 4224] 0 4224 84599 24541 360448 0 0 pve-ha-crm
Apr 15 01:45:05 pve kernel: [ 4245] 0 4245 5725358 2378456 43675648 0 0 kvm
Apr 15 01:45:05 pve kernel: [ 4254] 33 4254 88682 35075 430080 0 0 pveproxy
Apr 15 01:45:05 pve kernel: [ 4747] 33 4747 20895 15121 204800 0 0 spiceproxy
Apr 15 01:45:05 pve kernel: [ 4783] 0 4783 84531 24458 368640 0 0 pve-ha-lrm
Apr 15 01:45:05 pve kernel: [2803220] 106 2803220 10159 827 81920 0 0 qmgr
Apr 15 01:45:05 pve kernel: [2980551] 0 2980551 90485 33023 430080 0 0 pvedaemon worke
Apr 15 01:45:05 pve kernel: [3005937] 0 3005937 90890 33458 430080 0 0 pvedaemon worke
Apr 15 01:45:05 pve kernel: [3772978] 0 3772978 90895 33306 430080 0 0 pvedaemon worke
Apr 15 01:45:05 pve kernel: [3600791] 0 3600791 4805203 4237594 35463168 0 0 kvm
Apr 15 01:45:05 pve kernel: [3460416] 33 3460416 20959 13259 192512 0 0 spiceproxy work
Apr 15 01:45:05 pve kernel: [3460419] 0 3460419 20052 451 57344 0 0 pvefw-logger
Apr 15 01:45:05 pve kernel: [3460574] 33 3460574 90845 33130 430080 0 0 pveproxy worker
Apr 15 01:45:05 pve kernel: [3460575] 33 3460575 90853 33143 430080 0 0 pveproxy worker
Apr 15 01:45:05 pve kernel: [3460580] 33 3460580 90850 33115 430080 0 0 pveproxy worker
Apr 15 01:45:05 pve kernel: [ 140271] 106 140271 10080 1271 77824 0 0 pickup
Apr 15 01:45:05 pve kernel: [ 257875] 0 257875 1341 126 49152 0 0 sleep
Apr 15 01:45:05 pve kernel: [ 288749] 0 288749 70720 21883 315392 0 0 pvestatd
Apr 15 01:45:05 pve kernel: oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=pvestatd.service,mems_allowed=0,global_oom,task_memcg=/qemu.slice/102.scope,task=kvm,pid=3600791,uid=0
Apr 15 01:45:05 pve kernel: Out of memory: Killed process 3600791 (kvm) total-vm:19220812kB, anon-rss:16940572kB, file-rss:9800kB, shmem-rss:4kB, UID:0 pgtables:34632kB oom_score_adj:0
Apr 15 01:45:05 pve systemd[1]: 102.scope: A process of this unit has been killed by the OOM killer.
Apr 15 01:45:07 pve kernel: oom_reaper: reaped process 3600791 (kvm), now anon-rss:0kB, file-rss:52kB, shmem-rss:4kB
Apr 15 01:45:08 pve kernel: zd0: p1 p2
Apr 15 01:45:08 pve kernel: vmbr1: port 3(tap102i0) entered disabled state
Apr 15 01:45:08 pve kernel: vmbr1: port 3(tap102i0) entered disabled state
Apr 15 01:45:09 pve systemd[1]: 102.scope: Succeeded.
Apr 15 01:45:09 pve systemd[1]: 102.scope: Consumed 11h 32min 42.725s CPU time.
Apr 15 01:45:11 pve qmeventd[289023]: Starting cleanup for 102
Apr 15 01:45:11 pve qmeventd[289023]: Finished cleanup for 102
Apr 15 01:46:46 pve pvedaemon[3005937]: <root@pam> successful auth for user 'root@pam'
Apr 15 02:00:00 pve pvescheduler[328917]: <root@pam> starting task UPID:pve:000504D6:12416E38:67FDA180:vzdump::root@pam:
```
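A note for anyone reading the OOM report: the `Tasks state` table gives `rss` in pages, not kB. The following is a minimal Python sketch, assuming 4 KiB pages (the usual x86_64 size), that picks the task rows out of a saved journal excerpt and lists the processes with the largest resident memory. `top_rss` and `PAGE_KIB` are hypothetical names for illustration, and the sample rows are copied from the log.

```python
import re

PAGE_KIB = 4  # assumption: 4 KiB pages (x86_64 default)

# One row of the OOM killer's "Tasks state" table:
# [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name
ROW = re.compile(
    r"\[\s*(\d+)\]\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(-?\d+)\s+(.+)$"
)


def top_rss(lines, n=3):
    """Return (name, pid, rss_mib) for the n largest tasks by resident memory."""
    rows = []
    for line in lines:
        m = ROW.search(line)
        if m:  # header and ordinary syslog lines do not match the pattern
            pid, rss_pages, name = int(m.group(1)), int(m.group(5)), m.group(9).strip()
            rows.append((name, pid, rss_pages * PAGE_KIB / 1024))
    rows.sort(key=lambda r: r[2], reverse=True)
    return rows[:n]


sample = [
    "Apr 15 01:45:05 pve kernel: [ 4245] 0 4245 5725358 2378456 43675648 0 0 kvm",
    "Apr 15 01:45:05 pve kernel: [3600791] 0 3600791 4805203 4237594 35463168 0 0 kvm",
    "Apr 15 01:45:05 pve kernel: [ 3748] 0 3748 139886 41550 401408 0 0 corosync",
]

for name, pid, mib in top_rss(sample):
    print(f"{name:<10} pid={pid:<8} rss={mib:,.0f} MiB")
```

With 4 KiB pages, the 4237594-page `rss` of kvm PID 3600791 works out to 16950376 kB, which matches anon-rss + file-rss + shmem-rss (16940572 + 9800 + 4 kB) in the "Killed process" line.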