Hi!

We have a small 3-node Proxmox cluster in our lab, and I am currently testing virtualized firewalls.

There is some very strange behaviour with virtual machines doing routing. This is going to be a somewhat lengthy post...

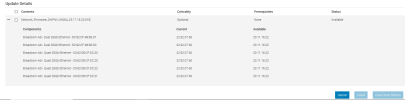

The hardware is brand new, so I don't think raw performance is the issue. Every node is:

- Dell PowerEdge R760xs with Intel Xeon Gold 6526Y

- 512 GB memory and 4 x 3.2 TB NVMe SSD

- Dual BCM57414 NetXtreme-E 10Gb/25Gb

- Quad BCM57504 NetXtreme-E 10Gb/25Gb

There is a Ceph datastore using mesh networking (without a switch) between the nodes. The dual BCM57414 NICs are used for it at 25GbE, and it is working as expected.

VM traffic is currently handled by bridging a single port of the quad BCM57504 NIC to vmbr0. Nothing unusual here, no LACP or bonding etc...

For testing I have 3 Debian VMs. One is acting as a router:

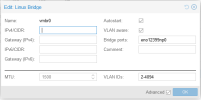

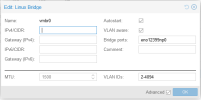

The router VM has 2 virtual NICs.

Then there are two other VMs, one in VLAN 29 and the other in VLAN 602.

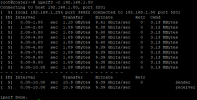

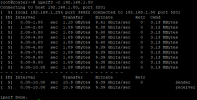

iPerf3 from VM in VLAN602 to router:

iPerf3 from router to VM in VLAN29:

All good....?

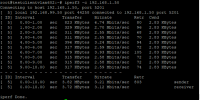

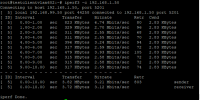

And then from one VM (VLAN 602) to the other VM (VLAN 29) through the VM acting as router:

It's not Gbits, it's Mbits... and a lot of retransmissions.
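To compare the three runs without reading screenshots, the bitrate and retransmit count can be pulled out of the iPerf3 summary. This is a minimal sketch, assuming the default human-readable output of a TCP iPerf3 client on Linux, where the sender summary line ends in `<bitrate> <unit> <Retr> sender`. The function name `iperf3_sender_summary` and the `ROUTER_IP` variable are placeholders for illustration.

```shell
#!/bin/sh
# Print "<bitrate> <unit> <retransmits>" from the sender summary line
# of a TCP iperf3 client run, read from stdin.
# Assumption: default text output, not --json and not UDP.
iperf3_sender_summary() {
    # The sender summary is the line whose last field is "sender";
    # the three fields before it are bitrate, unit and Retr.
    awk '$NF == "sender" { print $(NF-3), $(NF-2), $(NF-1) }'
}

# Usage (ROUTER_IP is a hypothetical variable holding the target address):
#   iperf3 -c "$ROUTER_IP" -t 10 | iperf3_sender_summary
```

Running this for the direct tests and for the routed test gives one line per run, which makes the Gbits versus Mbits difference and the retransmit counts easy to put side by side.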

In this particular test the client VMs are on node PX02 and the router VM is on node PX01. I have run numerous tests, and the conclusion is clear:

When traffic physically enters the Broadcom quad NIC (i.e. RX) AND a VM is routing it, throughput is over 1000 times lower than it should be.

Outbound (TX) speed is good, even from the VM doing the routing.
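Since the slowdown only appears on received traffic that a VM then forwards, one thing that may be worth checking is which receive aggregation offloads are enabled on the bridged port. This is only a sketch of such a check, not a confirmed cause. The helper name `rx_offloads_on` and the `IFACE` placeholder are made up for illustration, and it assumes the usual `name: on|off` layout of `ethtool -k` output.

```shell
#!/bin/sh
# List the receive-side aggregation offloads that are currently "on",
# given the output of `ethtool -k <interface>` on stdin.
rx_offloads_on() {
    awk -F': *' '
        /^[ \t]*(generic-receive-offload|large-receive-offload|rx-gro-hw):/ {
            name = $1
            sub(/^[ \t]+/, "", name)   # strip leading indentation
            split($2, state, " ")      # "on", "off", "off [fixed]", ...
            if (state[1] == "on") print name
        }'
}

# Usage on a Proxmox node (IFACE is a placeholder for the bridged port):
#   ethtool -k "$IFACE" | rx_offloads_on
```

If a feature shows up here, it could be switched off temporarily, for example with `ethtool -K "$IFACE" rx-gro-hw off`, and the routed iPerf3 test repeated to see whether it makes any difference.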

I have tested with Palo Alto Virtual-VM, and it has the exact same problem. I also tested an OPNsense firewall, with exactly the same problem. There are no problems when simply testing connectivity and speed within the same VLAN, regardless of traffic direction. Migration traffic speed is also good, despite using ports on the same quad-port adapter.

All the Dell firmware and drivers are up to date, Proxmox is up to date.

I have already tried disabling auto-negotiation on both the switch and the NIC. I have tested two brands of switches, Ruckus/Broadcom and Extreme Networks. Exactly the same speeds.

I have changed cables and tried three different brands... We use DAC cables, but I also tested some fibre optic SFP modules, and it's all the same.

We have some spare NICs, and I made some tests:

- Using 1 Gb copper. All is good, routing or not, both directions. No problems.

- Using an older NetXtreme II BCM57810 10 Gb SFP+ adapter. Speed is good, but there are a lot of retransmits.

I am out of ideas. What's next? Support ticket to Proxmox, perhaps?

For reference, the router VM has IP forwarding enabled:

Bash:

    root@router:~# cat /proc/sys/net/ipv4/ip_forward
    1

and its 2 virtual NICs are configured as follows:

Code:

    net0: virtio=BC:24:11:BF:A2:61,bridge=vmbr0,tag=602
    net1: virtio=BC:24:11:0A:E8:A8,bridge=vmbr0,tag=29
