Hi all,

I'm having trouble understanding how this setup should be done:

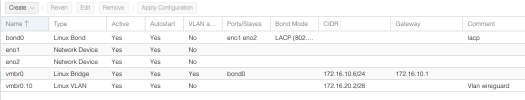

eno1 + eno2 -> bond0 (lacp) -> bridge0

vlan1 : 172.16.10.0/24

vlan10 : 172.16.20.0/29

Now I need this bridge to be able to talk to both vlan10 clients and non-VLAN (or vlan1) clients. Two containers each need one virtual NIC connected to the LAN and one virtual NIC connected to the vlan10 network.

I attached both VLAN interfaces to bond0, but there is no traffic on vlan10. This is my current network configuration:

    auto lo
    iface lo inet loopback

    auto eno1
    iface eno1 inet manual

    auto eno2
    iface eno2 inet manual

    auto bond0
    iface bond0 inet manual
        bond-slaves eno1 eno2
        bond-miimon 100
        bond-mode 802.3ad
    #lacp

    auto vmbr0
    iface vmbr0 inet static
        address 172.16.10.6/24
        gateway 172.16.10.1
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
    #bridge

    auto vlan10
    iface vlan10 inet manual
        vlan-raw-device bond0
    #wireshark

    auto vlan1
    iface vlan1 inet manual
        vlan-raw-device bond0

Problem: every container in vlan1 works as expected; containers in vlan10 do not.
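My unverified guess is that the vlan10 interface defined directly on bond0 picks up the tagged frames before they ever reach the vlan-aware bridge. The variant below is only a sketch of what I think the alternative would look like: it drops both VLAN stanzas from bond0 and relies on the bridge alone. It assumes the container's second virtual NIC can be attached to vmbr0 with VLAN tag 10, which I have not tested.

```
auto bond0
iface bond0 inet manual
    bond-slaves eno1 eno2
    bond-miimon 100
    bond-mode 802.3ad

auto vmbr0
iface vmbr0 inet static
    address 172.16.10.6/24
    gateway 172.16.10.1
    bridge-ports bond0
    bridge-stp off
    bridge-fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094

# No vlan10 / vlan1 stanzas on bond0 in this sketch.
# Assumption: the vlan10 NIC of each container is attached to vmbr0
# with VLAN tag 10, and the LAN NIC is attached to vmbr0 untagged.
```

This also assumes the switch ports behind the LACP bond carry vlan10 tagged and the LAN untagged.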

Any suggestions?

Thank you
