Hi Proxmox community,

I am still new and not quite sure why this is not working. I have spent what feels like 2 hours researching ZFS online. I shut down my two test nodes for the weekend, and afterwards the VM mounts had disappeared. I remounted the drives, though it felt odd that a few had unmounted. Now most VMs are back up, except for the ones I'm testing on the ZFS pool. The drives in the ZFS pool are online and the mountpoints show up in the shell, but the VMs in /ZFS01/VMs are gone, and in Proxmox that directory is gone too. zfs list shows ZFS01/VMs/vm-101-disk-0 and the other VM disks with no mountpoint, while ZFS01/VMs itself points to the mountpoint /ZFS01/VMs. When I re-add /ZFS01/VMs as a directory in Proxmox, it doesn't find the VMs because they are just not in that directory.

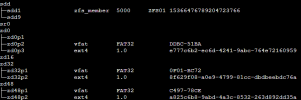

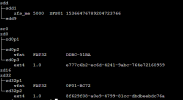

If I run lsblk -f I can see the ZFS devices. zd0p3 is probably the first missing disk and zd32p2 the second, as I set up the disks as EXT4.

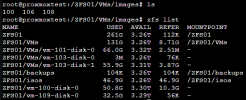

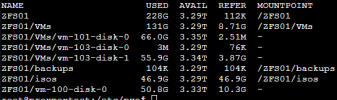

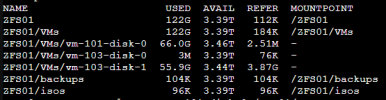

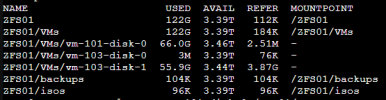

When I run zfs list, it shows the disks are there but have no mountpoint. ZFS01/VMs itself, however, does have its mountpoint.

The challenge is I'm not quite sure how to mount these VMs.
