Hi,

I have set up a Windows Server 2022 VM in which I am getting very slow NVMe speeds.

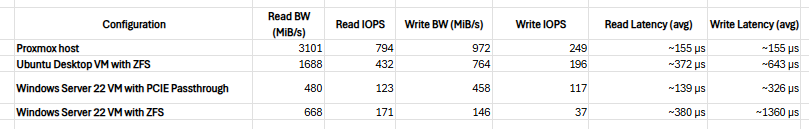

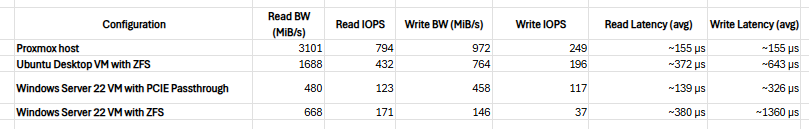

As shown below, I have tested one of my NVMe drives directly on Proxmox, in an Ubuntu Desktop VM with ZFS, in the Windows VM using PCIe passthrough, and in the Windows VM using a virtual ZFS disk from Proxmox.

There seems to be some sort of bottleneck that significantly slows down disk performance in the Windows VM. It happens both with PCIe passthrough (I passed the controller through via VFIO) and with a virtual disk.

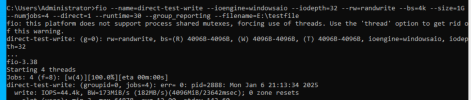

The benchmarks were run with fio. The tested NVMe drive is a Kingston NV2 1 TB. I have also tested a Samsung 990 PRO, with similarly poor results in the Windows VM.
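To keep runs comparable between the Proxmox host, the Ubuntu VM and the Windows VM, a small Python sketch like the one below could build the same fio job on each platform and pull bandwidth and IOPS out of fio's JSON output. The job parameters (block size, queue depth, file size, runtime, job name) are illustrative assumptions, not necessarily the exact settings behind the screenshots. The only platform difference assumed here is the I/O engine: `libaio` on Linux and `windowsaio` on Windows. Point `target` at a test file rather than a raw device if you run write tests, since writing to a raw device destroys its data.

```python
import json
import subprocess


def build_fio_cmd(target: str, rw: str = "read", bs: str = "1M",
                  iodepth: int = 32, size: str = "4G",
                  runtime: int = 30, windows: bool = False) -> list[str]:
    """Build one fio command line. Defaults are assumed example values."""
    # fio needs a different asynchronous I/O engine on each OS.
    engine = "windowsaio" if windows else "libaio"
    return [
        "fio",
        "--name=nvmetest",          # hypothetical job name
        f"--filename={target}",
        f"--rw={rw}",               # e.g. read, write, randread, randwrite
        f"--bs={bs}",
        f"--iodepth={iodepth}",
        f"--size={size}",
        f"--runtime={runtime}",
        "--time_based",
        f"--ioengine={engine}",
        "--direct=1",               # bypass the OS page/file cache
        "--output-format=json",
    ]


def summarize(fio_json: str) -> dict[str, float]:
    """Extract bandwidth (MiB/s) and IOPS of the first job from fio JSON."""
    job = json.loads(fio_json)["jobs"][0]
    out: dict[str, float] = {}
    for direction in ("read", "write"):
        stats = job[direction]
        # fio reports "bw" in KiB/s; convert to MiB/s.
        out[f"{direction}_mib_s"] = stats["bw"] / 1024
        out[f"{direction}_iops"] = float(stats["iops"])
    return out


def run_fio(cmd: list[str]) -> dict[str, float]:
    """Run fio (must be on PATH) and summarize its JSON result."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return summarize(result.stdout)
```

For example, `run_fio(build_fio_cmd("fio-test.dat", rw="randread", bs="4k", iodepth=1, windows=True))` inside the Windows VM and the same call with `windows=False` on the host should give directly comparable 4k random-read figures.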

Do you guys have any idea what could be causing this, or how I could identify the issue?