Hello!

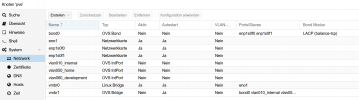

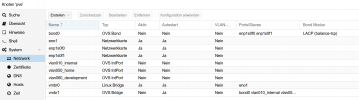

After a server/host reboot, the OVS bond, bridge and ports are in a disabled state and can't be enabled.

Here is some info:


```
root@pve:~# apt install openvswitch-switch
Reading package lists... Done
Building dependency tree... Done
Reading state information... Done
openvswitch-switch is already the newest version (2.15.0+ds1-2).
The following packages were automatically installed and are no longer required:
  bsdmainutils libatomic1 libdouble-conversion1 libllvm7
Use 'apt autoremove' to remove them.
0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.
root@pve:~# dpkg -l openvswitch-*
Desired=Unknown/Install/Remove/Purge/Hold
| Status=Not/Inst/Conf-files/Unpacked/halF-conf/Half-inst/trig-aWait/Trig-pend
|/ Err?=(none)/Reinst-required (Status,Err: uppercase=bad)
||/ Name                       Version      Architecture Description
+++-==========================-============-============-===================================
ii  openvswitch-common         2.15.0+ds1-2 amd64        Open vSwitch common components
ii  openvswitch-switch         2.15.0+ds1-2 amd64        Open vSwitch switch implementations
un  openvswitch-test           <none>       <none>       (no description available)
un  openvswitch-testcontroller <none>       <none>       (no description available)
un  openvswitch-vtep           <none>       <none>       (no description available)
root@pve:~#
```

```
root@pve:/etc/network# more interfaces
# network interface settings; autogenerated
# Please do NOT modify this file directly, unless you know what
# you're doing.
#
# If you want to manage parts of the network configuration manually,
# please utilize the 'source' or 'source-directory' directives to do
# so.
# PVE will preserve these directives, but will NOT read its network
# configuration from sourced files, so do not attempt to move any of
# the PVE managed interfaces into external files!

auto lo
iface lo inet loopback

auto eno1
iface eno1 inet manual

auto enp1s0f0
iface enp1s0f0 inet manual

auto enp1s0f1
iface enp1s0f1 inet manual

allow-vmbr1 vlan010_internal
iface vlan010_internal inet static
        address 192.168.10.0/24
        ovs_type OVSIntPort
        ovs_bridge vmbr1
        ovs_options tag=10

allow-vmbr1 vlan050_home
iface vlan050_home inet static
        address 192.168.50.0/24
        ovs_type OVSIntPort
        ovs_bridge vmbr1
        ovs_options tag=50

allow-vmbr1 vlan060_development
iface vlan060_development inet static
        address 192.168.60.0/28
        ovs_type OVSIntPort
        ovs_bridge vmbr1
        ovs_options tag=60

allow-vmbr1 bond0
iface bond0 inet manual
        ovs_bonds enp1s0f0 enp1s0f1
        ovs_type OVSBond
        ovs_bridge vmbr1
        ovs_options lacp=active bond_mode=balance-tcp other_config:lacp-time=fast

auto vmbr0
iface vmbr0 inet static
        address 192.168.0.50/24
        gateway 192.168.0.1
        bridge-ports eno1
        bridge-stp off
        bridge-fd 0

allow-ovs vmbr1
iface vmbr1 inet manual
        ovs_type OVSBridge
        ovs_ports bond0 vlan010_internal vlan050_home vlan060_development
root@pve:/etc/network#
```

Can anyone help?

Many thanks in advance!
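For anyone reading the config above: the OVS stanzas are activated with `allow-vmbr1` / `allow-ovs` lines, while the working Linux bridge `vmbr0` uses `auto`. A small hypothetical helper (`ovs_summary` is an illustrative name, not part of Proxmox or Open vSwitch) can list each OVS interface with its `ovs_type`, `ovs_bridge` and activation keyword. It is a minimal awk sketch that assumes the usual ifupdown layout of one option per line under each `iface` stanza:

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox or Open vSwitch): summarise the
# OVS stanzas of an ifupdown-style interfaces file.
# Output columns: interface, ovs_type, ovs_bridge ("-" if none), and the
# keyword that activates it ("auto", "allow-<name>" or "none").
ovs_summary() {
  awk '
    # "auto X Y" or "allow-foo X Y": record the activation keyword per interface
    $1 == "auto" || $1 ~ /^allow-/ {
      for (i = 2; i <= NF; i++) act[$i] = $1
      next
    }
    # start of a new stanza; remember file order for the report
    $1 == "iface" { name = $2; order[++n] = name; next }
    # OVS options belong to the most recent iface stanza
    $1 == "ovs_type"   { type[name] = $2 }
    $1 == "ovs_bridge" { bridge[name] = $2 }
    END {
      for (i = 1; i <= n; i++) {
        k = order[i]
        if (!(k in type)) continue   # skip non-OVS interfaces such as vmbr0
        b = (k in bridge) ? bridge[k] : "-"
        a = (k in act) ? act[k] : "none"
        print k, type[k], b, a
      }
    }
  ' "${1:-/etc/network/interfaces}"
}
```

Run as `ovs_summary /etc/network/interfaces`; against the file posted above it should print:

```
vlan010_internal OVSIntPort vmbr1 allow-vmbr1
vlan050_home OVSIntPort vmbr1 allow-vmbr1
vlan060_development OVSIntPort vmbr1 allow-vmbr1
bond0 OVSBond vmbr1 allow-vmbr1
vmbr1 OVSBridge - allow-ovs
```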