Nah. That `sda1` partition is shown in the output of your `fdisk -l` command above. In your case it's just a tiny thing (~1 MB) used by the system for holding boot info.

The reason the `ProxOS` storage location shows the question mark is that it's apparently using an LVM volume group called "ProxOS" that doesn't exist on the server. Well, that's my assumption from reading the `vgs` output, as it doesn't list that volume group at all.

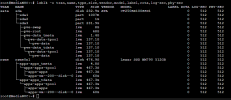
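If you want to double-check that from the command line, here's a minimal sketch. It assumes the LVM tools (`vgs`) are installed and that you run it as root, and it assumes the volume group you're looking for is literally named `ProxOS` (taken from the storage name). The `vg_exists` function is just a hypothetical helper for this example, not part of LVM.

```shell
#!/bin/sh
# Sketch: report whether an LVM volume group with a given name exists.
# Assumption: the group backing the storage is called "ProxOS".
VG_NAME="ProxOS"

# Hypothetical helper: reads `vgs --noheadings -o vg_name` output on stdin
# and succeeds only if one of the listed names is exactly "$1".
vg_exists() {
  awk '{print $1}' | grep -qx -- "$1"
}

if command -v vgs >/dev/null 2>&1; then
  # vgs needs root to read LVM metadata; error messages are discarded here.
  if vgs --noheadings -o vg_name 2>/dev/null | vg_exists "$VG_NAME"; then
    echo "Volume group '$VG_NAME' exists"
  else
    echo "Volume group '$VG_NAME' not found"
  fi
else
  echo "vgs not found; are the LVM tools installed?"
fi
```

If it reports "not found", that lines up with the `vgs` output above: whatever the storage entry points at isn't an existing volume group on this box.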

Please run `lsblk -o tran,name,type,size,vendor,model,label,rota,log-sec,phy-sec` and paste the output here. That'll give the complete list of physical storage devices attached to your system, with some useful reference info about them too.

Btw, please check the output and let us know if there's any storage attached to the system that isn't showing up in that list, as that would be a problem that needs investigating.