I had a 2-node Proxmox cluster in my homelab (which I have since learned is sub-optimal), and one of the nodes stopped being accessible through the web interface. When accessing the master node, I could see the listing for the other node, but it would just give me 500 errors when I tried to view the node summary or start any of the VMs (red indicator on the node, question marks on the VMs).

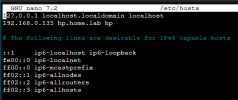

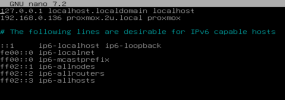

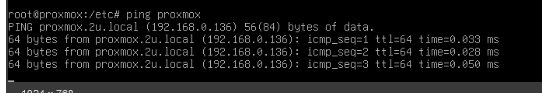

`hostname lookup 'proxmox' failed - failed to get address info for: proxmox: Name or service not known (500)`

Yes, that node was creatively named "proxmox".

This occurred after physically moving the node from where it had been sitting into a proper rack, and its network interface is now plugged into a new switch. I can't see why that would matter, since the network interface didn't change and the node still has its static IP as expected.
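
The 500 error above appears to come from a failed `getaddrinfo` lookup of the node's own hostname (glibc reports the `EAI_NONAME` failure as "Name or service not known"). A minimal sketch for reproducing that lookup from the IPMI console, assuming Python 3 is available on the node; the helper name `check_hostname` is hypothetical, and `192.168.0.136` is just the address used in this post. On a stock Proxmox VE install the hostname is normally expected to resolve via `/etc/hosts`, so that would be a likely place to look if this fails.

```python
import socket


def check_hostname(name, expected_ip=None):
    """Resolve `name` with getaddrinfo, like the failing lookup does.

    Returns (ok, message). If `expected_ip` is given, the check also
    fails when the name resolves but not to that address.
    """
    try:
        infos = socket.getaddrinfo(name, None)
    except socket.gaierror as exc:
        # Same failure class as "Name or service not known" in the error.
        return False, f"lookup for {name!r} failed: {exc}"

    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the address string for both IPv4 and IPv6.
    addresses = sorted({info[4][0] for info in infos})
    if expected_ip is not None and expected_ip not in addresses:
        return False, f"{name!r} resolves to {addresses}, not {expected_ip}"
    return True, f"{name!r} resolves to {addresses}"


if __name__ == "__main__":
    # Hypothetical usage on the node: check its own hostname against
    # the static IP it is supposed to have.
    ok, message = check_hostname(socket.gethostname(), "192.168.0.136")
    print("OK" if ok else "FAIL", message)
```

If this fails while `ip a` still shows the expected address, the problem is more likely name resolution than the network link itself.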

I tried several things in an attempt to solve the problem and otherwise simplify the troubleshooting.

- Confirmed the IP is correct and the network interface didn't change with `ip a` and `ip r`.
- Confirmed I am accessing the correct URL (with https and the correct port): `https://192.168.0.136:8006`
- Confirmed the host is correctly serving the page (running `curl -v https://192.168.0.136:8006` from the host and getting the HTML back).
- Split the malfunctioning host from the cluster by following the steps on this page (section 5.5.1 specifically): https://pve.proxmox.com/pve-docs/pve-admin-guide.html#_remove_a_cluster_node
  - As part of this step, fixed the master node to be standalone.
  - Set both nodes to only expect 1 vote.
- Checked the statuses of a few services (all up/active, no obvious errors), and restarted them at various points after trying small tweaks from other troubleshooting threads: `systemctl status pveproxy`, `systemctl status pve-cluster.service`, `systemctl status pvedaemon.service`
- Checked `journalctl` with some arguments I don't remember, looking for any possibly related errors (none noticed, but I am by no means well versed in journalctl logs).
- Edit: Forgot a step. I also checked the firewall rules, and they seemed to match the working master node perfectly.
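
Why a 2-node cluster is fragile, and why lowering the expected votes to 1 lets a lone node work again, follows from corosync's votequorum majority rule: a partition needs at least `expected_votes // 2 + 1` votes. A small sketch of that arithmetic, assuming the default of one vote per node and no QDevice; the helper names are hypothetical.

```python
def votes_needed(expected_votes: int) -> int:
    """Votes required for quorum: a strict majority of expected votes."""
    if expected_votes < 1:
        raise ValueError("expected_votes must be at least 1")
    return expected_votes // 2 + 1


def is_quorate(present_votes: int, expected_votes: int) -> bool:
    """True if the votes present meet the majority threshold."""
    return present_votes >= votes_needed(expected_votes)


if __name__ == "__main__":
    # Two nodes, one vote each: losing either node loses quorum.
    print(votes_needed(2), is_quorate(1, 2))  # 2 False
    # Expecting only 1 vote (e.g. via `pvecm expected 1`, presumably
    # what "expect 1 vote" above refers to) makes one node quorate.
    print(votes_needed(1), is_quorate(1, 1))  # 1 True
    # Three nodes tolerate the loss of one.
    print(votes_needed(3), is_quorate(2, 3))  # 2 True
```

This is also why a third node (or an external vote) is the usual recommendation over two nodes.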
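
The service and web UI checks in the list above can be scripted so they are easy to rerun from the IPMI console after each change. A minimal sketch, assuming Python 3 and `systemctl` on the node: it uses `systemctl is-active` (which prints one state per unit) and fetches the web UI without certificate verification, since the default Proxmox certificate is self-signed (similar to `curl -k`). The helper names are hypothetical.

```python
import ssl
import subprocess
import urllib.error
import urllib.request

SERVICES = ["pveproxy", "pvedaemon", "pve-cluster"]


def service_states(units):
    """Map each unit to the state printed by `systemctl is-active`."""
    states = {}
    for unit in units:
        try:
            result = subprocess.run(
                ["systemctl", "is-active", unit],
                capture_output=True, text=True, timeout=10,
            )
            states[unit] = result.stdout.strip() or "unknown"
        except (OSError, subprocess.TimeoutExpired):
            # systemctl missing, not runnable, or hung: report, don't crash.
            states[unit] = "unavailable"
    return states


def web_ui_status(url, timeout=5.0):
    """Return the HTTP status code for `url`, or an error string."""
    # Self-signed certificate: skip verification for this local check.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=ctx) as resp:
            return resp.status
    except urllib.error.HTTPError as exc:
        # Server answered with an error code, e.g. a 500.
        return exc.code
    except OSError as exc:
        # URLError, SSL errors and timeouts are all OSError subclasses.
        return f"error: {exc}"


if __name__ == "__main__":
    for unit, state in service_states(SERVICES).items():
        print(f"{unit}: {state}")
    print("web UI:", web_ui_status("https://192.168.0.136:8006"))
```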

At one point I was able to SSH into the stricken node, but right now I can't reach it through either the web console or SSH. I am using IPMI to access the console and attempt changes.

This is not a production node. It just has some templates and VMs I never backed up (laziness/lack of importance) that I would like to recover, but if I have somehow severely broken things, I can accept that and start fresh.

Any advice for a relative newb to Proxmox?
