I built a PVE cluster from three nodes. When the management gateway address became unreachable (the core switch rebooted), all three PVE nodes restarted automatically at almost the same time.

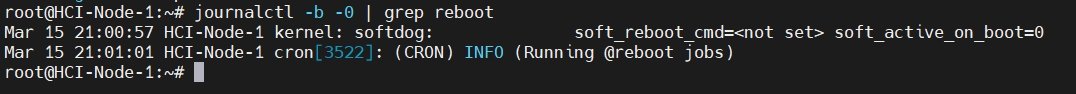

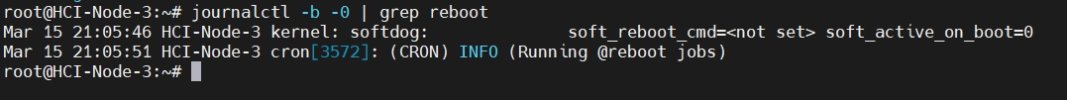

I queried the logs on each node with `journalctl -b -0 | grep reboot` and found these entries: “kernel: softdog: soft_reboot_cmd=<not set> soft_active_on_boot=0” and “cron[3572]: (CRON) INFO (Running @reboot jobs)”. Based on these logs, I suspect that the softdog kernel module triggered the restart. How can I prevent this from happening again? Screenshots of my network topology and the log query are attached.
