PVE remotes:

proxmox-ve: 8.4.0 (running kernel: 6.8.12-11-pve)

pve-manager: 8.4.1 (running version: 8.4.1/2a5fa54a8503f96d)

PDM:

Installed: 0.1.11

VM with two disks: 10 GB and 1 TB

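All three endpoint devices (both PVE remotes and the PDM host) are on the same subnet with no firewall in between, on a 10 Gbps network, with HDD RAID storage. This was the second attempt; the first attempt got stuck at the end of the first disk.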
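To judge whether the second disk is stalled or merely slow, the rate measured for the first disk can be extrapolated to the 1 TB disk. The inputs are taken from the first disk's dd summary in the source-node log (163840 records, 10737418240 bytes, 51.6712 s). This is a rough sketch that assumes "1 TB" means 10^12 bytes and that the second disk would sustain the same rate, neither of which the log confirms; all constant and function names are illustrative.

```python
# Rough transfer-time estimate for the 1 TB disk (illustrative; names are hypothetical).
# Inputs come from the first disk's dd summary in the source-node log:
#   "163840+0 records in" / "10737418240 bytes (11 GB, 10 GiB) copied, 51.6712 s, 208 MB/s"
# Assumptions: "1 TB" = 10**12 bytes, and the second disk sustains the same rate.

FIRST_DISK_BYTES = 10_737_418_240
FIRST_DISK_RECORDS = 163_840
FIRST_DISK_SECONDS = 51.6712
SECOND_DISK_BYTES = 10**12            # assumed size of the "1 TB" disk
LINK_BYTES_PER_S = 10 * 10**9 / 8     # 10 Gbps link, ignoring protocol overhead


def throughput_mb_s(n_bytes: int, seconds: float) -> float:
    """Decimal megabytes per second, the unit dd prints."""
    return n_bytes / seconds / 1e6


def eta_minutes(n_bytes: int, bytes_per_s: float) -> float:
    """Minutes needed to move n_bytes at a constant bytes_per_s."""
    return n_bytes / bytes_per_s / 60


block_size = FIRST_DISK_BYTES // FIRST_DISK_RECORDS      # bytes per dd record
observed_rate = FIRST_DISK_BYTES / FIRST_DISK_SECONDS    # bytes per second

print(f"dd block size: {block_size} bytes")
print(f"observed rate: {throughput_mb_s(FIRST_DISK_BYTES, FIRST_DISK_SECONDS):.0f} MB/s")
print(f"1 TB at observed rate: {eta_minutes(SECOND_DISK_BYTES, observed_rate):.0f} min")
print(f"1 TB at 10 Gbps line rate: {eta_minutes(SECOND_DISK_BYTES, LINK_BYTES_PER_S):.1f} min")
```

Under these assumptions the 1 TB copy should take roughly 80 minutes, while the 10 Gbps link alone would allow about 13 minutes, so for throughput the HDD RAID rather than the network appears to be the limiting factor. The records count also implies 64 KiB (65536-byte) dd blocks.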

From:

Code:

2025-07-23 12:08:58 remote: started tunnel worker 'UPID:pve-backup-2:002E85EE:16AA56A1:6880B4BA:qmtunnel:103:root@pam!pdm-admin:'

tunnel: -> sending command "version" to remote

tunnel: <- got reply

2025-07-23 12:09:03 local WS tunnel version: 2

2025-07-23 12:09:03 remote WS tunnel version: 2

2025-07-23 12:09:03 minimum required WS tunnel version: 2

websocket tunnel started

2025-07-23 12:09:03 starting migration of VM 101 to node 'pve-backup-2' (pve-backup-2)

tunnel: -> sending command "bwlimit" to remote

tunnel: <- got reply

tunnel: -> sending command "bwlimit" to remote

tunnel: <- got reply

2025-07-23 12:09:03 found local disk 'pve:vm-101-disk-0' (attached)

2025-07-23 12:09:03 found local disk 'pve:vm-101-disk-1' (attached)

2025-07-23 12:09:03 copying local disk images

tunnel: -> sending command "disk-import" to remote

tunnel: <- got reply

tunnel: accepted new connection on '/run/pve/101.storage'

tunnel: requesting WS ticket via tunnel

tunnel: established new WS for forwarding '/run/pve/101.storage'

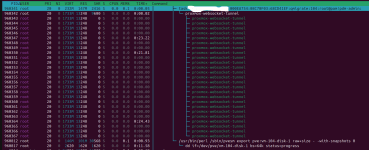

262144 bytes (262 kB, 256 KiB) copied, 4 s, 64.7 kB/s
327680 bytes (328 kB, 320 KiB) copied, 4 s, 80.9 kB/s
393216 bytes (393 kB, 384 KiB) copied, 4 s, 97.0 kB/s
458752 bytes (459 kB, 448 KiB) copied, 4 s, 113 kB/s
238747648 bytes (239 MB, 228 MiB) copied, 5 s, 47.7 MB/s
478150656 bytes (478 MB, 456 MiB) copied, 6 s, 79.7 MB/s
720896000 bytes (721 MB, 688 MiB) copied, 7 s, 103 MB/s
960888832 bytes (961 MB, 916 MiB) copied, 8 s, 120 MB/s
1210712064 bytes (1.2 GB, 1.1 GiB) copied, 9 s, 135 MB/s
1458307072 bytes (1.5 GB, 1.4 GiB) copied, 10 s, 146 MB/s
1674313728 bytes (1.7 GB, 1.6 GiB) copied, 11 s, 152 MB/s
1866399744 bytes (1.9 GB, 1.7 GiB) copied, 12 s, 156 MB/s
2096889856 bytes (2.1 GB, 2.0 GiB) copied, 13 s, 161 MB/s
2328625152 bytes (2.3 GB, 2.2 GiB) copied, 14 s, 166 MB/s
2565341184 bytes (2.6 GB, 2.4 GiB) copied, 15 s, 171 MB/s
2826960896 bytes (2.8 GB, 2.6 GiB) copied, 16 s, 177 MB/s
3074228224 bytes (3.1 GB, 2.9 GiB) copied, 17 s, 181 MB/s
3307012096 bytes (3.3 GB, 3.1 GiB) copied, 18 s, 184 MB/s
3562209280 bytes (3.6 GB, 3.3 GiB) copied, 19 s, 187 MB/s
3801415680 bytes (3.8 GB, 3.5 GiB) copied, 20 s, 190 MB/s
4049272832 bytes (4.0 GB, 3.8 GiB) copied, 21 s, 193 MB/s
4291362816 bytes (4.3 GB, 4.0 GiB) copied, 22 s, 195 MB/s
4517068800 bytes (4.5 GB, 4.2 GiB) copied, 23 s, 196 MB/s
4745658368 bytes (4.7 GB, 4.4 GiB) copied, 24 s, 198 MB/s
4965531648 bytes (5.0 GB, 4.6 GiB) copied, 25 s, 199 MB/s
5178654720 bytes (5.2 GB, 4.8 GiB) copied, 26 s, 199 MB/s
5382078464 bytes (5.4 GB, 5.0 GiB) copied, 27 s, 199 MB/s
5590810624 bytes (5.6 GB, 5.2 GiB) copied, 28 s, 200 MB/s
5818286080 bytes (5.8 GB, 5.4 GiB) copied, 29 s, 201 MB/s
6034292736 bytes (6.0 GB, 5.6 GiB) copied, 30 s, 201 MB/s
6249775104 bytes (6.2 GB, 5.8 GiB) copied, 31 s, 202 MB/s
6479282176 bytes (6.5 GB, 6.0 GiB) copied, 32 s, 202 MB/s
6706888704 bytes (6.7 GB, 6.2 GiB) copied, 33 s, 203 MB/s
6935085056 bytes (6.9 GB, 6.5 GiB) copied, 34 s, 204 MB/s
7161970688 bytes (7.2 GB, 6.7 GiB) copied, 35 s, 205 MB/s
7382564864 bytes (7.4 GB, 6.9 GiB) copied, 36 s, 205 MB/s
7610236928 bytes (7.6 GB, 7.1 GiB) copied, 37 s, 206 MB/s
7834959872 bytes (7.8 GB, 7.3 GiB) copied, 38 s, 206 MB/s
8064729088 bytes (8.1 GB, 7.5 GiB) copied, 39 s, 207 MB/s
8299216896 bytes (8.3 GB, 7.7 GiB) copied, 40 s, 207 MB/s
8522825728 bytes (8.5 GB, 7.9 GiB) copied, 41 s, 208 MB/s
8758493184 bytes (8.8 GB, 8.2 GiB) copied, 42 s, 209 MB/s
8994488320 bytes (9.0 GB, 8.4 GiB) copied, 43 s, 209 MB/s
9205579776 bytes (9.2 GB, 8.6 GiB) copied, 44 s, 209 MB/s
9443475456 bytes (9.4 GB, 8.8 GiB) copied, 45 s, 210 MB/s
9671540736 bytes (9.7 GB, 9.0 GiB) copied, 46 s, 210 MB/s
9907929088 bytes (9.9 GB, 9.2 GiB) copied, 47 s, 211 MB/s
10138222592 bytes (10 GB, 9.4 GiB) copied, 48 s, 211 MB/s
10359406592 bytes (10 GB, 9.6 GiB) copied, 49 s, 211 MB/s
10584129536 bytes (11 GB, 9.9 GiB) copied, 50 s, 212 MB/s

163840+0 records in

163840+0 records out

10737418240 bytes (11 GB, 10 GiB) copied, 51.6712 s, 208 MB/s

tunnel: -> sending command "query-disk-import" to remote

tunnel: done handling forwarded connection from '/run/pve/101.storage'

tunnel: <- got reply

2025-07-23 12:09:57 disk-import: volume datavg1/vm-103-disk-0 already exists - importing with a different name

tunnel: -> sending command "query-disk-import" to remote

tunnel: <- got reply

2025-07-23 12:09:58 disk-import: Logical volume "vm-103-disk-2" created.

tunnel: -> sending command "query-disk-import" to remote

tunnel: <- got reply

2025-07-23 12:10:04 waiting for disk import to finish..

tunnel: -> sending command "query-disk-import" to remote

tunnel: <- got reply

2025-07-23 12:10:10 waiting for disk import to finish..

tunnel: -> sending command "query-disk-import" to remote

tunnel: <- got reply

2025-07-23 12:10:11 disk-import: 3232+639798 records in

tunnel: -> sending command "query-disk-import" to remote

tunnel: <- got reply

2025-07-23 12:10:12 disk-import: 3232+639798 records out

tunnel: -> sending command "query-disk-import" to remote

tunnel: <- got reply

2025-07-23 12:10:13 disk-import: 10737418240 bytes (11 GB, 10 GiB) copied, 60.4283 s, 178 MB/s

tunnel: -> sending command "query-disk-import" to remote

tunnel: <- got reply

2025-07-23 12:10:14 volume 'pve:vm-101-disk-0' is 'datavg1:vm-103-disk-2' on the target

tunnel: -> sending command "disk-import" to remote

tunnel: <- got reply

tunnel: accepted new connection on '/run/pve/101.storage'

tunnel: requesting WS ticket via tunnel

tunnel: established new WS for forwarding '/run/pve/101.storage'

262144 bytes (262 kB, 256 KiB) copied, 4 s, 65.4 kB/s
327680 bytes (328 kB, 320 KiB) copied, 4 s, 81.8 kB/s
393216 bytes (393 kB, 384 KiB) copied, 4 s, 98.1 kB/s
458752 bytes (459 kB, 448 KiB) copied, 4 s, 114 kB/s

To:

Code:

mtunnel started

received command 'version'

received command 'bwlimit'

received command 'bwlimit'

received command 'disk-import'

ready

received command 'ticket'

received command 'query-disk-import'

disk-import: volume datavg1/vm-103-disk-0 already exists - importing with a different name

received command 'query-disk-import'

disk-import: Logical volume "vm-103-disk-2" created.

received command 'query-disk-import'

received command 'query-disk-import'

received command 'query-disk-import'

disk-import: 3232+639798 records in

received command 'query-disk-import'

disk-import: 3232+639798 records out

received command 'query-disk-import'

disk-import: 10737418240 bytes (11 GB, 10 GiB) copied, 60.4283 s, 178 MB/s

received command 'query-disk-import'

disk-import: successfully imported 'datavg1:vm-103-disk-2'

received command 'disk-import'

ready

received command 'ticket'

PDM:

Code:

mtunnel started
received command 'version'
received command 'bwlimit'
received command 'bwlimit'
received command 'disk-import'
ready
received command 'ticket'
received command 'query-disk-import'
disk-import: volume datavg1/vm-103-disk-0 already exists - importing with a different name
received command 'query-disk-import'
disk-import: Logical volume "vm-103-disk-2" created.
received command 'query-disk-import'
received command 'query-disk-import'
received command 'query-disk-import'
disk-import: 3232+639798 records in
received command 'query-disk-import'
disk-import: 3232+639798 records out
received command 'query-disk-import'
disk-import: 10737418240 bytes (11 GB, 10 GiB) copied, 60.4283 s, 178 MB/s
received command 'query-disk-import'
disk-import: successfully imported 'datavg1:vm-103-disk-2'
received command 'disk-import'
ready
received command 'ticket'
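The source-node log ends while the second disk-import is still printing progress: the last update reports 458752 bytes after 4 s, and the target-side log correspondingly ends with the second 'disk-import' and 'ticket' commands, with no further 'query-disk-import'. Because these dd-style progress updates run together on a single log line, a small Python sketch can split them apart and read off the last reported position. It assumes the progress format seen in this log; `parse_progress`, `last_update` and `SECOND_DISK_PROGRESS` are hypothetical names for this illustration, not part of PVE or PDM.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical helper: split dd-style progress updates that ran together on one log line
# (presumably because they are separated by carriage returns) and report the last one.

# Excerpt copied from the end of the source-node log: the second disk-import's output.
SECOND_DISK_PROGRESS = (
    "262144 bytes (262 kB, 256 KiB) copied, 4 s, 65.4 kB/s"
    "327680 bytes (328 kB, 320 KiB) copied, 4 s, 81.8 kB/s"
    "393216 bytes (393 kB, 384 KiB) copied, 4 s, 98.1 kB/s"
    "458752 bytes (459 kB, 448 KiB) copied, 4 s, 114 kB/s"
)

# One update: "<bytes> bytes (<human-readable sizes>) copied, <seconds> s, <rate> <unit>/s"
UPDATE = re.compile(r"(\d+) bytes \([^)]*\) copied, ([\d.]+) s, ([\d.]+ [kMG]?B/s)")

Update = Tuple[int, float, str]  # (bytes copied, elapsed seconds, rate as printed)


def parse_progress(text: str) -> List[Update]:
    """Return every progress update found in text, in order."""
    return [(int(n), float(secs), rate) for n, secs, rate in UPDATE.findall(text)]


def last_update(text: str) -> Optional[Update]:
    """Return the final progress update, or None if the text contains none."""
    updates = parse_progress(text)
    return updates[-1] if updates else None


if __name__ == "__main__":
    updates = parse_progress(SECOND_DISK_PROGRESS)
    # Byte difference between consecutive updates.
    steps = {b - a for (a, _, _), (b, _, _) in zip(updates, updates[1:])}
    print(f"{len(updates)} updates, steps {steps}, last: {last_update(SECOND_DISK_PROGRESS)}")
```

The constant 65536-byte step between updates matches the 64 KiB block size implied by the first disk's dd summary (10737418240 bytes / 163840 records).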