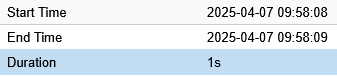

So, as the title says, it's taking 40 minutes to create a VM, and I can't for the life of me figure out why. I've attached an image with all the settings I'm using to create the VM. Any help would be greatly appreciated!

System Specs:

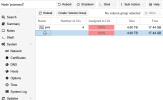

System when creating the VM:

VM Settings:

- There is no hardware RAID

- VM OS: Ubuntu 24.04

System Specs:

PowerEdge R730xd:

2x Intel(R) Xeon(R) CPU E5-2683 v4 @ 2.10GHz

256GB DDR4 2400MHz PC4-2400T ECC

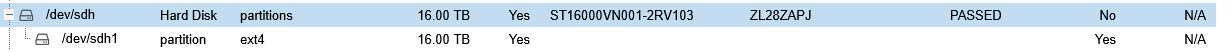

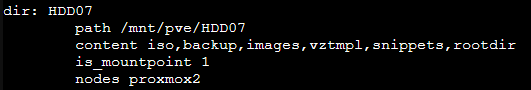

12x 16TB Seagate IronWolf Pro NAS hard drives (yes, I know they are not enterprise drives)

Not sure if this has anything to do with it?

