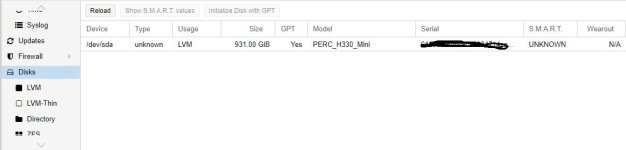

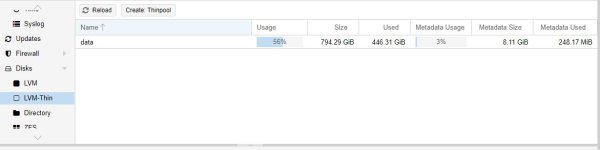

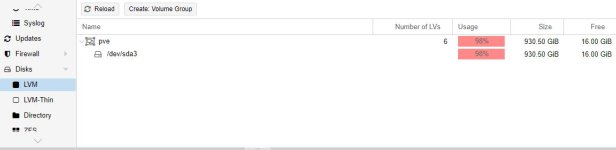

Hi guys, a simple question: while trying to restore a VM, I get this warning: "Sum of all thin volume sizes (900.00 GiB) exceeds the size of thin pool pve/data and the amount of free space in volume group." What does this mean? I've attached some screenshots of my Proxmox where you can see my disk configuration. Any idea about this warning? Thanks a lot in advance.

sum of all thin volume sizes exceeds the size of thin pool...

- Thread starter elettrodata4

It's like the message says: you only have 16 GiB of free space on that storage and you are trying to add a thin-provisioned disk of 900 GiB. So the VM is allowed to write 900 GiB, but once it writes more than 16 GiB you will get errors and data may be corrupted, because you are running out of space.

If you use thin provisioning and overprovision, you always need to make sure yourself that there is enough free capacity available.

@Dunuin thanks for the reply :] I have just one doubt: on the node I have 3 VMs of 300 GB, 250 GB and 200 GB respectively, and when I try to restore the 4th VM, that warning appears (sum of etc...). But the 4th VM has a 110 GB disk (860 GB total)... I don't understand this. For now I've restored the 4th VM onto one of my NAS boxes so as not to risk any problems :]
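To make the arithmetic concrete, here is a rough sketch of how the provisioned total compares with the pool size. The four disk sizes are the ones from the post above; the 800 GiB pool size is a made-up number for illustration, since the real one is only visible in the screenshots, and the function name is hypothetical too.

```python
def thin_pool_overcommit(pool_size_gib, volume_sizes_gib):
    """Compare the sum of thin volume sizes with the size of the pool.

    Returns (provisioned, ratio, shortfall), where shortfall is how much
    the volumes could write beyond what the pool can physically hold.
    """
    provisioned = sum(volume_sizes_gib)
    ratio = provisioned / pool_size_gib
    shortfall = max(0, provisioned - pool_size_gib)
    return provisioned, ratio, shortfall


# Disk sizes from the post above; the 800 GiB pool size is an assumption.
provisioned, ratio, shortfall = thin_pool_overcommit(800, [300, 250, 200, 110])
print(f"provisioned: {provisioned} GiB, shortfall: {shortfall} GiB")
```

A ratio above 1 means the pool is overprovisioned: everything works until the guests actually fill their disks.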

I still don't get it.

Migrating a 4 GB container to a node that has a thin-lvm with about 60ish GB free.

Where does it get the idea that I've provisioned 88 GB for this container?

The migration went through just fine I think.

Can somebody please help me understand this?

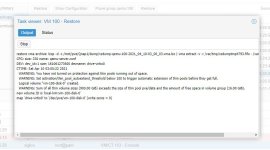

Code:

2021-11-25 16:41:13 shutdown CT 102

2021-11-25 16:41:17 starting migration of CT 102 to node 'smaug' (192.168.0.7)

2021-11-25 16:41:17 found local volume 'local-lvm:vm-102-disk-0' (in current VM config)

2021-11-25 16:41:20 WARNING: You have not turned on protection against thin pools running out of space.

2021-11-25 16:41:20 WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.

2021-11-25 16:41:20 Logical volume "vm-102-disk-0" created.

2021-11-25 16:41:20 WARNING: Sum of all thin volume sizes (88.00 GiB) exceeds the size of thin pool pve/data and the amount of free space in volume group (13.48 GiB).

2021-11-25 16:43:02 65536+0 records in

2021-11-25 16:43:02 65536+0 records out

2021-11-25 16:43:02 4294967296 bytes (4.3 GB, 4.0 GiB) copied, 102.669 s, 41.8 MB/s

2021-11-25 16:43:06 5521+234098 records in

2021-11-25 16:43:06 5521+234098 records out

2021-11-25 16:43:06 4294967296 bytes (4.3 GB, 4.0 GiB) copied, 105.06 s, 40.9 MB/s

2021-11-25 16:43:06 successfully imported 'local-lvm:vm-102-disk-0'

2021-11-25 16:43:06 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=smaug' root@192.168.0.7 pvesr set-state 102 \''{}'\'

Logical volume "vm-102-disk-0" successfully removed

2021-11-25 16:43:08 start final cleanup

2021-11-25 16:43:10 start container on target node

2021-11-25 16:43:10 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=smaug' root@192.168.0.7 pct start 102

2021-11-25 16:43:16 migration finished successfully (duration 00:02:03)

TASK OK

You are probably running multiple LXCs that need 88 GiB in total, but you only have 13.48 GiB of free space left. So all your LXCs together can only write up to 13.48 GiB more data, yet they will try to write up to 88 GiB, because you told them to use more space than you actually have.

So nothing will prevent the LXCs from completely filling up that disk until the server crashes. You need to make sure yourself that this never happens, either by deleting data in your LXCs or by replacing the storage with a bigger disk.
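Since nothing enforces this for you, one option is to check the remaining headroom yourself from time to time. A minimal sketch, assuming you already know the pool size and its usage percentage (the `Data%` column that `lvs` prints for a thin pool); the function names and the 80% threshold are arbitrary choices for illustration:

```python
def pool_headroom_gib(pool_size_gib, data_percent):
    """Space still writable in the thin pool, in GiB."""
    return pool_size_gib * (100.0 - data_percent) / 100.0


def pool_is_low(data_percent, threshold_percent=80.0):
    """True once pool usage has reached the chosen threshold."""
    return data_percent >= threshold_percent


# Hypothetical pool: 100 GiB in size, 86.5% used.
print(pool_headroom_gib(100, 86.5), pool_is_low(86.5))
```

Something like this could be run from a cron job to send a notification before the pool fills up.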

I see what you mean.

However, I have five containers, each set up with 4 GB of storage, and totalling 20 GB.

With that in mind, I still don't see where those 88 gigs are coming from.
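One way to see where a number like this comes from is to list every thin volume in the pool and add up the sizes. As far as I know, the warning counts all thin volumes in the pool, not only the current container disks, so snapshots and template base volumes would be included. The sketch below parses the JSON that `lvs --reportformat json --units b --nosuffix -o lv_name,lv_size,pool_lv pve` should print. The sample data is made up for illustration and is not taken from the node in this thread.

```python
import json


def sum_thin_volumes(lvs_json, pool="data"):
    """Sum the virtual sizes (in bytes) of all thin volumes in one pool.

    Expects the JSON report from `lvs` run with `--units b --nosuffix`,
    so that lv_size is a plain byte count stored as a string.
    """
    report = json.loads(lvs_json)
    per_volume = {}
    for section in report["report"]:
        for lv in section.get("lv", []):
            # The pool itself has an empty pool_lv, so it is skipped here.
            if lv.get("pool_lv") == pool:
                per_volume[lv["lv_name"]] = int(lv["lv_size"])
    return sum(per_volume.values()), per_volume


GIB = 1024 ** 3

# Hypothetical lvs output: two container disks, one snapshot, one template.
sample = json.dumps({"report": [{"lv": [
    {"lv_name": "data", "lv_size": str(60 * GIB), "pool_lv": ""},
    {"lv_name": "vm-101-disk-0", "lv_size": str(4 * GIB), "pool_lv": "data"},
    {"lv_name": "vm-102-disk-0", "lv_size": str(4 * GIB), "pool_lv": "data"},
    {"lv_name": "snap_vm-101-disk-0_before", "lv_size": str(4 * GIB), "pool_lv": "data"},
    {"lv_name": "base-9000-disk-0", "lv_size": str(32 * GIB), "pool_lv": "data"},
]}]})

total, volumes = sum_thin_volumes(sample)
print(f"{total / GIB:.2f} GiB across {len(volumes)} thin volumes")
```

Comparing the per-volume list against the container configs should show which volumes account for the difference.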