I'm trying to set up a simple network bond, and I can't seem to get it to work. My server has two NICs, which I am trying to connect to an HP1920/48G switch for increased network capacity. I would like the server to present a single IP address for management and have the VMs use the same bridge for their network access, all on the same subnet.

On the switch side, I created a 2-port static link aggregation using 802.3ad, and I can see that the switch considers both ports "selected" (that is, active and part of the link aggregation).

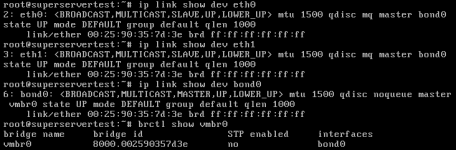

On the server side (Proxmox 4.4-5), I have the following in /etc/network/interfaces:

Code:

    auto lo
    iface lo inet loopback

    iface eth0 inet manual

    iface eth1 inet manual

    auto bond0
    iface bond0 inet manual
        slaves eth0 eth1
        bond_miimon 100
        bond_mode 802.3ad
        bond_xmit_hash_policy layer2+3

    auto vmbr0
    iface vmbr0 inet static
        address 192.168.240.195
        netmask 255.255.252.0
        gateway 192.168.243.24
        bridge_ports bond0
        bridge_stp off
        bridge_fd 0

When I reboot the server, the links appear to come up, but I cannot reach the server via SSH or the web interface (although, weirdly, I can get through under certain rare circumstances). I don't know whether the VMs can communicate through the bridge or not.
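To rule out a plain addressing mistake, here is a small sanity check of the values in the vmbr0 stanza. The gateway's third octet differs from the address's, so it is worth confirming that both fall inside the same subnet under the 255.255.252.0 netmask. This is only a sketch using Python's standard `ipaddress` module; the variable names are my own, and it checks arithmetic only, not anything about the bond or the switch.

```python
import ipaddress

# Values copied from the vmbr0 stanza in /etc/network/interfaces.
iface = ipaddress.ip_interface("192.168.240.195/255.255.252.0")
gateway = ipaddress.ip_address("192.168.243.24")

# The network the bridge address belongs to under this netmask.
print(iface.network)             # 192.168.240.0/22

# True if the gateway is directly reachable on that subnet.
gateway_in_subnet = gateway in iface.network
print(gateway_in_subnet)         # True
```

So the address, netmask, and gateway are consistent with each other, and the problem presumably lies elsewhere.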

Can anyone see any obvious problems with this setup and/or my config? Can you suggest a more robust way to achieve the goal?

Thanks,

Jeff