Hello,

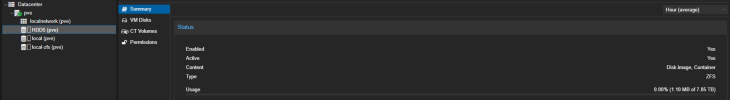

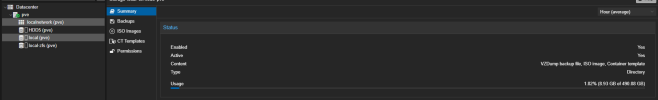

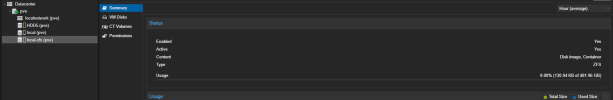

This is my first time working with Proxmox, and I can't explain the following: why do both 'local' and 'local-zfs' show approximately the same size?

The configuration is 3x 256 GB SSDs and 3x 4 TB HDDs. My idea is to use the SSDs in a RAIDZ-1 setup for Proxmox and the VMs, and the HDDs in a RAIDZ-1 setup for storage.

If I have installed Proxmox correctly and understood it properly, 'local' and 'local-zfs' together should come to around 500 GB.
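For reference, here is the rough arithmetic behind that ~500 GB figure, as a minimal sketch. It assumes RAIDZ-1 gives up one disk's worth of capacity to parity and ignores ZFS metadata, slop space and padding overhead, so the real usable numbers will be somewhat lower. The function name `raidz_usable_gb` is just illustrative.

```python
def raidz_usable_gb(disk_count: int, disk_size_gb: int, parity: int = 1) -> int:
    """Rough usable capacity of one RAIDZ vdev in GB.

    Assumption: usable space = (disks - parity) * disk size.
    ZFS overhead (metadata, slop space, padding) is ignored.
    """
    if disk_count <= parity:
        raise ValueError("RAIDZ needs more disks than parity devices")
    return (disk_count - parity) * disk_size_gb


def gb_to_gib(size_gb: float) -> float:
    """Convert decimal GB (10^9 bytes) to GiB (2^30 bytes)."""
    return size_gb * 10**9 / 2**30


ssd_pool = raidz_usable_gb(3, 256)   # 3x 256 GB SSDs in RAIDZ-1
hdd_pool = raidz_usable_gb(3, 4000)  # 3x 4 TB HDDs in RAIDZ-1
print(ssd_pool, hdd_pool)            # 512 8000
print(round(gb_to_gib(ssd_pool), 1)) # 476.8
```

So the SSD pool should offer roughly 512 GB (about 477 GiB) before overhead, and the HDD pool roughly 8 TB.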

Is there a way to figure out which partition is using which disks?

Thank you.