Hello,

First, I would explore the firmware question before throwing the 990 Pros in the trash, for the following reasons:

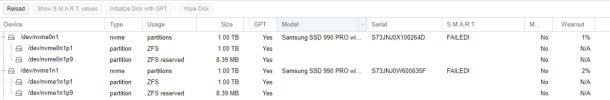

- the wearout indicator is only 1-2 % and the FAILED status is not permanent, which probably means the (supposed) failure condition is not permanent either

- a temperature above some preset warning threshold after a (long-lasting) high load could fit this condition, especially for the non-heatsink versions of the drives

- this hypothesis is stronger for recent PCIe Gen 5 and high-end PCIe Gen 4 drives, and the 990 Pro line is one of the latter

- you could use the "Show S.M.A.R.T. values" button to possibly confirm this hypothesis (optional, though; please read below)

- the Samsung 990 Pro (in your case, but also the 980 Pro) sadly became known in 2023 for various problems related to older versions of its firmware

- including misinterpretation of SMART data (temperature or wearout) by the first firmware versions, leading to numerous "defective" SSDs

- it seems that the latest firmware solved these problems, at least for the SSDs that were not already nearly dead

- Samsung provides the firmware as a bootable ISO (you can boot it with Ventoy, for example; I recommend it if you don't already know it)

- a quick search led me to THIS page from Samsung, where you can download the ISO in the Firmware section (below the annoying Magician software)

- I have done a dozen firmware updates with those ISOs on 800- and 900-series SSDs, which is much simpler than moving the drives to a Windows box
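If you prefer the shell over the GUI button, the temperature hypothesis can be checked from the NVMe "Critical Warning" bitfield. Below is a minimal sketch, assuming smartmontools 7.0 or later (which supports `--json`) and that the NVMe health data appears under the `nvme_smart_health_information_log` key. The bit meanings follow the NVMe specification; the `looks_transient` heuristic and its 5 % wear cutoff are my own hypothetical choices, not a Samsung or Proxmox rule.

```python
import json
import subprocess
from typing import List

# Bit meanings of the NVMe "Critical Warning" field (SMART / Health Information log page).
CRITICAL_WARNING_BITS = {
    0: "available spare below threshold",
    1: "temperature above over-temperature (or below under-temperature) threshold",
    2: "NVM subsystem reliability degraded",
    3: "media placed in read-only mode",
    4: "volatile memory backup device failed",
    5: "persistent memory region read-only or unreliable",
}

# Bits that point to a condition that can clear on its own, as opposed to failing flash.
TRANSIENT_BITS = {1}


def decode_critical_warning(value: int) -> List[str]:
    """Return a human-readable description for every bit set in the Critical Warning byte."""
    return [text for bit, text in CRITICAL_WARNING_BITS.items() if value & (1 << bit)]


def looks_transient(health: dict, max_wear_percent: int = 5) -> bool:
    """Hypothetical heuristic: the FAILED state comes only from the temperature bit
    while the wearout indicator (percentage_used) is still low."""
    warning = health.get("critical_warning", 0)
    set_bits = {bit for bit in CRITICAL_WARNING_BITS if warning & (1 << bit)}
    low_wear = health.get("percentage_used", 100) <= max_wear_percent
    return bool(set_bits) and set_bits <= TRANSIENT_BITS and low_wear


def read_health_log(device: str) -> dict:
    """Run smartctl with JSON output and return the NVMe health log as a dict."""
    # No check=True: smartctl uses a non-zero exit bitmask even when it prints valid data.
    result = subprocess.run(
        ["smartctl", "--json", "-a", device], capture_output=True, text=True
    )
    report = json.loads(result.stdout)
    return report.get("nvme_smart_health_information_log", {})
```

For example, `health = read_health_log("/dev/nvme0")` followed by `decode_critical_warning(health.get("critical_warning", 0))` lists the active warnings, and the same log also carries `warning_temp_time` and `critical_comp_time` (minutes spent above the warning and critical temperature thresholds), which would support or rule out the overheating theory.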

With a little touch of humor I would add: "It is not consumer SSDs that are unsuitable for ZFS; it is ZFS that is less suitable as local storage for PVE nodes." This is also based on my (humble) experience going back and forth between EXT4 and ZFS on consumer SSDs, and on some (simple) tests like CrystalDiskMark (up to 3x better write performance with EXT4 + qcow2 compared to ZFS).

Hope it helps!

You describe almost exactly the issues I have here with the 990 Pros.

"explore the firmware question"

-> The newest firmware doesn't change anything; at most they fail slightly later. I thought updating would help too.

"indicator is only 1-2 %"

-> Correct, same here, and they still fail.

"temperature higher than some preset warning"

-> Impossible here, since they sit on a bifurcation card with its own fan and a gigantic heatsink for the SSDs. I even used Kryonaut paste between the chips and the heatsink.

As for everything else, the 980 Pros never had such an issue and are rock-stable. The 970 Evo Plus drives are rock-stable too.

My favourites are the 870 EVO "SSDs". Performance-wise they are a bit slow (extremely slow, to be honest), but none of them has ever died in our company. We use them in an Open-E JovianDSS storage cluster.

That's basically a ZFS HA storage solution over iSCSI. There are 48x 870 EVO 2TB drives.

So across all the servers I have, privately or in the company, the only drives I have come across that are crap are the 990 series and the Micron MX500.

The Microns are even worse: when the MX500 first came out, it was TLC-based and almost indestructible, but Micron switched to QLC mid-production and the same MX500 drive became an absolute piece of crap.

You can only distinguish the good ones from the bad ones by the firmware version: the good ones have 0010 firmware and the bad ones 0024 or later.
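To check a fleet before deploying, the firmware rule above could be scripted. This is a minimal sketch, assuming smartmontools 7.0 or later (the JSON report exposes a top-level `firmware_version` key) and assuming the version string ends in the number quoted above. The thresholds encode only the observation in this thread, not any Micron documentation, and anything in between is reported as unknown.

```python
import json
import re
import subprocess
from typing import Optional

# Taken from this thread's experience only: 0010 held up, 0024 or later failed within weeks.
KNOWN_GOOD = 10
KNOWN_BAD_FROM = 24


def firmware_number(version: str) -> Optional[int]:
    """Extract the trailing digits of a firmware string, e.g. '0024' -> 24 (assumed format)."""
    match = re.search(r"(\d+)$", version.strip())
    return int(match.group(1)) if match else None


def classify_mx500(version: str) -> str:
    """Map a firmware string to the batches described in this thread."""
    number = firmware_number(version)
    if number is None:
        return "unknown"
    if number == KNOWN_GOOD:
        return "old batch (good in this thread's experience)"
    if number >= KNOWN_BAD_FROM:
        return "new batch (failed within weeks in this thread's experience)"
    return "unknown"


def read_firmware_version(device: str) -> str:
    """Read the firmware version reported by smartctl's identity (-i) section."""
    # No check=True: smartctl returns a non-zero exit bitmask even on valid output.
    result = subprocess.run(
        ["smartctl", "--json", "-i", device], capture_output=True, text=True
    )
    return json.loads(result.stdout).get("firmware_version", "")
```

For example, `classify_mx500(read_firmware_version("/dev/sda"))` would flag a drive before it goes into a pool.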

We ran into this while building a new server and wondering why the same Micron MX500 drives were dying weekly. It took forever to find the reason, but after disassembling an old drive and a new drive, it was absolutely clear!

Here is a picture: on the left are the old MX500s with 0010 firmware, and on the right is a new MX500 with 0024 firmware.

The worst part is that you can't know this in advance. If you have had a good experience with a product and buy the same product again, you expect to get the same thing. But in this case, Micron sells these new MX500 SSDs as the same model, and they are pure crap.

Cheers

PS: Both are genuine and both are MX500 2TB. The left ones never died; the right ones die after two weeks at most in our company.

In the meantime, we replaced all 48 of those MX500s with Samsung 870 EVOs, simply because you can't get the old MX500 anymore. Since we could not source replacements in the long term, they had to be swapped out.