HELP!

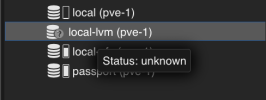

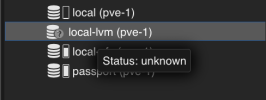

Proxmox seems to have 'lost' my local-lvm storage somehow. Most of my VMs and containers live on this drive. In the Proxmox GUI, the storage shows a status of 'Unknown' in the sidebar, and its Status shows Active: No. Most of my VMs are still running, but I am afraid to shut down or restart the host. If I try to start a VM that is not already running, I get this error: `TASK ERROR: no such logical volume local-lvm/local-lvm`. I cannot seem to mount it or otherwise get Proxmox to recognize the local-lvm storage, even though the running VMs are stored on that drive!

I have no idea how to troubleshoot this situation. Any help would be GREATLY appreciated!

Thank you.

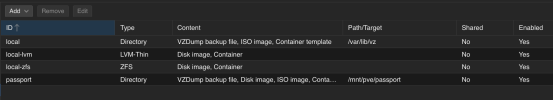

I can see it in `lsblk`:

```
lsblk
NAME                                    MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
sda                                       8:0    0   1.9T  0 disk
└─sda1                                    8:1    0   1.9T  0 part
  ├─local--lvm-local--lvm_tmeta         252:0    0  15.9G  0 lvm
  │ └─local--lvm-local--lvm-tpool       252:2    0   1.8T  0 lvm
  │   ├─local--lvm-local--lvm           252:3    0   1.8T  1 lvm
  │   ├─local--lvm-vm--101--disk--0     252:4    0  1000G  0 lvm
  │   │ ├─local--lvm-vm--101--disk--0p1 252:11   0     1M  0 part
  │   │ └─local--lvm-vm--101--disk--0p2 252:12   0 976.6G  0 part
  │   ├─local--lvm-vm--103--disk--0     252:5    0    50G  0 lvm
  │   ├─local--lvm-vm--102--disk--0     252:6    0     8G  0 lvm
  │   ├─local--lvm-vm--105--disk--0     252:7    0     8G  0 lvm
  │   ├─local--lvm-vm--106--disk--0     252:8    0     8G  0 lvm
  │   ├─local--lvm-vm--107--disk--0     252:9    0     8G  0 lvm
  │   └─local--lvm-vm--108--disk--0     252:10   0     2G  0 lvm
  └─local--lvm-local--lvm_tdata         252:1    0   1.8T  0 lvm
    └─local--lvm-local--lvm-tpool       252:2    0   1.8T  0 lvm
      ├─local--lvm-local--lvm           252:3    0   1.8T  1 lvm
      ├─local--lvm-vm--101--disk--0     252:4    0  1000G  0 lvm
      │ ├─local--lvm-vm--101--disk--0p1 252:11   0     1M  0 part
      │ └─local--lvm-vm--101--disk--0p2 252:12   0 976.6G  0 part
      ├─local--lvm-vm--103--disk--0     252:5    0    50G  0 lvm
      ├─local--lvm-vm--102--disk--0     252:6    0     8G  0 lvm
      ├─local--lvm-vm--105--disk--0     252:7    0     8G  0 lvm
      ├─local--lvm-vm--106--disk--0     252:8    0     8G  0 lvm
      ├─local--lvm-vm--107--disk--0     252:9    0     8G  0 lvm
      └─local--lvm-vm--108--disk--0     252:10   0     2G  0 lvm
```

And in `fdisk -l`:

```
Disk /dev/sda: 1.86 TiB, 2048408248320 bytes, 4000797360 sectors
Disk model: Samsung SSD 850
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: CB63BC22-2519-44F1-A915-4CF8D8F6EFDA

Device     Start        End    Sectors  Size Type
/dev/sda1   2048 4000796671 4000794624  1.9T Linux LVM
```
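In case it helps whoever reads the `lsblk` tree: as far as I understand it, device-mapper names for LVM volumes are built as `<VG>-<LV>[-<layer>]`, with every hyphen inside the VG or LV name doubled. If that is right, `local--lvm-local--lvm` is the LV `local-lvm` in the volume group `local-lvm`, i.e. the same `local-lvm/local-lvm` that the error claims does not exist. Here is a small Python sketch that decodes the names under that assumption (`split_dm_name` is just my own hypothetical helper, not part of LVM or Proxmox):

```python
def split_dm_name(dm_name: str) -> list[str]:
    """Split a device-mapper name into its components.

    Assumes LVM's naming scheme VG-LV[-layer]: a single '-' separates
    components, and '--' stands for a literal '-' inside a VG or LV name.
    """
    parts: list[str] = []
    current: list[str] = []
    i = 0
    while i < len(dm_name):
        if dm_name.startswith("--", i):
            current.append("-")  # escaped hyphen that belongs to the name
            i += 2
        elif dm_name[i] == "-":
            parts.append("".join(current))  # separator between components
            current = []
            i += 1
        else:
            current.append(dm_name[i])
            i += 1
    parts.append("".join(current))
    return parts


if __name__ == "__main__":
    for name in [
        "local--lvm-local--lvm",        # -> ['local-lvm', 'local-lvm']
        "local--lvm-local--lvm-tpool",  # -> ['local-lvm', 'local-lvm', 'tpool']
        "local--lvm-local--lvm_tmeta",  # -> ['local-lvm', 'local-lvm_tmeta']
        "local--lvm-vm--101--disk--0",  # -> ['local-lvm', 'vm-101-disk-0']
    ]:
        print(name, "->", split_dm_name(name))
```

Partition entries such as `local--lvm-vm--101--disk--0p1` are partitions inside the VM disk rather than separate LVs, so I left them out of the example.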