This is likely a very easy question, but I've searched and can't seem to find the answer. I wouldn't call myself a guru by any stretch of the word, so forgive me if this is first-day-of-grade-school stuff.

I'm migrating to a new server that has a dual-port 10GbE NIC. The old server had a dual-port 2.5GbE NIC. I've created the bond and bridge, and ethtool shows 20 Gb/s for the bond/bridge as expected. (I know that's the aggregate bandwidth, not the speed I'll actually get on a single connection.)

I'm migrating via Proxmox Backup Server (PBS), which is connected to both the old and new boxes.

Basically, I'm shutting down the CTs and VMs on the old server, backing them up, and restoring them to the new server.

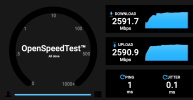

My problem is: any CT/VM I create from scratch on the new server gets the correct speed, but the CTs/VMs I'm pulling from PBS are somehow retaining the 2.5GbE speeds. I'm assuming they're configured that way somewhere, but I can't find it. I've compared the new and old CTs/VMs, and the network settings in the GUI are the same. I've also looked at the CT/VM config files, and they're the same (both use the vmbr0 bridge). The only difference is the VLAN, and I don't think I have any restrictions on inter-VLAN routing in my network. I can certainly try putting them or my client in the native VLAN to rule that out, though (I didn't think to try that).
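In case it helps anyone repeat the config comparison, here is a rough Python sketch of diffing the `netN:` lines of two container configs option by option instead of by eye. It assumes the usual comma-separated `key=value` layout of a Proxmox CT config line; the sample lines, MAC addresses, VLAN tags, and the `rate=300` value are made up for illustration and are not taken from my actual configs. The function names are hypothetical too.

```python
def parse_net_lines(config_text):
    """Return {'net0': {'bridge': 'vmbr0', ...}, ...} from config text."""
    nets = {}
    for line in config_text.splitlines():
        line = line.strip()
        if line.startswith("["):
            # Assumption: bracketed sections hold snapshot copies; stop there.
            break
        # Split on the first colon only, since MAC addresses contain colons.
        key, sep, value = line.partition(":")
        if not sep or not key.startswith("net") or not key[3:].isdigit():
            continue
        options = {}
        for part in value.strip().split(","):
            name, eq, val = part.partition("=")
            options[name.strip()] = val.strip() if eq else ""
        nets[key] = options
    return nets


def diff_net_options(a, b, ignore=("hwaddr", "name", "ip", "gw")):
    """List (nic, option, value_a, value_b) for every option that differs."""
    diffs = []
    for nic in sorted(set(a) | set(b)):
        opts_a, opts_b = a.get(nic, {}), b.get(nic, {})
        for opt in sorted(set(opts_a) | set(opts_b)):
            if opt in ignore:
                continue  # expected to differ between two guests
            if opts_a.get(opt) != opts_b.get(opt):
                diffs.append((nic, opt, opts_a.get(opt), opts_b.get(opt)))
    return diffs


# Made-up sample lines, only to show the shape of the comparison.
fresh_conf = "net0: name=eth0,bridge=vmbr0,hwaddr=AA:AA:AA:AA:AA:01,ip=dhcp,tag=20,type=veth"
restored_conf = "net0: name=eth0,bridge=vmbr0,hwaddr=AA:AA:AA:AA:AA:02,ip=dhcp,rate=300,tag=30,type=veth"

differences = diff_net_options(parse_net_lines(fresh_conf), parse_net_lines(restored_conf))
for nic, opt, old, new in differences:
    print(f"{nic}: {opt} differs ({old!r} vs {new!r})")
```

Options such as `rate` or `mtu` on a `netN:` line are the ones that would plausibly affect throughput, so a diff like this would make them stand out. VM configs put the MAC address in the model key (for example `virtio=...`), so that key would need to be added to `ignore` when comparing VMs.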

One container was created from scratch and is getting 10GbE speeds; the other was pulled from backup and is getting 2.5GbE speeds. The network configuration inside the containers appears to be the same. iperf3 shows the correct speeds for the "from scratch" containers and the old speeds for the "migrated" containers.

I'm pulling my hair out trying to figure it out. The CTs and VMs are stopped on the old server, but the server itself is still running. I've tried both CTs and VMs, and both get 2.5GbE speeds unless I create them from scratch, so I assume there is some configuration within the CT/VM itself, but I can't seem to locate it.

Anyone have any thoughts?
