Hi,

I am running Proxmox VE v8.2.4, and I often take snapshots of Debian 11 VMs with memory included.

For several weeks (I'm not sure it happened with qemu v9) I have systematically a critical issue :

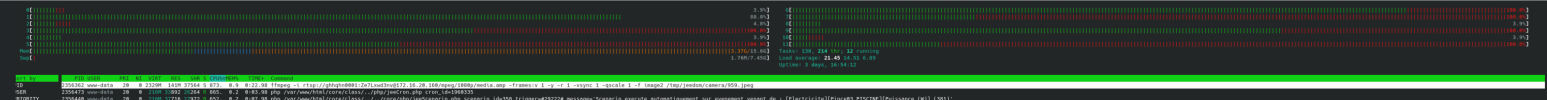

State of the VM at the end of snapshot :

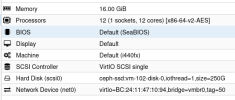

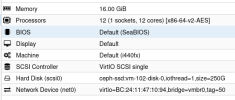

Here is my configuration :

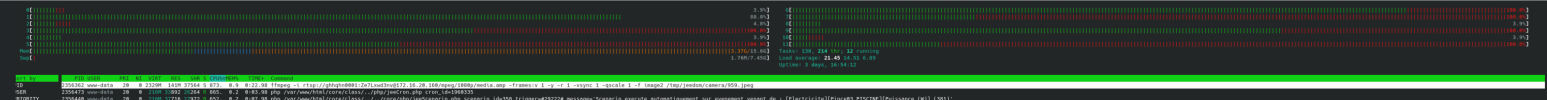

I am not sure, it seems there is a subject with "io" :

-> Did I missed something in configuration ?

-> Is there any patch to fix this critical issue ?

Don't hesitate to ask me for logs.

Regards.

I am running Proxmox VE v8.2.4, and I often create Debian 11 VM's snapshots with memory.

For several weeks (I'm not sure it happened with qemu v9) I have systematically a critical issue :

- Every time I make a snapshot, VM becomes very slow / non-responsive.

- The only way to return in normal mode is to stop and restart VM.

State of the VM at the end of snapshot :

Here is my configuration :

I am not sure, it seems there is a subject with "io" :

-> Did I missed something in configuration ?

-> Is there any patch to fix this critical issue ?

Don't hesitate to ask me for logs.

Regards.