Hi, I have a problem with low IOPS and really slow reads on my backup server. Operations such as cleaning up old backup chunks take around 10 days. I have a 20 TB HDD pool with SSDs for ZIL and L2ARC, and it's almost full.

I have configured L2ARC and ZIL on two different SSDs, but even when I remove them from the pool (to see if anything changes), IOPS and read speed don't change.

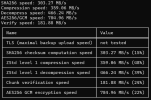

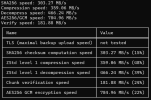

Here's the benchmark:

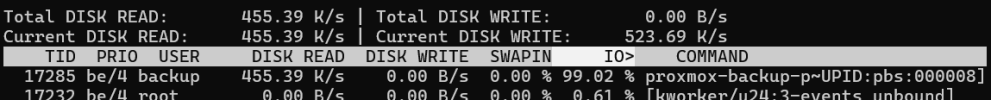

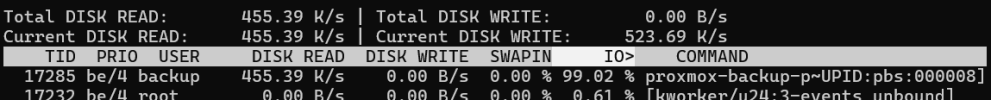
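
As a rough cross-check of the benchmark, a minimal Python sketch like the one below measures single-threaded (queue depth 1) random 4 KiB read IOPS against an existing large file on the pool. It is not the tool shown in the screenshots, and `random_read_iops` is a hypothetical helper. Reads answered from the page cache or the ZFS ARC inflate the result, so the file should be much larger than the 16 GB of RAM.

```python
import os
import random
import sys
import time


def random_read_iops(path, block_size=4096, num_reads=1000, seed=None):
    """Measure queue-depth-1 random-read IOPS on `path` (hypothetical helper).

    Reads `num_reads` blocks of `block_size` bytes at random block-aligned
    offsets and returns reads per second. Cached reads (page cache / ARC)
    make the number optimistic, so use a file much larger than RAM.
    """
    blocks = os.path.getsize(path) // block_size
    if blocks == 0:
        raise ValueError("file is smaller than one block")
    # seed=None draws fresh offsets each run; pass a seed for repeatability.
    rng = random.Random(seed)
    fd = os.open(path, os.O_RDONLY)
    try:
        start = time.perf_counter()
        for _ in range(num_reads):
            offset = rng.randrange(blocks) * block_size
            os.pread(fd, block_size, offset)  # one synchronous read at a time
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return num_reads / max(elapsed, 1e-9)


if __name__ == "__main__":
    print(f"{random_read_iops(sys.argv[1]):.0f} random 4 KiB reads/s")
```

Running it twice with the same seed would mostly hit the cache on the second run, which is why the default leaves the offsets unseeded.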

And iotop while marking chunks:

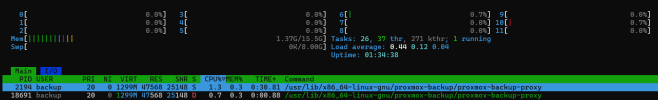

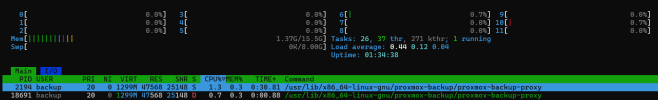

And htop here:

Garbage collection log:

```
2024-05-16T14:10:00+02:00: starting garbage collection on store HDD1
2024-05-16T14:10:00+02:00: task triggered by schedule 'daily'
2024-05-16T14:10:00+02:00: Start GC phase1 (mark used chunks)
2024-05-16T23:53:49+02:00: marked 1% (36 of 3553 index files)
2024-05-17T12:42:43+02:00: marked 2% (72 of 3553 index files)
2024-05-17T23:48:08+02:00: marked 3% (107 of 3553 index files)
2024-05-18T09:53:53+02:00: marked 4% (143 of 3553 index files)
2024-05-18T22:50:36+02:00: marked 5% (178 of 3553 index files)
2024-05-19T07:14:28+02:00: marked 6% (214 of 3553 index files)
2024-05-19T11:43:56+02:00: marked 7% (249 of 3553 index files)
2024-05-19T16:29:23+02:00: marked 8% (285 of 3553 index files)
2024-05-20T02:02:22+02:00: marked 9% (320 of 3553 index files)
2024-05-20T02:14:49+02:00: marked 10% (356 of 3553 index files)
2024-05-20T02:23:23+02:00: marked 11% (391 of 3553 index files)
2024-05-20T02:35:52+02:00: marked 12% (427 of 3553 index files)
2024-05-20T02:44:05+02:00: marked 13% (462 of 3553 index files)
2024-05-20T05:12:40+02:00: marked 14% (498 of 3553 index files)
2024-05-20T05:42:39+02:00: marked 15% (533 of 3553 index files)
2024-05-20T07:05:40+02:00: marked 16% (569 of 3553 index files)
2024-05-20T07:33:52+02:00: marked 17% (605 of 3553 index files)
2024-05-20T07:54:24+02:00: marked 18% (640 of 3553 index files)
2024-05-20T09:45:18+02:00: marked 19% (676 of 3553 index files)
2024-05-20T15:35:15+02:00: marked 20% (711 of 3553 index files)
2024-05-20T16:05:12+02:00: marked 21% (747 of 3553 index files)
2024-05-20T16:13:02+02:00: received abort request ...
2024-05-20T16:13:02+02:00: TASK ERROR: abort requested - aborting task
```
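
To put numbers on the GC log, here is a minimal Python sketch that parses the phase 1 progress lines and linearly extrapolates the total run time. It assumes the log format shown above and a constant marking rate, which the log itself contradicts (1% took almost 10 hours at the start, while 10% to 11% took under 10 minutes), so the projection is only a rough estimate. `estimate_phase1_hours` is a hypothetical helper.

```python
import re
import sys
from datetime import datetime

# Matches lines like "2024-05-16T23:53:49+02:00: marked 1% (36 of 3553 index files)"
MARK_RE = re.compile(r"^(\S+): marked \d+% \((\d+) of (\d+) index files\)")
START_MARKER = "Start GC phase1"


def estimate_phase1_hours(lines):
    """Return (elapsed_hours, projected_total_hours) for GC phase 1.

    Hypothetical helper: assumes a constant marking rate (linear extrapolation).
    """
    start = None
    last = None  # (timestamp, marked, total) from the latest progress line
    for line in lines:
        line = line.strip()
        if start is None and START_MARKER in line:
            # The timestamp is everything before the first ": " separator.
            start = datetime.fromisoformat(line.split(": ", 1)[0])
            continue
        m = MARK_RE.match(line)
        if m:
            last = (datetime.fromisoformat(m.group(1)),
                    int(m.group(2)), int(m.group(3)))
    if start is None or last is None:
        raise ValueError("no phase 1 start or progress lines found")
    ts, marked, total = last
    elapsed_h = (ts - start).total_seconds() / 3600
    return elapsed_h, elapsed_h * total / marked


if __name__ == "__main__":
    with open(sys.argv[1]) as f:
        elapsed, projected = estimate_phase1_hours(f)
    print(f"elapsed {elapsed:.1f} h, projected phase 1 total {projected:.0f} h")
```

Fed the log above, it reports about 97.9 hours elapsed at 747 of 3553 index files and a straight-line projection of roughly 466 hours (about 19 days) for phase 1 alone; given how uneven the rate is, treat that as an order-of-magnitude figure.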

My Hardware:

- Intel(R) Xeon(R) CPU E5-1650 0 @ 3.20GHz

- 16GB RAM

- 8x HUA723030ALA641 - ZFS Pool

- 1x Samsung MZVLW256 - 256GB

- 1x Samsung PM851 - 128GB