Hi there,

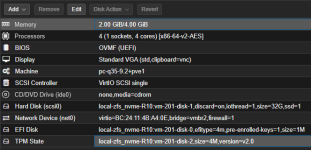

I'm configuring a new Proxmox server (Intel Xeon Gold 6544Y, 384 GB RAM, 2x 10 Gbit NIC) and have run into a problem with network speed, both between VMs on the same host and between VMs and other devices across the network.

Test-Setup:

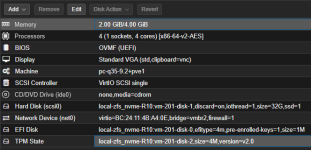

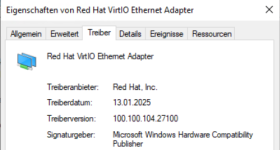

- Two Windows Server 2022 VMs with current VirtIO drivers

- Both attached to a new vmbr2 (no physical links assigned) with static IPs (192.168.44.1 for VM1, .2 for VM2)

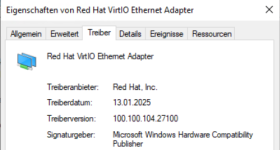

Both VMs use this version of the VirtIO driver from the current VirtIO guest tools ISO:

If I run iperf with default settings (iperf -s on VM1, iperf -c 192.168.44.1 on VM2), I get values between 1 and 2 Gbit/s:

I expected the network speed between VMs on the same node with the VirtIO NIC to be higher (as on VMware with vmxnet3).

I tested:

- "x86-64-v2-AES" and "host" CPU types

- With and without NUMA and CPU/memory hotplug

- Set the MTU on the bridge to 1430, and/or the VM NICs to MTU 1430

- Set Multiqueue on both NICs to the number of cores (4)

- Tested other production VMs that communicate over vmbr0 and the 10G downlinks to our 10G core switch. Same speeds.

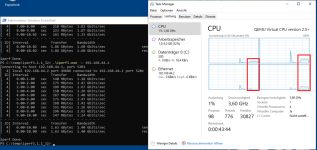

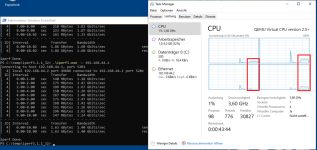

When I test the bandwidth with iperf, CPU usage on the client doesn't go above ~15%:

Does anyone have an idea why the speeds are so slow?

Thanks in advance,

Bastian

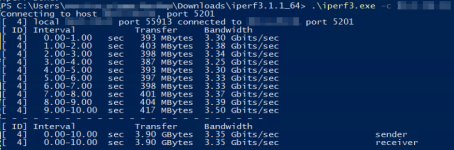

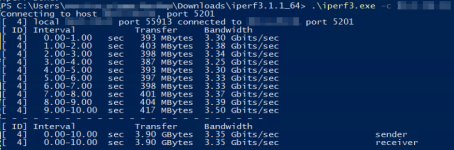

Edit: I ran iperf3 on the Proxmox host itself (as iperf3 server) against a backup server (Windows, iperf3 client). Both are connected via 10G to a 10G switch.

The backup server is not the fastest (Intel E3-1220 v5), but I still don't get anywhere near 10G throughput:

I can't test against another, newer 10G server, and I'm not sure whether this indicates a problem with the Proxmox host itself. Since the slow speeds also show up between two VMs on the same Proxmox host/storage, on a vmbr without assigned physical NICs, the problem should have nothing to do with the physical NICs and switch infrastructure (?!).

Additional info about the Proxmox host:

- Manager version: pve-manager/8.4.1/2a5fa54a8503f96d

- Kernel Linux 6.8.12-11-pve (2025-05-22T09:39Z)

- No-Subscription Repo

- INTEL(R) XEON(R) GOLD 6544Y

- 140/377GB RAM used

- ZFS RAID 10 with 6 enterprise NVMe drives

Not much load:

For reference, this is the iperf3 server output on VM1 from the VM-to-VM test (values between 1 and 2 Gbit/s):

```
Server listening on 5201
-----------------------------------------------------------
Accepted connection from 192.168.44.2, port 49679
[  5] local 192.168.44.1 port 5201 connected to 192.168.44.2 port 49680
[ ID] Interval           Transfer     Bandwidth
[  5]   0.00-1.00   sec   152 MBytes  1.27 Gbits/sec
[  5]   1.00-2.00   sec   152 MBytes  1.27 Gbits/sec
[  5]   2.00-3.00   sec   150 MBytes  1.26 Gbits/sec
[  5]   3.00-4.00   sec   160 MBytes  1.34 Gbits/sec
[  5]   4.00-5.00   sec   211 MBytes  1.77 Gbits/sec
[  5]   5.00-6.00   sec   226 MBytes  1.89 Gbits/sec
[  5]   6.00-7.00   sec   252 MBytes  2.11 Gbits/sec
[  5]   7.00-8.00   sec   216 MBytes  1.81 Gbits/sec
[  5]   8.00-9.00   sec   225 MBytes  1.88 Gbits/sec
[  5]   9.00-10.00  sec   160 MBytes  1.34 Gbits/sec
[  5]  10.00-10.03  sec  1.85 MBytes   567 Mbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth
[  5]   0.00-10.03  sec  0.00 Bytes   0.00 bits/sec   sender
[  5]   0.00-10.03  sec  1.86 GBytes  1.59 Gbits/sec  receiver
```
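As a sanity check on the numbers above, here is a minimal Python sketch that recomputes the receiver summary from the reported transfer size and duration, and compares it with the mean of the ten full one-second intervals. It assumes iperf3 reports transfer sizes with binary prefixes (1 GByte = 1024^3 bytes) and rates with decimal prefixes; the function name `gbits_per_sec` is just illustrative.

```python
# Cross-check of the iperf3 summary line.
# Assumption: transfer sizes use binary prefixes (1 GByte = 1024**3 bytes),
# while rates use decimal prefixes (1 Gbit/s = 1e9 bit/s).

def gbits_per_sec(transfer_gbytes: float, seconds: float) -> float:
    """Convert a transfer in GBytes (binary) over a duration to Gbit/s (decimal)."""
    bits = transfer_gbytes * 1024**3 * 8
    return bits / seconds / 1e9

# Values copied from the receiver summary line in the output above.
summary = gbits_per_sec(1.86, 10.03)

# Per-second rates (Gbit/s) of the ten full intervals in the output above.
intervals = [1.27, 1.27, 1.26, 1.34, 1.77, 1.89, 2.11, 1.81, 1.88, 1.34]
mean_rate = sum(intervals) / len(intervals)

print(f"summary line recomputed: {summary:.2f} Gbit/s")
print(f"mean of 1 s intervals:   {mean_rate:.2f} Gbit/s")
print(f"share of a 10 Gbit/s link: {summary / 10:.0%}")
```

Both figures agree with the 1.59 Gbit/s in the summary line, so the reported average is internally consistent and works out to roughly 16% of what a 10 Gbit/s link could carry.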
