Hello team,

I am on the latest version of Proxmox with the enterprise repo.

We are moving VM disks from ZFS storage to Ceph storage. Each node has 2 enterprise NVMe PCIe 4.0 SSDs (6 SSDs in total), and the nodes are connected over a 100G Ethernet network.

So I/O throughput should be high and should not be the bottleneck.

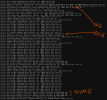

The move gets stuck at "drive-scsi0: mirror-job finished" for a long time, over 30–40 minutes for each disk being moved. Any ideas on how to fix this?
