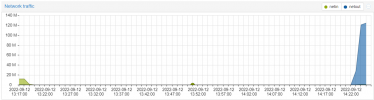

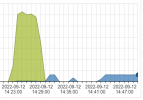

I recently set up three nodes running a fairly small VM, which consists of 3 disks of 25/4/25 GB, so 54 GB in total. Migrating that VM from one node to another tops out at 120 Megabit/s. You can quite clearly see the limitation on the IO graphs.

I can quite happily get 950 Megabit/s between nodes for other data or when doing a backup to a network drive, so why is this bit so slow? There isn't much in the way of contention, but I'd expect migration to take under 10 minutes, not over an hour.
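As a rough sanity check on those figures, here is a back-of-the-envelope calculation. It is only a sketch: it assumes decimal units (1 GB = 8000 megabits), that the full 54 GB is sent uncompressed, and that there is no protocol overhead. The helper name `transfer_minutes` is just something made up for illustration.

```python
def transfer_minutes(size_gb: float, rate_mbit_s: float) -> float:
    """Ideal time in minutes to move size_gb gigabytes at rate_mbit_s megabits/s."""
    megabits = size_gb * 8 * 1000  # assumption: decimal units, 1 GB = 8000 megabits
    return megabits / rate_mbit_s / 60


total_gb = 25 + 4 + 25  # the three disks, 54 GB in total

slow = transfer_minutes(total_gb, 120)  # observed migration rate
fast = transfer_minutes(total_gb, 950)  # rate seen for other traffic between nodes

print(f"{slow:.0f} min at 120 Mbit/s, {fast:.1f} min at 950 Mbit/s")
# prints "60 min at 120 Mbit/s, 7.6 min at 950 Mbit/s"
```

So an hour is about what 120 Megabit/s predicts for the full disk size, and under 10 minutes is what the link should manage at the rate other traffic gets.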

The IO limitation is so flat that it looks exactly as if a bandwidth limit has been set.

I think what it's doing is creating a snapshot, then compressing that (which comes to about 10 GB) and then pushing that over, but the performance is terrible.

I do have replication set up for the nodes, and that only takes a minute or two every 15 minutes with the changes we accumulate, but the initial sync, I think, went similarly slowly.

The nodes do have additional 10 Gbit ports, which I plan to use for replication, but replication isn't using more than 10% of the primary port to start with.

I have looked around and found others noting slow behaviour after the nodes have been running a long time, but these are only a week old, so I don't know what else to check.
