Hi everyone,

I'm new to Proxmox, and my setup is mostly for learning, experimentation, and testing. I'm looking for guidance on why benchmarks of a VM's network-mounted drive on NFS shared storage are so slow, and why I haven't been able to fix it.

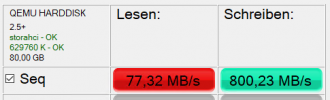

I get around 110 MB/s read and 15 MB/s write on a VM instance with 10Gb shared storage backed by TrueNAS NVMe RAID1 pools. When the VM uses a node's local storage instead, read and write speeds are what you'd expect from RAID1 SSDs (about 500 MB/s both ways).
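For scale, here is a quick conversion of link speeds into the same units as the benchmark figures. It assumes "mb/s" in the benchmark means megabytes per second, and the ceilings are raw line rates that ignore TCP and NFS overhead. `link_ceiling_mb_s` is just an illustrative helper name.

```python
# Assumption: "mb/s" in the benchmark means megabytes per second (10^6 bytes/s).

def link_ceiling_mb_s(gbit_per_s: float) -> float:
    """Raw line rate of a network link in MB/s, ignoring TCP/NFS overhead."""
    return gbit_per_s * 1000 / 8  # 1 Gb/s = 1000 Mb/s = 125 MB/s


observed_mb_s = {"read": 110.0, "write": 15.0}  # VM-on-NFS figures from the benchmark

for gbit in (1, 10):
    ceiling = link_ceiling_mb_s(gbit)
    for op, mb_s in observed_mb_s.items():
        share = mb_s / ceiling
        print(f"{gbit:>2} Gb/s link ({ceiling:.0f} MB/s max): {op} at {share:.1%} of line rate")
```

The read figure works out to 88.0% of a 1 Gb/s line rate but only 8.8% of a 10 Gb/s one, so it may be worth confirming that traffic between the nodes and TrueNAS really travels over the 10Gb NICs.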

A quick rundown of my cluster, network, and storage share setup:

3 Supermicro 1U servers:

- 2 × 24-core CPUs
- 128 GB RAM
- 2 SSDs in RAIDZ1 for local/OS
- 2 SSDs in RAIDZ1 for a ZFS pool
- 10Gb NIC

TrueNAS server:

- MSI gaming motherboard
- Intel i5 CPU
- 16 GB RAM
- 10Gb NIC
- 4 × 1 TB SSDs
- 2 × 1 TB NVMe drives
- No caches set up

Network:

- Ubiquiti Aggregation switch
- All 10Gb OM3 fiber cables
- Only one 10Gb port per NIC in use on each node, including the TrueNAS server

The two NVMe drives in my TrueNAS server are where the VM shared storage in Proxmox is mounted from. They're configured as a RAID1 pool with lz4 compression and shared over NFS.
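One way to narrow down where the time goes is to run a sequential-write test directly on a Proxmox node against the NFS mount, which takes the Windows VM out of the picture. Below is a minimal Python sketch under that assumption; it is not a substitute for a dedicated tool such as fio, and the function name and defaults are hypothetical.

```python
import os
import tempfile
import time


def write_throughput_mb_s(directory: str, total_mib: int = 256,
                          sync_each_chunk: bool = False) -> float:
    """Sequentially write total_mib MiB to a temp file in `directory`; return MB/s.

    sync_each_chunk=True calls fsync() after every 1 MiB chunk, roughly mimicking
    a synchronous-write workload; otherwise data is flushed once at the end.
    """
    # Random bytes, so lz4 compression on the pool cannot shrink the data.
    chunk = os.urandom(1 << 20)
    with tempfile.NamedTemporaryFile(dir=directory) as f:  # deleted on close
        start = time.perf_counter()
        for _ in range(total_mib):
            f.write(chunk)
            if sync_each_chunk:
                f.flush()
                os.fsync(f.fileno())
        # Make sure all data has been committed before stopping the clock.
        f.flush()
        os.fsync(f.fileno())
        elapsed = time.perf_counter() - start
    return total_mib * (1 << 20) / elapsed / 1e6
```

Proxmox mounts NFS storage under `/mnt/pve/<storage-id>`, so a call such as `write_throughput_mb_s("/mnt/pve/<storage-id>")` on a node, repeated with `sync_each_chunk=True` and against a local directory, gives three numbers to compare. A large gap between the two NFS runs would hint that synchronous writes are the limiting factor rather than the network itself.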

Also worth mentioning: when the VM (running Windows 10) is restarted, it takes an extremely long time to boot into Windows, around 15 to 20 minutes.

I can't see where a bottleneck would be coming from, and I'm hoping someone here can point out what the issue might be.
