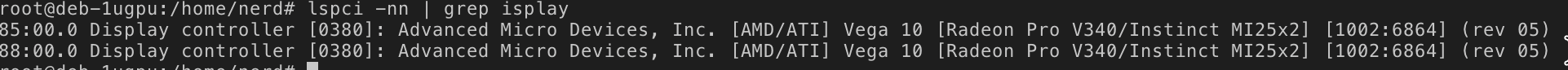

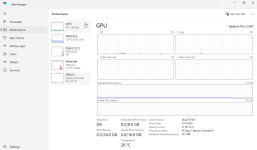

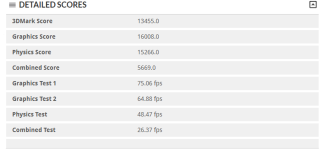

Has anyone used the Radeon Pro V340 with Proxmox? I was hoping to share this card between multiple VMs for VDI. The closest I could find was this:

But that appears to be for an older version of Proxmox, and it uses an older/different AMD card.

When searching the forums, the one thread that did focus on the card seems to end with a question (unresolved?):

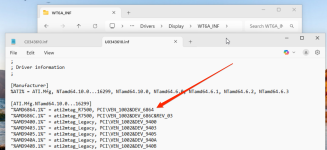

MxGPU-related threads and documentation for VDI-capable GPUs seem to mention only the AMD FirePro S7100X, S7150, and S7150 x2.

Should I use a different card for this workload?
