
It depends on the Ceph version running on both. If you install Octopus (v15) on the external cluster, it could work even if the Ceph cluster runs a newer version (Pacific v16 or Quincy v17), though the closer the versions are, the better. But please upgrade the old cluster, as PVE 6 has been EOL since this summer!
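To make "the closer they are, the better" concrete, here is a minimal Python sketch that maps Ceph version strings to release names and reports how many major releases the client is behind the cluster. The release table and function names are illustrative assumptions, and the sketch only measures the distance between versions; it does not encode an official client/cluster compatibility matrix.

```python
import re
from typing import Tuple

# Ceph major version -> release name (only 12 through 17; extend as needed).
CEPH_RELEASES = {
    12: "luminous",
    13: "mimic",
    14: "nautilus",
    15: "octopus",
    16: "pacific",
    17: "quincy",
}


def ceph_major(version: str) -> int:
    """Return the major number of strings like '16.2.9-pve1' or '15.2.16'."""
    match = re.match(r"\s*(\d+)\.", version)
    if match is None:
        raise ValueError(f"not a Ceph version string: {version!r}")
    return int(match.group(1))


def release_gap(client: str, cluster: str) -> Tuple[str, str, int]:
    """Return (client release, cluster release, cluster major minus client major)."""
    client_major, cluster_major = ceph_major(client), ceph_major(cluster)
    return (
        CEPH_RELEASES.get(client_major, f"unknown ({client_major})"),
        CEPH_RELEASES.get(cluster_major, f"unknown ({cluster_major})"),
        cluster_major - client_major,
    )


if __name__ == "__main__":
    # Octopus client against the Pacific cluster from this thread.
    print(release_gap("15.2.16", "16.2.9-pve1"))  # ('octopus', 'pacific', 1)
```

A gap of 0 or 1 corresponds to the "closer is better" case described above; larger gaps are where problems become more likely.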

Hi aaron,


pveversion of the main Ceph cluster:

Code:

root@xxx1:~# pveversion --verbose
proxmox-ve: 7.1-1 (running kernel: 5.13.19-2-pve)
pve-manager: 7.1-7 (running version: 7.1-7/df5740ad)
pve-kernel-helper: 7.1-6
pve-kernel-5.13: 7.1-5
pve-kernel-5.13.19-2-pve: 5.13.19-4
ceph: 16.2.9-pve1
ceph-fuse: 16.2.9-pve1
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.22-pve2
libproxmox-acme-perl: 1.4.0
libproxmox-backup-qemu0: 1.2.0-1
libpve-access-control: 7.1-5
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.0-14
libpve-guest-common-perl: 4.0-3
libpve-http-server-perl: 4.0-4
libpve-storage-perl: 7.0-15
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.11-1
lxcfs: 4.0.11-pve1
novnc-pve: 1.2.0-3
proxmox-backup-client: 2.1.2-1
proxmox-backup-file-restore: 2.1.2-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.4-4
pve-cluster: 7.1-2
pve-container: 4.1-2
pve-docs: 7.1-2
pve-edk2-firmware: 3.20210831-2
pve-firewall: 4.2-5
pve-firmware: 3.3-3
pve-ha-manager: 3.3-1
pve-i18n: 2.6-2
pve-qemu-kvm: 6.1.0-3
pve-xtermjs: 4.12.0-1
qemu-server: 7.1-4
smartmontools: 7.2-1
spiceterm: 3.2-2
swtpm: 0.7.0~rc1+2
vncterm: 1.7-1
zfsutils-linux: 2.1.1-pve3

pveversion of the node that I want to share with:

Code:

root@xxx2:~# pveversion --verbose
proxmox-ve: 6.3-1 (running kernel: 5.4.106-1-pve)
pve-manager: 6.4-4 (running version: 6.4-4/337d6701)
pve-kernel-5.4: 6.4-1
pve-kernel-helper: 6.4-1
pve-kernel-5.4.106-1-pve: 5.4.106-1
ceph-fuse: 12.2.11+dfsg1-2.1+b1
corosync: 3.1.2-pve1
criu: 3.11-3
glusterfs-client: 5.5-3
ifupdown: 0.8.35+pve1
ksm-control-daemon: 1.3-1
libjs-extjs: 6.0.1-10
libknet1: 1.20-pve1
libproxmox-acme-perl: 1.0.8
libproxmox-backup-qemu0: 1.0.3-1
libpve-access-control: 6.4-1
libpve-apiclient-perl: 3.1-3
libpve-common-perl: 6.4-2
libpve-guest-common-perl: 3.1-5
libpve-http-server-perl: 3.2-1
libpve-storage-perl: 6.4-1
libqb0: 1.0.5-1
libspice-server1: 0.14.2-4~pve6+1
lvm2: 2.03.02-pve4
lxc-pve: 4.0.6-2
lxcfs: 4.0.6-pve1
novnc-pve: 1.1.0-1
proxmox-backup-client: 1.1.5-1
proxmox-mini-journalreader: 1.1-1
proxmox-widget-toolkit: 2.5-3
pve-cluster: 6.4-1
pve-container: 3.3-5
pve-docs: 6.4-1
pve-edk2-firmware: 2.20200531-1
pve-firewall: 4.1-3
pve-firmware: 3.2-2
pve-ha-manager: 3.1-1
pve-i18n: 2.3-1
pve-qemu-kvm: 5.2.0-6
pve-xtermjs: 4.7.0-3
qemu-server: 6.4-1
smartmontools: 7.2-pve2
spiceterm: 3.1-1
vncterm: 1.6-2
zfsutils-linux: 2.0.4-pve1

Do I need to install Ceph packages on my Proxmox 6.4?

I'm aware of this; I'll try to upgrade to Proxmox 7 next month.


The client on the old cluster is definitely too old. I would configure the Ceph Octopus repository on those servers and then install the latest updates. This will install the newer Ceph packages from the Octopus repo needed to connect as a client.
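To check which Ceph client version a node really has after switching repositories, the `name: version` lines printed by `pveversion --verbose` can be parsed. A minimal sketch, assuming the output format shown earlier in this thread; the helper names are hypothetical. It prefers a `ceph` entry and falls back to `ceph-fuse`, because the PVE 6.4 node above only lists the latter.

```python
import re
from typing import Dict, Optional

# 'package: version ...' as printed by `pveversion --verbose`. The prompt line
# ('root@host:~# ...') does not match because '@' is not allowed in the name.
LINE_RE = re.compile(r"^([A-Za-z0-9][A-Za-z0-9.+_-]*):\s+(\S+)")


def parse_pveversion(output: str) -> Dict[str, str]:
    """Map package names to the first version token on their line."""
    packages: Dict[str, str] = {}
    for line in output.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            packages[match.group(1)] = match.group(2)
    return packages


def ceph_client_version(packages: Dict[str, str]) -> Optional[str]:
    """Prefer the 'ceph' entry; fall back to 'ceph-fuse'; None if neither exists."""
    return packages.get("ceph") or packages.get("ceph-fuse")


if __name__ == "__main__":
    sample = """root@xxx2:~# pveversion --verbose
proxmox-ve: 6.3-1 (running kernel: 5.4.106-1-pve)
pve-manager: 6.4-4 (running version: 6.4-4/337d6701)
ceph-fuse: 12.2.11+dfsg1-2.1+b1
"""
    print(ceph_client_version(parse_pveversion(sample)))  # 12.2.11+dfsg1-2.1+b1
```

Running it against the full output before and after the repository change shows whether the client moved from 12.x (Luminous) to 15.x (Octopus).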

Hi aaron, thanks for the reply.

I've updated my client node and now it's running on ceph version 15.2.16 (octopus)

right now i'm trying to integrate my ceph cluster rbd to my client node using this tutorial

https://knowledgebase.45drives.com/kb/kb450244-configuring-external-ceph-rbd-storage-with-proxmox/

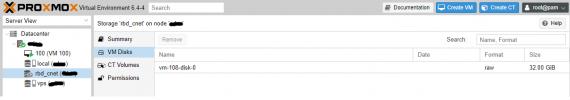

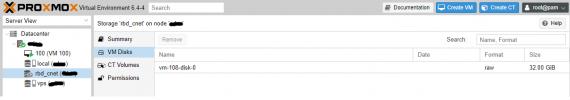

But when i tried to add the storage the disk is showing question mark and lagging (loading pop-up) and the disk details is not showing up

i also tried to create vm on my main ceph cluster and it seems that my client node detects it

this is the log journal of the client node

what am i missing right now ?

I've updated my client node and now it's running on ceph version 15.2.16 (octopus)

Code:

root@xxx2~# ceph -v

ceph version 15.2.16 (bf2dd02a9cb15dc7653698c30c498f0b3b3f19a6) octopus (stable)right now i'm trying to integrate my ceph cluster rbd to my client node using this tutorial

https://knowledgebase.45drives.com/kb/kb450244-configuring-external-ceph-rbd-storage-with-proxmox/

But when i tried to add the storage the disk is showing question mark and lagging (loading pop-up) and the disk details is not showing up

i also tried to create vm on my main ceph cluster and it seems that my client node detects it

this is the log journal of the client node

Code:

Sep 14 10:08:02 xxx2 pvestatd[70259]: got timeout
Sep 14 10:08:02 xxx2 pvestatd[70259]: status update time (5.063 seconds)
Sep 14 10:08:06 xxx2 pvedaemon[70248]: got timeout
Sep 14 10:08:12 xxx2 pvestatd[70259]: got timeout
Sep 14 10:08:12 xxx2 pvestatd[70259]: status update time (5.057 seconds)
Sep 14 10:08:22 xxx2 pvestatd[70259]: got timeout
Sep 14 10:08:22 xxx2 pvestatd[70259]: status update time (5.050 seconds)
Sep 14 10:08:23 xxx2 pvedaemon[70246]: got timeout
Sep 14 10:08:26 xxx2 pvedaemon[70247]: got timeout
Sep 14 10:08:32 xxx2 pvestatd[70259]: got timeout
Sep 14 10:08:32 xxx2 pvestatd[70259]: status update time (5.055 seconds)
Sep 14 10:08:36 xxx2 pvedaemon[70248]: got timeout
Sep 14 10:08:42 xxx2 pvestatd[70259]: got timeout
Sep 14 10:08:42 xxx2 pvestatd[70259]: status update time (5.054 seconds)
Sep 14 10:08:52 xxx2 pvedaemon[70247]: got timeout
Sep 14 10:08:52 xxx2 pvestatd[70259]: got timeout
Sep 14 10:08:52 xxx2 pvestatd[70259]: status update time (5.058 seconds)

What am I missing right now?
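The journal excerpt repeats two patterns: `got timeout` from pvestatd and pvedaemon, and pvestatd status updates taking just over 5 seconds. For a longer journal (for example one exported with `journalctl -u pvestatd -u pvedaemon`), a small parser makes the pattern easier to see. A minimal sketch, assuming the syslog-style line format shown above; the regexes and the function name are hypothetical, and the sketch only counts messages, it does not diagnose their cause.

```python
import re
from collections import Counter
from typing import List, Tuple

# Syslog-style journal line, e.g.
# 'Sep 14 10:08:02 xxx2 pvestatd[70259]: got timeout'
ENTRY_RE = re.compile(r"^\w{3}\s+\d+\s+[\d:]+\s+\S+\s+(\w+)\[\d+\]:\s+(.*)$")
STATUS_RE = re.compile(r"status update time \(([\d.]+) seconds\)")


def summarize(log: str) -> Tuple[Counter, List[float]]:
    """Count 'got timeout' messages per daemon and collect status update times."""
    timeouts: Counter = Counter()
    update_times: List[float] = []
    for line in log.splitlines():
        match = ENTRY_RE.match(line.strip())
        if match is None:
            continue  # not a journal entry in the expected format
        daemon, message = match.groups()
        if message.strip() == "got timeout":
            timeouts[daemon] += 1
        status = STATUS_RE.search(message)
        if status:
            update_times.append(float(status.group(1)))
    return timeouts, update_times
```

Fed the 17 lines above, it should count 6 `got timeout` messages for pvestatd and 5 for pvedaemon, with status update times between 5.050 and 5.063 seconds.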


So you placed a keyring file with enough permissions at /etc/pve/priv/ceph/<storage name>.keyring and created the storage config, in which you configured the list of IPs of the monitor nodes of the target cluster?

Can you reach all nodes in the target cluster that run any Ceph service?
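For reference, an external RBD storage in /etc/pve/storage.cfg is an `rbd:` section whose storage ID also names the keyring file at /etc/pve/priv/ceph/<storage name>.keyring. A minimal Python sketch that renders such a section, assuming the tab-indented storage.cfg layout and the `monhost`, `pool`, `content`, `krbd`, and `username` properties; the pool name and monitor IPs in the example are placeholders (only `rbd_cnet` appears in this thread), and the function names are hypothetical.

```python
from typing import Dict, List


def rbd_storage_entry(storage_id: str, monhosts: List[str], pool: str,
                      username: str = "admin", content: str = "images",
                      krbd: bool = False) -> str:
    """Render an 'rbd:' section in the tab-indented storage.cfg layout."""
    if not monhosts:
        raise ValueError("at least one monitor address is required")
    options: Dict[str, str] = {
        "content": content,
        "krbd": "1" if krbd else "0",
        "monhost": " ".join(monhosts),  # space-separated monitor IPs
        "pool": pool,
        "username": username,
    }
    lines = [f"rbd: {storage_id}"]
    lines += [f"\t{key} {value}" for key, value in options.items()]
    return "\n".join(lines) + "\n"


def keyring_path(storage_id: str) -> str:
    """Keyring location for an external cluster, named after the storage ID."""
    return f"/etc/pve/priv/ceph/{storage_id}.keyring"


if __name__ == "__main__":
    # Placeholder IPs and pool; 'rbd_cnet' is the storage name used in this thread.
    print(rbd_storage_entry("rbd_cnet", ["10.0.0.1", "10.0.0.2", "10.0.0.3"], "rbd"))
    print(keyring_path("rbd_cnet"))
```

The rendered section can be compared with the entry the GUI created; the property order in a real storage.cfg may differ.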

Hi aaron, sorry for the late answer. I'm currently working on another project.

About the keyring and storage config: yes, I copied /etc/ceph/ceph.conf and /etc/pve/priv/ceph.client.admin.keyring to my target cluster (via scp from the main cluster to the target cluster). I put ceph.conf and the keyring under the /etc/pve/priv/ceph/ directory.

About reachability: yes, my target cluster can reach all nodes of my main Ceph cluster, and my main cluster can reach the target.
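A working ping does not necessarily mean the Ceph ports are open. A minimal sketch that tries plain TCP connections to each monitor, assuming the default monitor ports 3300 (msgr2) and 6789 (msgr1); OSDs listen on further ports (6800-7300 by default) that this sketch does not probe, and the function names are hypothetical.

```python
import socket
from typing import Dict, Iterable, Tuple

# Default Ceph monitor ports: 3300 (messenger v2) and 6789 (messenger v1).
MON_PORTS = (3300, 6789)


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_monitors(hosts: Iterable[str], ports: Iterable[int] = MON_PORTS,
                   timeout: float = 2.0) -> Dict[Tuple[str, int], bool]:
    """Probe every (host, port) pair and report whether it accepted a connection."""
    port_list = tuple(ports)
    return {(h, p): port_open(h, p, timeout) for h in hosts for p in port_list}
```

Run from the client node, for example `check_monitors(["10.0.0.1", "10.0.0.2"])` (placeholder IPs; use the monhost list of the Ceph cluster) returns a dict mapping each (host, port) pair to True or False.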


By the way, I just realized that the file permissions differ. On my main Ceph cluster:

Code:

root@ceph1:~# ls -la /etc/ceph/ceph.conf
lrwxrwxrwx 1 root root 18 Jul 21 18:37 /etc/ceph/ceph.conf -> /etc/pve/ceph.conf
root@ceph1:~# ls -la /etc/pve/priv/ceph.client.admin.keyring
-rw------- 1 root www-data 151 Jun 19 16:49 /etc/pve/priv/ceph.client.admin.keyring

On my target node:

Code:

root@target:~# ls -la /etc/pve/priv/ceph/
total 1
drwx------ 2 root www-data 0 Sep 9 09:04 .
drwx------ 2 root www-data 0 Sep 8 16:53 ..
-rw------- 1 root www-data 909 Sep 14 09:56 rbd_cnet.conf
-rw------- 1 root www-data 151 Sep 14 09:56 rbd_cnet.keyring

The .conf file has different permissions than on my main Ceph cluster, but when I try to change them using chmod 777 /etc/pve/priv/ceph/rbd_cnet.conf, it shows:

Code:

root@target:~# chmod 777 /etc/pve/priv/ceph/rbd_cnet.conf
chmod: changing permissions of '/etc/pve/priv/ceph/rbd_cnet.conf': Operation not permitted

Any idea, anyone?
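On the main cluster, /etc/ceph/ceph.conf is a symlink to /etc/pve/ceph.conf. The `lrwxrwxrwx` that `ls -la` prints belongs to the link itself; on Linux a symlink's own mode bits are not used for access checks, so the mode that matters is that of the file it points to. Also, /etc/pve is the Proxmox cluster file system (pmxcfs), which sets ownership and permissions itself (files under /etc/pve/priv/ are root-only), which is why chmod is refused there. A minimal sketch comparing the link's mode with its target's mode via `os.lstat` and `os.stat`; the function name is hypothetical.

```python
import os
import stat
from typing import Tuple


def link_and_target_modes(path: str) -> Tuple[str, str]:
    """Return (mode of path itself, mode of what it resolves to) as ls-style strings."""
    own = stat.filemode(os.lstat(path).st_mode)      # lstat does not follow symlinks
    resolved = stat.filemode(os.stat(path).st_mode)  # stat follows symlinks
    return own, resolved
```

On the main cluster, `link_and_target_modes("/etc/ceph/ceph.conf")` would return the link's `lrwxrwxrwx` together with the actual mode of /etc/pve/ceph.conf, which is the value worth comparing with rbd_cnet.conf.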
