Hello Proxmox Community,

I'm reaching out for advice on an LVM disk space reallocation issue. I'm trying to optimize my disk usage on my Proxmox host and avoid using a Live USB for a specific task if possible.

My Storage Journey and Current Goal:

I initially set up Proxmox on a small 60GB NVMe drive (a 2230 model, salvaged from a Steam Deck!). I quickly realized this was insufficient for my plans, even with additional large HDDs (3TB and 14TB) mounted as storage. To gain more flexibility for VMs and containers, I recently cloned my entire Proxmox OS to a larger 512GB NVMe drive.

Now, with the larger NVMe, I want to fully utilize this space for new containers (like Batocera and Home Assistant) and existing VMs. My current goal is to shrink my pve-root Logical Volume (which hosts /) from its current excessive size down to a more reasonable 50GB. The substantial amount of space freed (around 400GB) will then be used to extend my pve-data Logical Volume (which backs the local-lvm storage for VMs/CTs). I aim to add approximately 380GB to pve-data to provide ample fast storage for my virtual machines.
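As a rough sanity check of that space budget, here is a minimal sketch of the arithmetic. It assumes the 444GB current size of pve-root quoted later in this post; the variable names are only illustrative, and real LVM sizes are allocated in extents, so the exact numbers will differ slightly.

```shell
#!/usr/bin/env bash
# Sizes in GB, taken from the approximate figures quoted in this post.
root_current_gb=444   # current size of pve-root
root_target_gb=50     # desired size of pve-root
data_extend_gb=380    # amount I plan to add to pve-data

# Space released by shrinking pve-root, and what remains after growing pve-data.
freed_gb=$(( root_current_gb - root_target_gb ))
leftover_gb=$(( freed_gb - data_extend_gb ))

echo "freed by shrinking pve-root: ${freed_gb}GB"
echo "left unallocated in the VG:  ${leftover_gb}GB"
```

With these figures, shrinking frees 394GB and extending pve-data by 380GB leaves about 14GB unallocated in the volume group as a margin.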

My System Specifications:

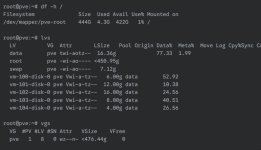

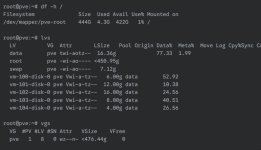

- Proxmox VE Version: PVE 8.x (kernel 6.8.12-9-pve)

- CPU: Intel Core i3-6100

- RAM: 16GB

- Storage: 512GB NVMe (Proxmox OS on /dev/nvme0n1p3, which is the PV for pve VG), 3TB HDD (/mnt/pve/RED3TB), 14TB HDD (/mnt/pve/Toshiba14TB).

- All VMs/CTs are currently shut down, and full backups have been successfully created on my 14TB disk for safety.

As you can see, pve-root is vastly oversized (444GB with only 1% used), but VFree in the pve Volume Group is 0. This indicates that all space is currently allocated to existing Logical Volumes (primarily pve-root and pve-data). My pve-data (local-lvm) only has about 3.7GB free (16.36GB * 0.2267).

The Problems Encountered (Online Shrinking & GParted Limitation):

- Online resize2fs failure: When attempting to shrink the ext4 filesystem on pve-root while Proxmox is running:

Code (Bash): resize2fs /dev/mapper/pve-root 50G

I receive the error:

Code: resize2fs: On-line shrinking not supported

This confirms that shrinking an ext4 filesystem (especially the root filesystem /) requires it to be unmounted, meaning the system cannot be running from it.

- GParted Live USB limitation: I then tried booting from a GParted Live USB. While GParted correctly identified /dev/nvme0n1 and its LVM Physical Volume nvme0n1p3, it did not expose the individual Logical Volumes (like pve-root and pve-data) for direct graphical manipulation or resizing within its interface. It only showed the lvm2 pv pve container, not its contents. This means GParted cannot be used to perform the necessary resize2fs on pve-root and the subsequent lvreduce/lvextend operations on the LVs, right?

The offline route I am hoping to avoid, using an Ubuntu Live USB, would be:

- Shut down the Proxmox server.

- Boot from the Ubuntu Live USB.

- Manually activate the LVM Volume Group.

- Unmount /dev/mapper/pve-root.

- Perform the resize2fs operation offline to shrink the filesystem to 50GB.

- Then, use lvreduce on pve-root and lvextend on pve-data.
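The steps above could be sketched roughly as follows. This is a dry-run sketch under stated assumptions, not a tested procedure: it assumes a live environment with the LVM and e2fsprogs tools installed, the default Proxmox names (VG pve, LVs root and data), and that pve-root is not mounted. The `run` and `offline_resize` helpers and the `DRY_RUN` variable are hypothetical names introduced only for this illustration. It also swaps the separate resize2fs and lvreduce steps for `lvreduce --resizefs`, which shrinks the filesystem and the LV in one operation so the two sizes cannot get out of sync.

```shell
#!/usr/bin/env bash
# Dry-run sketch of the offline resize described above.
# Intended for a live environment, never for the running Proxmox host.
# With DRY_RUN=1 (the default) the commands are only printed, not executed.
set -euo pipefail

VG="pve"            # volume group name, from this post
ROOT_LV="root"      # pve-root
DATA_LV="data"      # pve-data (thin pool behind local-lvm)
ROOT_SIZE="50G"     # target size for pve-root
DATA_GROW="+380G"   # amount to add to pve-data

# run: print the command in dry-run mode, execute it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

offline_resize() {
  # Activate the volume group so /dev/pve/* appears in the live environment.
  run vgchange -ay "$VG"
  # The root LV must not be mounted here; force a filesystem check before shrinking.
  run e2fsck -f "/dev/$VG/$ROOT_LV"
  # Shrink the ext4 filesystem and the LV together.
  run lvreduce --resizefs -L "$ROOT_SIZE" "$VG/$ROOT_LV"
  # Hand the freed extents to the pve-data thin pool.
  run lvextend -L "$DATA_GROW" "$VG/$DATA_LV"
}

offline_resize
```

Running it as-is only prints the four commands for review; setting `DRY_RUN=0` would execute them as root. Since pve-data is a thin pool, its metadata volume may also need to grow (lvextend has a `--poolmetadatasize` option for that), which this sketch does not attempt.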

My Question to the Community:

Given that resize2fs doesn't support online shrinking for ext4 and GParted couldn't directly manipulate the LVs, are there any alternative methods to shrink the pve-root filesystem (and then the LV) online or with minimal/no interruption beyond a simple reboot (i.e., avoiding booting from a Live USB)? I'm looking for the least disruptive way to achieve this LVM reallocation.

P.S. Downtime is not a problem for me, but I would like to avoid destroying everything I've set up so far.

Any advice or alternative strategies would be greatly appreciated! Thank you.
