I have a Proxmox host with 4 NICs. The first NIC is nic0, which is the bridge port for vmbr0, and vmbr0 has the CIDR 192.168.50.40/24. I can access the web UI and SSH into the host fine.

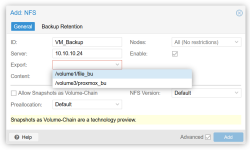

I then configured nic1 as the bridge port for vmbrWS, with a CIDR of 10.10.10.40/24 and no default gateway, because I want the host to access an NFS share on the 10.10.10.x network.

nic0 is physically connected to the 192.168.50.x switch, and nic1 is physically connected to the 10.10.10.x switch.

As soon as I apply the settings, I lose web UI and SSH access on 192.168.50.40, but I can then reach both through 10.10.10.40.

But I want the web UI and the cluster QDevice on the 192.168.50.x network (which is for servers).
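To reason about which interface the host uses for a given destination, here is a minimal Python sketch of longest-prefix-match route selection over the routes implied by the setup above. It is a simplification (it ignores route metrics and policy routing), and the default gateway 192.168.50.1 plus the example destination addresses are hypothetical, since the post does not state them.

```python
import ipaddress

# Hypothetical model of the host's main IPv4 routing table, based on the
# addressing in the post. The default gateway 192.168.50.1 on vmbr0 is an
# assumption; the post does not say which gateway vmbr0 uses.
ROUTES = [
    ("192.168.50.0/24", "vmbr0"),   # connected route from 192.168.50.40/24
    ("10.10.10.0/24", "vmbrWS"),    # connected route from 10.10.10.40/24
    ("0.0.0.0/0", "vmbr0"),         # default route via the assumed gateway
]


def egress_interface(destination: str) -> str:
    """Return the interface chosen by longest-prefix match (no metrics/policy rules)."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), dev)
        for prefix, dev in ROUTES
        if dest in ipaddress.ip_network(prefix)
    ]
    # The most specific (longest) matching prefix wins.
    _, dev = max(matches, key=lambda m: m[0].prefixlen)
    return dev


if __name__ == "__main__":
    # Hypothetical destinations: a server LAN host, an NFS server, an off-LAN client.
    for dest in ["192.168.50.10", "10.10.10.5", "172.16.0.20"]:
        print(dest, "->", egress_interface(dest))
```

One thing this model makes visible: with only the main routing table, the outgoing interface depends on the destination, not on which local address a client connected to. So if the machine running the browser or SSH client itself sits on 10.10.10.x, replies from 192.168.50.40 would leave through vmbrWS rather than vmbr0.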

I read that there are ARP settings for multi-homed hosts and tried changing some of them, without luck.
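For reference when comparing before/after values, a minimal Python sketch that reads the per-interface Linux sysctls usually meant by "ARP settings" for multi-homed hosts (`arp_ignore`, `arp_announce`, `arp_filter`), plus `rp_filter` for reverse-path filtering, from `/proc/sys/net/ipv4/conf`. Which of these settings the guides in question referred to is an assumption.

```python
from pathlib import Path
from typing import Dict, Iterable, Optional

# Per-interface IPv4 sysctls commonly discussed for multi-homed Linux hosts.
# Assumption: these are the "ARP settings" meant; the post does not name them.
SETTINGS = ("arp_ignore", "arp_announce", "arp_filter", "rp_filter")


def read_arp_settings(
    interfaces: Iterable[str],
    base: Path = Path("/proc/sys/net/ipv4/conf"),
) -> Dict[str, Dict[str, Optional[int]]]:
    """Return {interface: {setting: value}}, with None where a file is missing."""
    result: Dict[str, Dict[str, Optional[int]]] = {}
    for iface in interfaces:
        values: Dict[str, Optional[int]] = {}
        for name in SETTINGS:
            path = base / iface / name
            try:
                values[name] = int(path.read_text().strip())
            except FileNotFoundError:
                values[name] = None  # interface or setting not present
        result[iface] = values
    return result


if __name__ == "__main__":
    # "all" holds the global values, which the kernel combines with the
    # per-interface ones when deciding the effective behavior.
    for iface, values in read_arp_settings(["all", "vmbr0", "vmbrWS"]).items():
        print(iface, values)
```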

So, how can I keep the web UI, SSH, and cluster devices all on the 192.168.50.x network (vmbr0 and nic0), but still have the Proxmox host access an NFS share on the 10.10.10.x network (vmbrWS, nic1, CIDR 10.10.10.40/24 with no default gateway)?

Note: When I had Linux bridges like vmbrWS set up with no CIDR, it worked great for VMs. I could give a VM two network cards, each tied to a different network, and the VM could communicate with both networks. It's only the Proxmox host talking to more than one network that gives me a problem.

Thanks in advance.