Hi everyone,

Could you help me understand something about my Proxmox Backup Server?

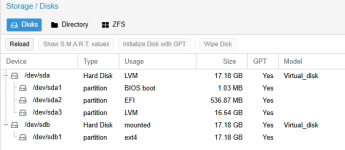

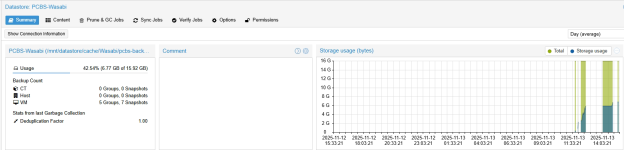

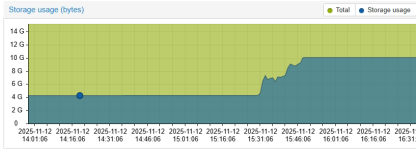

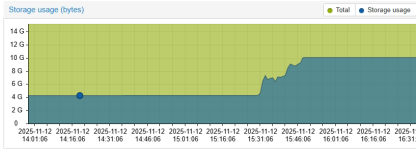

I upgraded it to 4.0 so I could connect it to an S3 endpoint, and I ran my first backup to it. However, I noticed that the space used on my local storage has increased.

I can see the backups in the bucket, so I assume I set up my datastore correctly.

Thanks for your help.