Hello,

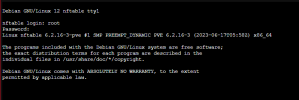

I'm trying to run nftables to do some routing inside an LXC container, but I keep getting this error:

audit: type=1400 audit(1711923842.917:224): apparmor="DENIED" operation="mount" class="mount" info="failed perms check" error=-13 profile="lxc-2000_</var/lib/lxc>" name="/run/systemd/unit-root/" pid=429132 comm="(nft)" srcname="/" flags="rw, rbind"

Running nftables commands does work: I was able to add rules, and I've tested that they behave as expected inside the container.

nftables seems to be installed by default in the Debian 12 LXC container image, so I'm not sure why this isn't working out of the box. In any case, what would be the most sensible way to solve this?
