This post is not directly related to Proxmox, but since my server runs version 8.0 and every step was executed in Proxmox VMs, I thought it was worth sharing. If it does not belong here, let me know and I will move it to the appropriate forum.

I want to share this “how to” for anyone who has mistakenly removed the partitions from 3x HDs in a ZFS RAIDZ1 configuration and wants to get them back without recreating the pool from scratch and restoring a backup.

Credits to user2209743 from https://askubuntu.com/questions/1373040/recovering-data-from-zfs-pool-with-deleted-partition-tables who gave me some light at the end of the tunnel.

Proceed at your own risk, I am just sharing my experience.

Remember that backing up your data is your best friend above all, but sometimes things happen, and this procedure let me recover some precious recent data that had not been backed up yet...

How the partitions ended up being deleted:

I was about to install Windows 11 on an external NVMe PCI Express drive while the ZFS disks were connected too and (do not ask why, I was doing several things in parallel...) I mistakenly removed all partitions from the 3 ZFS disks. As soon as I realized it, I immediately stopped the server and disconnected the disks before doing anything else.

NOTE: the process below may not apply if the partitions were removed in a different way, but a lab environment can help for sure...

Play with a lab / testing environment:

I created a lab / test VM to recreate a similar scenario, with 3 fake virtual drives of 5GB each, so I could go by trial and error (using snapshots to go back in time) and make sure I could get the partitions back before touching my real hard disks. This was key to getting comfortable and confident with the Linux commands and programs used to recover the partitions.

Once the tests were finished, I clearly saw 2 ways to proceed:
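A minimal sketch of one way to build such a lab without extra hardware. It is an assumption, not what the post used (the post used virtual drives attached to a VM): sparse image files stand in for the three disks. The function name `make_lab_disks` and the file names are hypothetical.

```shell
# Lab sketch: create sparse image files that stand in for the ZFS disks.
# Assumption: file-backed images instead of the VM virtual drives from the post.
make_lab_disks() {
    # $1 = directory, $2 = size as accepted by truncate (e.g. 5G), $3 = disk count
    local dir="$1" size="$2" count="$3" i
    mkdir -p "$dir"
    for i in $(seq 1 "$count"); do
        # A sparse file takes no real space until something is written to it.
        truncate -s "$size" "$dir/disk$i.img"
    done
}
```

For example, `make_lab_disks ./zfs-lab 5G 3` would create three 5GB images. As root, each image could then be attached with `losetup -f --show <image>`, which prints the loop device to use in place of /dev/sdX.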

- Recommended: get an extra disk and dump the data from the 3x ZFS disks to 3x images using dd. This guarantees you can get your data back if something goes wrong. In my case I only had space to dump 1 disk and, although the risk is higher, I felt comfortable with only 1 dump because raidz1 allows one disk to fail, plus all the testing done in my lab.

- Higher risk (to avoid): do not dump any data and use one of the ZFS disks to obtain the partition sectors from a new zpool creation. If you are lucky, you can recover the partitions on the other 2 disks and, in degraded state, resilver the 3rd one (the one used to get the partition sector numbers).
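Since the recommended option depends on the dump being trustworthy, here is a hedged sketch of a dd wrapper that refuses to overwrite an existing target and then compares the image with its source. The function name `dump_and_verify` is hypothetical, and on a 4TB disk the comparison means reading the whole disk a second time.

```shell
# Sketch: refuse to overwrite an existing target, then dump and verify.
dump_and_verify() {
    # $1 = source (disk or file to read), $2 = image file to create
    local src="$1" dst="$2"
    if [ -e "$dst" ]; then
        # A block device such as /dev/sdb always exists, so swapping
        # "if" and "of" by mistake is caught here.
        echo "refusing: $dst already exists" >&2
        return 1
    fi
    dd if="$src" of="$dst" bs=1M status=none || return 1
    # cmp exits non-zero at the first differing byte.
    cmp "$src" "$dst"
}
```

It is worth trying this on a lab image first; a call would look like `dump_and_verify /dev/sdb /media/8TB/zfs1dump4TB.img`, using the same paths as in the steps.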

Steps followed:

- 2 of the 3 ZFS drives were disconnected from the server (to avoid any further data damage/change)

- Get information about the connected ZFS disk and the external drive using fdisk

Bash:

fdisk -l

- Dump the data to an image on an external drive

Bash:

dd if=/dev/sdb of=/media/8TB/zfs1dump4TB.img bs=1024k status=progress
# IMPORTANT: "if" is the source (the connected disk) and "of" is the destination (the image on the external drive that backs up the content). Do not mix them up!

- Once the dump is complete (it can take some hours), use the source ZFS disk to create a single-disk zpool

Bash:

zpool create seagatedisks -f -o ashift=12 /dev/sdb

NOTE: it looks like when you use the whole disk, the partitions are created identically regardless of the layout (raidz1, single, mirror, ...): the start and end sectors are the same

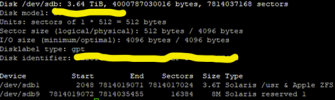

- Run “fdisk -l” again to show the partitions that were created

- Restore the dumped data back to the source ZFS disk

Bash:

dd if=/media/8TB/zfs1dump4TB.img of=/dev/sdb bs=1024k status=progress

- With the data restored to the connected ZFS disk, create the partitions using the information from the previous image (start and end sectors). Double-check that the start and end sectors are correct, as well as the destination device.

Bash:

sgdisk -n1:2048:7814019071 -t1:BF01 -n9:7814019072:7814035455 -t9:BF07 /dev/sdb

NOTE: BF01 is the “Solaris /usr & Apple ZFS” type and BF07 is “Solaris reserve 1”. n1 stands for partition 1 (sdb1) and n9 for partition 9 (sdb9).

- Try to import the pool. The pool will be reported as unavailable, but the output should show that one ZFS disk was found to import while the other 2 are “unavailable”

Bash:

zpool import

NOTE: sometimes it can show “nothing to import”, but if you repeat the command the pool eventually appears. This is the first important milestone of the process.

- Connect one of the other 2 ZFS drives again, identify the devices with “fdisk -l” and create the partitions (IMPORTANT: this assumes all drives have exactly the same number of sectors. You can confirm this with fdisk too. If they had been different, the process would have changed, and I would have recreated that scenario in my lab with different disk sizes to play around):

Bash:

sgdisk -n1:2048:7814019071 -t1:BF01 -n9:7814019072:7814035455 -t9:BF07 /dev/sda

zpool import

NOTE: the message should say the pool is in degraded state, and you can now access your data. At this point it is up to you whether to recover the data directly in degraded state or to go on and create the partitions on the 3rd disk before recovering your data. I ended up doing the second option, since I did not want to recover in degraded state when the 2 previous disks had worked, but...

- Connect the 3rd disk and create the partitions:

Bash:

sgdisk -n1:2048:7814019071 -t1:BF01 -n9:7814019072:7814035455 -t9:BF07 /dev/sdc

zpool import

All disks must appear online and healthy
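As a cross-check of the sector numbers used in the sgdisk commands above, the sketch below computes the partition sizes and prints (without running) the command for each device. The 512-byte sector size is an assumption to confirm against the fdisk output, and the helper names `sectors` and `sgdisk_cmd` are hypothetical.

```shell
# Sector layout taken from the sgdisk commands in the steps above.
P1_START=2048
P1_END=7814019071
P9_START=7814019072
P9_END=7814035455
SECTOR_BYTES=512   # assumption: 512-byte logical sectors; confirm with fdisk -l

sectors() {
    # Size of an inclusive sector range: end - start + 1
    echo $(( $2 - $1 + 1 ))
}

sgdisk_cmd() {
    # Print (do not run) the sgdisk command for device $1.
    echo "sgdisk -n1:${P1_START}:${P1_END} -t1:BF01 -n9:${P9_START}:${P9_END} -t9:BF07 $1"
}

echo "partition 1: $(sectors "$P1_START" "$P1_END") sectors"
echo "partition 9: $(( $(sectors "$P9_START" "$P9_END") * SECTOR_BYTES / 1024 / 1024 )) MiB"
for dev in /dev/sda /dev/sdb /dev/sdc; do
    sgdisk_cmd "$dev"
done
```

Partition 9 spans 16384 sectors, which is 8 MiB at 512 bytes per sector, and partition 1 ends exactly one sector before partition 9 starts. Printing the commands first gives one more chance to check the destination device before running anything.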

Considerations / lessons learnt:

- In a raidz1 config, you luckily have 1 chance to screw up one drive and still recover the data in degraded mode from the other 2, which gives a bit of relief when working through the procedure above.

- It is recommended to unmount the affected disk before dumping or restoring data.

- My setup consisted of 3x 4TB Seagate IronWolf disks (same vendor and model). This procedure fits disks with exactly the same number of sectors.

- I had the original commands used to create my raidz1. I am not sure whether this will work if you create the new pool with a different “ashift”. You could play with that in your lab.

- I learned that you can back up your partition tables, so it is a must from now on. You can do it with the “testdisk” command or a similar tool.

- Side note: while playing in the lab, I could recreate the pool even after shrinking the data partition (“Solaris /usr & Apple ZFS”) by a few sectors at the end, and I could still extract the data in good shape. Most probably, if your data uses the last sectors you will not be able to recover it, but this is something to take into consideration if you have different disks with different sector counts.

- Play with your lab as much as possible, making sure the data comes back and that you are able to open files, images, etc.
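For the partition table backup lesson, one option is sgdisk itself, which is already used above and has a `--backup` option; note this is a swapped-in tool, not the testdisk route mentioned in the list. The sketch only prints one backup command per device so the list can be reviewed first. The function name `backup_cmds` and the backup directory are hypothetical.

```shell
# Sketch: print one GPT backup command per device (nothing is executed here).
backup_cmds() {
    # $1 = backup directory, remaining arguments = devices
    local dir="$1" dev
    shift
    for dev in "$@"; do
        echo "sgdisk --backup=$dir/$(basename "$dev").gpt $dev"
    done
}

backup_cmds /root/gpt-backups /dev/sda /dev/sdb /dev/sdc
```

A saved table can later be written back with `sgdisk --load-backup=<file> <device>`. Keep the backup files on a disk that is not part of the pool.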
