Hello,

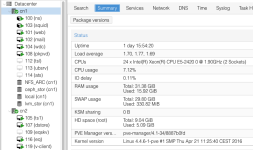

We have 3 identical servers, with the following specs:

Asus RS300-E8-PS4

Intel Xeon E3-1320v3

16 GB RAM

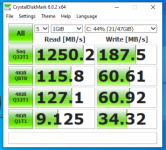

4 Enterprise SATA HDDs, 2 TB each, on a hardware RAID controller (LSI Logic / Symbios Logic MegaRAID SAS 2108)

3x1Gb Ethernet ports bonded to get more bandwidth

We have been using them as a very simple Proxmox VE 3.x cluster with no distributed storage, and so far it has met our needs really well.

We are now looking into upgrading to Proxmox VE 4.1, and we have moved all our load onto just 2 nodes, so we can reinstall from scratch one node at a time.

I am looking for some recommendations on "best practices", "what would you do", etc.

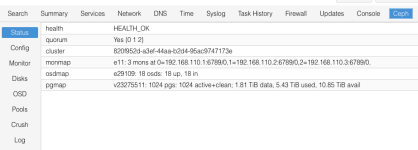

I have seen some threads about Ceph, DRBD9, ZFS sync, etc. for achieving live migration with just 3 nodes, but I would appreciate some advice so we know which direction to take and where to start researching.

So to all the Proxmox admins here, what would you do with our hardware?

Thanks!
