Hello! This is my first post here; thanks in advance to anyone who can give me some advice!

I'm running two production Proxmox VE (PVE) servers on two Dell R540 machines (each equipped with 128 GB RAM, 4x1TB SSD in ZFS RAID10, 2x Gold 6130), with a total of 8 VMs (all Linux, some Debian and some CentOS). I recently bought another server in another datacenter to run PBS (2x1TB SSD for PBS and 4x22TB SATA disks in ZFS RAID10 for backup storage, 64 GB RAM, 32 cores).

Everything is set up and running; I have about ten backups for each VM, in snapshot mode. All the PVE and PBS servers were installed in Dec 2024 and are up to date.

My issue is that I'm experiencing random freezes of the guest OS during backups.

Today, for example: the scheduled job ran the backup for VM106 and the guest froze (I could still log in via SSH, but every shell command took several minutes to complete, including the login itself). I stopped the backup via the PBS web console and rebooted the guest OS. I then ran a backup manually from the PVE web console and it worked without any freeze.

Also today: the scheduled backup of VM103 went well, then I tried a manual backup and it froze the host. It really looks like a "random" thing.

No errors in journalctl, no errors on the PBS or PVE consoles.

In other words: VM106 is a production web server with about 200 websites, and they were all unresponsive (timing out) during the scheduled backup; during the manual backup they were running smoothly. The exact opposite happened with VM103 (scheduled OK, manual froze). I experience this random behaviour on all VMs.

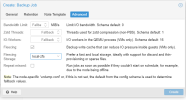

This is the failed scheduled backup, which was running but I had to stop it because freezing the server is not acceptable:

Code:

106: 2025-01-01 21:00:13 INFO: Starting Backup of VM 106 (qemu)

106: 2025-01-01 21:00:13 INFO: status = running

106: 2025-01-01 21:00:13 INFO: VM Name: srv5

106: 2025-01-01 21:00:13 INFO: include disk 'scsi0' 'local-zfs:vm-106-disk-0' 70G

106: 2025-01-01 21:00:13 INFO: include disk 'scsi1' 'local-zfs:vm-106-disk-1' 200G

106: 2025-01-01 21:00:13 INFO: include disk 'scsi2' 'local-zfs:vm-106-disk-2' 20G

106: 2025-01-01 21:00:13 INFO: include disk 'scsi3' 'local-zfs:vm-106-disk-3' 20G

106: 2025-01-01 21:00:13 INFO: include disk 'scsi4' 'local-zfs:vm-106-disk-4' 20G

106: 2025-01-01 21:00:13 INFO: include disk 'scsi5' 'local-zfs:vm-106-disk-5' 50G

106: 2025-01-01 21:00:13 INFO: include disk 'scsi6' 'local-zfs:vm-106-disk-6' 50G

106: 2025-01-01 21:00:13 INFO: backup mode: snapshot

106: 2025-01-01 21:00:13 INFO: ionice priority: 7

106: 2025-01-01 21:00:13 INFO: creating Proxmox Backup Server archive 'vm/106/2025-01-01T20:00:13Z'

106: 2025-01-01 21:00:16 INFO: started backup task 'de971a8e-45ab-4192-a341-7efbc0a292c3'

106: 2025-01-01 21:00:16 INFO: resuming VM again

106: 2025-01-01 21:00:16 INFO: scsi0: dirty-bitmap status: OK (23.1 GiB of 70.0 GiB dirty)

106: 2025-01-01 21:00:16 INFO: scsi1: dirty-bitmap status: OK (5.6 GiB of 200.0 GiB dirty)

106: 2025-01-01 21:00:16 INFO: scsi2: dirty-bitmap status: OK (568.0 MiB of 20.0 GiB dirty)

106: 2025-01-01 21:00:16 INFO: scsi3: dirty-bitmap status: OK (drive clean)

106: 2025-01-01 21:00:16 INFO: scsi4: dirty-bitmap status: OK (324.0 MiB of 20.0 GiB dirty)

106: 2025-01-01 21:00:16 INFO: scsi5: dirty-bitmap status: OK (13.6 GiB of 50.0 GiB dirty)

106: 2025-01-01 21:00:16 INFO: scsi6: dirty-bitmap status: OK (800.0 MiB of 50.0 GiB dirty)

106: 2025-01-01 21:00:16 INFO: using fast incremental mode (dirty-bitmap), 44.0 GiB dirty of 430.0 GiB total

106: 2025-01-01 21:00:19 INFO: 0% (76.0 MiB of 44.0 GiB) in 3s, read: 25.3 MiB/s, write: 25.3 MiB/s

106: 2025-01-01 21:07:05 INFO: 1% (504.0 MiB of 44.0 GiB) in 6m 49s, read: 1.1 MiB/s, write: 1.1 MiB/s

106: 2025-01-01 21:08:16 ERROR: interrupted by signal

106: 2025-01-01 21:08:16 INFO: aborting backup job

106: 2025-01-01 21:08:16 INFO: resuming VM again

106: 2025-01-01 21:08:16 ERROR: Backup of VM 106 failed - interrupted by signal

And this is the same backup, which I ran manually from the web console a few minutes later, without any freeze:

Code:

vzdump 106 --mode snapshot --node d00sug-antworksprox --storage backup --remove 0 --notes-template '{{guestname}}' --notification-mode auto

106: 2025-01-01 21:23:56 INFO: Starting Backup of VM 106 (qemu)

106: 2025-01-01 21:23:56 INFO: status = running

106: 2025-01-01 21:23:56 INFO: VM Name: srv5

106: 2025-01-01 21:23:56 INFO: include disk 'scsi0' 'local-zfs:vm-106-disk-0' 70G

106: 2025-01-01 21:23:56 INFO: include disk 'scsi1' 'local-zfs:vm-106-disk-1' 200G

106: 2025-01-01 21:23:56 INFO: include disk 'scsi2' 'local-zfs:vm-106-disk-2' 20G

106: 2025-01-01 21:23:56 INFO: include disk 'scsi3' 'local-zfs:vm-106-disk-3' 20G

106: 2025-01-01 21:23:56 INFO: include disk 'scsi4' 'local-zfs:vm-106-disk-4' 20G

106: 2025-01-01 21:23:56 INFO: include disk 'scsi5' 'local-zfs:vm-106-disk-5' 50G

106: 2025-01-01 21:23:56 INFO: include disk 'scsi6' 'local-zfs:vm-106-disk-6' 50G

106: 2025-01-01 21:23:56 INFO: backup mode: snapshot

106: 2025-01-01 21:23:56 INFO: ionice priority: 7

106: 2025-01-01 21:23:56 INFO: creating Proxmox Backup Server archive 'vm/106/2025-01-01T20:23:56Z'

106: 2025-01-01 21:23:58 INFO: started backup task 'd4d020b5-9cf4-4a38-9885-a35e644ee63f'

106: 2025-01-01 21:23:58 INFO: resuming VM again

106: 2025-01-01 21:23:58 INFO: scsi0: dirty-bitmap status: OK (23.2 GiB of 70.0 GiB dirty)

106: 2025-01-01 21:23:58 INFO: scsi1: dirty-bitmap status: OK (5.6 GiB of 200.0 GiB dirty)

106: 2025-01-01 21:23:58 INFO: scsi2: dirty-bitmap status: OK (684.0 MiB of 20.0 GiB dirty)

106: 2025-01-01 21:23:58 INFO: scsi3: dirty-bitmap status: OK (drive clean)

106: 2025-01-01 21:23:58 INFO: scsi4: dirty-bitmap status: OK (324.0 MiB of 20.0 GiB dirty)

106: 2025-01-01 21:23:58 INFO: scsi5: dirty-bitmap status: OK (13.6 GiB of 50.0 GiB dirty)

106: 2025-01-01 21:23:58 INFO: scsi6: dirty-bitmap status: OK (800.0 MiB of 50.0 GiB dirty)

106: 2025-01-01 21:23:58 INFO: using fast incremental mode (dirty-bitmap), 44.1 GiB dirty of 430.0 GiB total

106: 2025-01-01 21:24:01 INFO: 0% (348.0 MiB of 44.1 GiB) in 3s, read: 116.0 MiB/s, write: 112.0 MiB/s

106: 2025-01-01 21:24:04 INFO: 2% (948.0 MiB of 44.1 GiB) in 6s, read: 200.0 MiB/s, write: 146.7 MiB/s

106: 2025-01-01 21:24:07 INFO: 3% (1.3 GiB of 44.1 GiB) in 9s, read: 141.3 MiB/s, write: 136.0 MiB/s

106: 2025-01-01 21:24:10 INFO: 4% (1.8 GiB of 44.1 GiB) in 12s, read: 164.0 MiB/s, write: 164.0 MiB/s

106: 2025-01-01 21:24:13 INFO: 5% (2.4 GiB of 44.1 GiB) in 15s, read: 194.7 MiB/s, write: 156.0 MiB/s

106: 2025-01-01 21:24:16 INFO: 6% (2.9 GiB of 44.1 GiB) in 18s, read: 177.3 MiB/s, write: 161.3 MiB/s

106: 2025-01-01 21:24:19 INFO: 7% (3.5 GiB of 44.1 GiB) in 21s, read: 189.3 MiB/s, write: 189.3 MiB/s

106: 2025-01-01 21:24:22 INFO: 9% (4.0 GiB of 44.1 GiB) in 24s, read: 185.3 MiB/s, write: 185.3 MiB/s

106: 2025-01-01 21:24:25 INFO: 10% (4.5 GiB of 44.1 GiB) in 27s, read: 156.0 MiB/s, write: 156.0 MiB/s

106: 2025-01-01 21:24:28 INFO: 11% (5.0 GiB of 44.1 GiB) in 30s, read: 173.3 MiB/s, write: 173.3 MiB/s

106: 2025-01-01 21:24:31 INFO: 12% (5.3 GiB of 44.1 GiB) in 33s, read: 126.7 MiB/s, write: 126.7 MiB/s

106: 2025-01-01 21:24:34 INFO: 13% (5.8 GiB of 44.1 GiB) in 36s, read: 160.0 MiB/s, write: 158.7 MiB/s

106: 2025-01-01 21:24:39 INFO: 14% (6.3 GiB of 44.1 GiB) in 41s, read: 100.8 MiB/s, write: 100.8 MiB/s

106: 2025-01-01 21:24:42 INFO: 15% (6.7 GiB of 44.1 GiB) in 44s, read: 145.3 MiB/s, write: 145.3 MiB/s

106: 2025-01-01 21:24:45 INFO: 16% (7.3 GiB of 44.1 GiB) in 47s, read: 180.0 MiB/s, write: 174.7 MiB/s

106: 2025-01-01 21:24:48 INFO: 17% (7.7 GiB of 44.1 GiB) in 50s, read: 145.3 MiB/s, write: 145.3 MiB/s

106: 2025-01-01 21:24:51 INFO: 18% (8.3 GiB of 44.1 GiB) in 53s, read: 198.7 MiB/s, write: 157.3 MiB/s

106: 2025-01-01 21:24:54 INFO: 19% (8.7 GiB of 44.1 GiB) in 56s, read: 150.7 MiB/s, write: 94.7 MiB/s

106: 2025-01-01 21:24:57 INFO: 21% (9.3 GiB of 44.1 GiB) in 59s, read: 198.7 MiB/s, write: 166.7 MiB/s

106: 2025-01-01 21:25:00 INFO: 22% (9.9 GiB of 44.1 GiB) in 1m 2s, read: 209.3 MiB/s, write: 169.3 MiB/s

106: 2025-01-01 21:25:03 INFO: 23% (10.5 GiB of 44.1 GiB) in 1m 5s, read: 198.7 MiB/s, write: 144.0 MiB/s

106: 2025-01-01 21:25:06 INFO: 25% (11.1 GiB of 44.1 GiB) in 1m 8s, read: 194.7 MiB/s, write: 178.7 MiB/s

106: 2025-01-01 21:25:09 INFO: 26% (11.7 GiB of 44.1 GiB) in 1m 11s, read: 220.0 MiB/s, write: 145.3 MiB/s

106: 2025-01-01 21:25:12 INFO: 27% (12.3 GiB of 44.1 GiB) in 1m 14s, read: 190.7 MiB/s, write: 190.7 MiB/s

106: 2025-01-01 21:25:15 INFO: 29% (12.8 GiB of 44.1 GiB) in 1m 17s, read: 188.0 MiB/s, write: 188.0 MiB/s

106: 2025-01-01 21:25:18 INFO: 30% (13.4 GiB of 44.1 GiB) in 1m 20s, read: 194.7 MiB/s, write: 184.0 MiB/s

106: 2025-01-01 21:25:21 INFO: 31% (14.0 GiB of 44.1 GiB) in 1m 23s, read: 196.0 MiB/s, write: 182.7 MiB/s

106: 2025-01-01 21:25:24 INFO: 32% (14.5 GiB of 44.1 GiB) in 1m 26s, read: 178.7 MiB/s, write: 170.7 MiB/s

106: 2025-01-01 21:25:27 INFO: 33% (15.0 GiB of 44.1 GiB) in 1m 29s, read: 166.7 MiB/s, write: 157.3 MiB/s

106: 2025-01-01 21:25:30 INFO: 34% (15.4 GiB of 44.1 GiB) in 1m 32s, read: 152.0 MiB/s, write: 148.0 MiB/s

106: 2025-01-01 21:25:33 INFO: 36% (16.0 GiB of 44.1 GiB) in 1m 35s, read: 185.3 MiB/s, write: 184.0 MiB/s

106: 2025-01-01 21:25:36 INFO: 37% (16.5 GiB of 44.1 GiB) in 1m 38s, read: 192.0 MiB/s, write: 174.7 MiB/s

106: 2025-01-01 21:25:39 INFO: 38% (16.9 GiB of 44.1 GiB) in 1m 41s, read: 120.0 MiB/s, write: 92.0 MiB/s

106: 2025-01-01 21:25:44 INFO: 39% (17.3 GiB of 44.1 GiB) in 1m 46s, read: 80.8 MiB/s, write: 72.0 MiB/s

106: 2025-01-01 21:25:47 INFO: 40% (17.8 GiB of 44.1 GiB) in 1m 49s, read: 166.7 MiB/s, write: 136.0 MiB/s

106: 2025-01-01 21:25:50 INFO: 41% (18.4 GiB of 44.1 GiB) in 1m 52s, read: 228.0 MiB/s, write: 145.3 MiB/s

106: 2025-01-01 21:25:53 INFO: 42% (18.9 GiB of 44.1 GiB) in 1m 55s, read: 181.3 MiB/s, write: 178.7 MiB/s

106: 2025-01-01 21:25:56 INFO: 44% (19.5 GiB of 44.1 GiB) in 1m 58s, read: 194.7 MiB/s, write: 194.7 MiB/s

106: 2025-01-01 21:25:59 INFO: 45% (20.1 GiB of 44.1 GiB) in 2m 1s, read: 213.3 MiB/s, write: 189.3 MiB/s

106: 2025-01-01 21:26:02 INFO: 47% (20.8 GiB of 44.1 GiB) in 2m 4s, read: 216.0 MiB/s, write: 209.3 MiB/s

106: 2025-01-01 21:26:05 INFO: 48% (21.3 GiB of 44.1 GiB) in 2m 7s, read: 188.0 MiB/s, write: 166.7 MiB/s

106: 2025-01-01 21:26:08 INFO: 49% (21.9 GiB of 44.1 GiB) in 2m 10s, read: 204.0 MiB/s, write: 162.7 MiB/s

106: 2025-01-01 21:26:11 INFO: 50% (22.5 GiB of 44.1 GiB) in 2m 13s, read: 184.0 MiB/s, write: 165.3 MiB/s

106: 2025-01-01 21:26:14 INFO: 52% (23.0 GiB of 44.1 GiB) in 2m 16s, read: 198.7 MiB/s, write: 162.7 MiB/s

106: 2025-01-01 21:26:17 INFO: 53% (23.6 GiB of 44.1 GiB) in 2m 19s, read: 192.0 MiB/s, write: 169.3 MiB/s

106: 2025-01-01 21:26:20 INFO: 54% (24.2 GiB of 44.1 GiB) in 2m 22s, read: 209.3 MiB/s, write: 144.0 MiB/s

106: 2025-01-01 21:26:23 INFO: 56% (24.8 GiB of 44.1 GiB) in 2m 25s, read: 185.3 MiB/s, write: 153.3 MiB/s

106: 2025-01-01 21:26:26 INFO: 57% (25.3 GiB of 44.1 GiB) in 2m 28s, read: 166.7 MiB/s, write: 160.0 MiB/s

106: 2025-01-01 21:26:29 INFO: 58% (25.8 GiB of 44.1 GiB) in 2m 31s, read: 172.0 MiB/s, write: 162.7 MiB/s

106: 2025-01-01 21:26:32 INFO: 59% (26.3 GiB of 44.1 GiB) in 2m 34s, read: 197.3 MiB/s, write: 166.7 MiB/s

106: 2025-01-01 21:26:35 INFO: 60% (26.9 GiB of 44.1 GiB) in 2m 37s, read: 198.7 MiB/s, write: 172.0 MiB/s

106: 2025-01-01 21:26:38 INFO: 62% (27.5 GiB of 44.1 GiB) in 2m 40s, read: 201.3 MiB/s, write: 189.3 MiB/s

106: 2025-01-01 21:26:41 INFO: 63% (28.0 GiB of 44.1 GiB) in 2m 43s, read: 182.7 MiB/s, write: 176.0 MiB/s

106: 2025-01-01 21:26:44 INFO: 64% (28.6 GiB of 44.1 GiB) in 2m 46s, read: 189.3 MiB/s, write: 165.3 MiB/s

106: 2025-01-01 21:26:47 INFO: 66% (29.1 GiB of 44.1 GiB) in 2m 49s, read: 186.7 MiB/s, write: 154.7 MiB/s

106: 2025-01-01 21:26:50 INFO: 67% (29.7 GiB of 44.1 GiB) in 2m 52s, read: 188.0 MiB/s, write: 153.3 MiB/s

106: 2025-01-01 21:26:53 INFO: 68% (30.2 GiB of 44.1 GiB) in 2m 55s, read: 181.3 MiB/s, write: 138.7 MiB/s

106: 2025-01-01 21:26:56 INFO: 69% (30.8 GiB of 44.1 GiB) in 2m 58s, read: 178.7 MiB/s, write: 148.0 MiB/s

106: 2025-01-01 21:26:59 INFO: 70% (31.2 GiB of 44.1 GiB) in 3m 1s, read: 152.0 MiB/s, write: 150.7 MiB/s

106: 2025-01-01 21:27:02 INFO: 71% (31.7 GiB of 44.1 GiB) in 3m 4s, read: 186.7 MiB/s, write: 170.7 MiB/s

106: 2025-01-01 21:27:05 INFO: 73% (32.3 GiB of 44.1 GiB) in 3m 7s, read: 194.7 MiB/s, write: 149.3 MiB/s

106: 2025-01-01 21:27:08 INFO: 74% (32.9 GiB of 44.1 GiB) in 3m 10s, read: 201.3 MiB/s, write: 88.0 MiB/s

106: 2025-01-01 21:27:11 INFO: 76% (33.6 GiB of 44.1 GiB) in 3m 13s, read: 249.3 MiB/s, write: 102.7 MiB/s

106: 2025-01-01 21:27:14 INFO: 77% (34.2 GiB of 44.1 GiB) in 3m 16s, read: 201.3 MiB/s, write: 146.7 MiB/s

106: 2025-01-01 21:27:17 INFO: 78% (34.8 GiB of 44.1 GiB) in 3m 19s, read: 185.3 MiB/s, write: 168.0 MiB/s

106: 2025-01-01 21:27:20 INFO: 80% (35.5 GiB of 44.1 GiB) in 3m 22s, read: 252.0 MiB/s, write: 94.7 MiB/s

106: 2025-01-01 21:27:23 INFO: 81% (36.1 GiB of 44.1 GiB) in 3m 25s, read: 196.0 MiB/s, write: 166.7 MiB/s

106: 2025-01-01 21:27:26 INFO: 82% (36.6 GiB of 44.1 GiB) in 3m 28s, read: 186.7 MiB/s, write: 162.7 MiB/s

106: 2025-01-01 21:27:29 INFO: 84% (37.2 GiB of 44.1 GiB) in 3m 31s, read: 204.0 MiB/s, write: 156.0 MiB/s

106: 2025-01-01 21:27:32 INFO: 85% (37.8 GiB of 44.1 GiB) in 3m 34s, read: 193.3 MiB/s, write: 156.0 MiB/s

106: 2025-01-01 21:27:35 INFO: 86% (38.4 GiB of 44.1 GiB) in 3m 37s, read: 205.3 MiB/s, write: 162.7 MiB/s

106: 2025-01-01 21:27:38 INFO: 88% (39.0 GiB of 44.1 GiB) in 3m 40s, read: 194.7 MiB/s, write: 149.3 MiB/s

106: 2025-01-01 21:27:41 INFO: 89% (39.5 GiB of 44.1 GiB) in 3m 43s, read: 190.7 MiB/s, write: 165.3 MiB/s

106: 2025-01-01 21:27:44 INFO: 90% (40.1 GiB of 44.1 GiB) in 3m 46s, read: 208.0 MiB/s, write: 168.0 MiB/s

106: 2025-01-01 21:27:47 INFO: 92% (40.7 GiB of 44.1 GiB) in 3m 49s, read: 208.0 MiB/s, write: 142.7 MiB/s

106: 2025-01-01 21:27:50 INFO: 93% (41.3 GiB of 44.1 GiB) in 3m 52s, read: 197.3 MiB/s, write: 146.7 MiB/s

106: 2025-01-01 21:27:53 INFO: 94% (41.9 GiB of 44.1 GiB) in 3m 55s, read: 193.3 MiB/s, write: 156.0 MiB/s

106: 2025-01-01 21:27:56 INFO: 96% (42.5 GiB of 44.1 GiB) in 3m 58s, read: 197.3 MiB/s, write: 162.7 MiB/s

106: 2025-01-01 21:27:59 INFO: 97% (43.0 GiB of 44.1 GiB) in 4m 1s, read: 189.3 MiB/s, write: 170.7 MiB/s

106: 2025-01-01 21:28:02 INFO: 98% (43.6 GiB of 44.1 GiB) in 4m 4s, read: 205.3 MiB/s, write: 134.7 MiB/s

106: 2025-01-01 21:28:05 INFO: 100% (44.1 GiB of 44.1 GiB) in 4m 7s, read: 178.7 MiB/s, write: 162.7 MiB/s

106: 2025-01-01 21:28:29 INFO: backup was done incrementally, reused 392.66 GiB (91%)

106: 2025-01-01 21:28:29 INFO: transferred 44.14 GiB in 271 seconds (166.8 MiB/s)

106: 2025-01-01 21:28:29 INFO: adding notes to backup

106: 2025-01-01 21:28:30 INFO: Finished Backup of VM 106 (00:04:34)

What am I doing wrong? What can I do to prevent the guest OS from freezing for minutes during backups?
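To put a number on the difference between the two runs, here is a minimal Python sketch that reads vzdump progress lines like the ones above and flags intervals where the average transfer rate drops below a threshold. The function names (`parse_progress`, `find_stalls`) and the 10 MiB/s threshold are my own assumptions for illustration, not part of any Proxmox tool, and the regular expression only targets the progress-line format shown in these logs.

```python
import re

# Matches vzdump progress lines such as:
# "106: 2025-01-01 21:07:05 INFO: 1% (504.0 MiB of 44.0 GiB) in 6m 49s, read: ..."
PROGRESS = re.compile(
    r"INFO:\s+\d+% \((?P<done>[\d.]+) (?P<unit>MiB|GiB) of .*?\) "
    r"in (?P<elapsed>(?:\d+[hms]\s*)+),"
)

UNIT_TO_MIB = {"MiB": 1.0, "GiB": 1024.0}
SECONDS = {"h": 3600, "m": 60, "s": 1}


def parse_progress(line):
    """Return (elapsed_seconds, transferred_mib), or None for non-progress lines."""
    m = PROGRESS.search(line)
    if m is None:
        return None
    done = float(m.group("done")) * UNIT_TO_MIB[m.group("unit")]
    elapsed = sum(
        int(n) * SECONDS[u]
        for n, u in re.findall(r"(\d+)([hms])", m.group("elapsed"))
    )
    return elapsed, done


def find_stalls(lines, min_rate=10.0):
    """List (start_s, end_s, MiB/s) for intervals slower than min_rate (hypothetical threshold)."""
    points = [p for p in map(parse_progress, lines) if p is not None]
    stalls = []
    for (t0, d0), (t1, d1) in zip(points, points[1:]):
        if t1 > t0:
            rate = (d1 - d0) / (t1 - t0)
            if rate < min_rate:
                stalls.append((t0, t1, round(rate, 2)))
    return stalls


# The two progress lines from the failed scheduled backup above.
failed = [
    "106: 2025-01-01 21:00:19 INFO: 0% (76.0 MiB of 44.0 GiB) in 3s, read: 25.3 MiB/s, write: 25.3 MiB/s",
    "106: 2025-01-01 21:07:05 INFO: 1% (504.0 MiB of 44.0 GiB) in 6m 49s, read: 1.1 MiB/s, write: 1.1 MiB/s",
]
print(find_stalls(failed))  # [(3, 409, 1.05)]
```

On the failed run this reports about 1.05 MiB/s between the 3 s and 409 s marks, in line with the 1.1 MiB/s that vzdump itself printed, while the manual run stays well above the threshold throughout.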

Additional information: all these VMs were originally created as ESXi 6.5 VMs; they have been running on PVE for about two months.

VM106 config:

Please feel free to ask any kind of question; any help is really appreciated!

Best regards to you all,

Giorgio
