Greetings everyone,

I have three VMs.

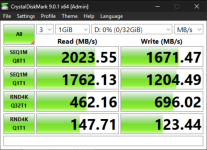

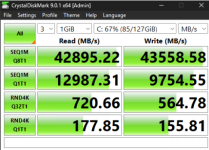

1: Working, with ~42 GB/s read/write.

2: Malware-isolated VM for reverse-engineering analysis and application testing (arg: `-hypervisor` flag set to hide the VM from malware).

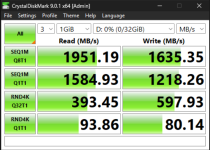

3: Another VM, pretty vanilla, with ~2 GB/s sequential read/write.

All three VMs run on the same physical processor and ZFS storage pool, and their settings are mirrored (apart from VM #2, which is now working properly).

After about 9 hours of troubleshooting VM #2, I found that disabling the hypervisor flag absolutely murdered my 4K random I/O, dropping it from ~700 MB/s to ~20 MB/s. Re-enabling the flag sent the speeds sky high again, matching my known-good VM.
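To put those random-I/O figures in IOPS terms, here is a small Python sketch. It assumes the benchmark's "MB" means 10^6 bytes and that the random test uses 4 KiB (4096-byte) blocks; the function name `mbps_to_iops` is just illustrative.

```python
# Convert a benchmark throughput figure (MB/s) into IOPS for a fixed block size.
# Assumptions: "MB" is decimal (10**6 bytes) and the random test uses 4 KiB
# blocks; change block_bytes if your benchmark uses a different size.


def mbps_to_iops(mb_per_s: float, block_bytes: int = 4096) -> float:
    """Return the I/O operations per second implied by a throughput figure."""
    return mb_per_s * 10**6 / block_bytes


if __name__ == "__main__":
    for label, mbps in [("hypervisor bit hidden", 20), ("hypervisor bit exposed", 700)]:
        print(f"{label}: {mbps} MB/s ~ {mbps_to_iops(mbps):,.0f} IOPS")
```

Under those assumptions the drop is from roughly 170,898 to 4,883 IOPS, a 35x difference.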

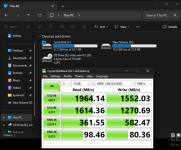

VM #3 has slightly different R/W issues: random I/O is fine (matching VM #1 and #2), but sequential R/W seems capped at ~2 GB/s, while VM #1 and #2 now get anywhere from 24 to 42 GB/s.
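Since GUI benchmark numbers can be hard to compare across guests, one option is to run the exact same fio jobs in every VM. The sketch below only builds the fio argument lists in Python; it assumes fio is installed in the guest, uses the `windowsaio` engine for Windows guests (`libaio` would be the Linux equivalent), and writes to a hypothetical test file `fio-test.dat`. `--direct=1` requests non-buffered I/O so the guest page cache is bypassed; the host-side `cache=writeback` setting from the configs still applies.

```python
# Build identical fio jobs for every VM (sketch). Assumptions: fio is installed
# in the guest, "windowsaio" for Windows guests ("libaio" on Linux), and the
# test file name "fio-test.dat" is hypothetical.
import shlex


def fio_args(rw: str, bs: str, iodepth: int, size: str = "4G",
             runtime_s: int = 30, ioengine: str = "windowsaio") -> list[str]:
    """Return an argv list for one fio job."""
    return [
        "fio",
        f"--name={rw}-{bs}",
        "--filename=fio-test.dat",
        f"--rw={rw}",            # read/write = sequential, randread/randwrite = random
        f"--bs={bs}",            # block size per I/O
        f"--size={size}",        # size of the test file
        f"--iodepth={iodepth}",  # outstanding I/Os per job
        f"--ioengine={ioengine}",
        "--direct=1",            # non-buffered I/O: bypass the guest page cache
        f"--runtime={runtime_s}",
        "--time_based",          # keep running for runtime_s even if size is reached
        "--output-format=json",
    ]


SEQ_READ_1M = fio_args("read", "1M", iodepth=8)
SEQ_WRITE_1M = fio_args("write", "1M", iodepth=8)
RAND_READ_4K = fio_args("randread", "4k", iodepth=32)

if __name__ == "__main__":
    for argv in (SEQ_READ_1M, SEQ_WRITE_1M, RAND_READ_4K):
        print(shlex.join(argv))
```

Each list can be passed to `subprocess.run(argv, capture_output=True, text=True)` and the JSON report parsed with `json.loads`, so the same job definitions produce comparable numbers in VM #1 and VM #3.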

All three VMs are set up identically, and I have spent hours trying to figure out why the remaining VM is capped at ~2 GB/s while the other two reach tens of GB/s.

I added both a VirtIO Block disk and a SCSI disk to VM #3, and both appear to be capped at ~2 GB/s for reads and writes.

Is there anything I am overlooking, perhaps outside the config, that could be causing this limit? Both VMs have the latest guest tools installed (virtio-win 0.1.285), which I assume keeps both drivers equally up to date on each VM. Since the VMs are on the same storage and the same host, with the settings below, I suspect something external to the VM is limiting disk R/W.

[VM#1]

agent: 1

bios: ovmf

boot: order=scsi0;net0

cores: 4

cpu: x86-64-v2-AES

efidisk0: local-zfs:vm-101-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M

machine: pc-q35-9.0,viommu=virtio

memory: 12288

meta: creation-qemu=9.0.2,ctime=1737426023

name: Windows11

net0: virtio=BC:24:11:2C 5:62,bridge=vmbr0,firewall=1

numa: 0

ostype: win11

scsi0: local-zfs:vm-101-disk-3,cache=writeback,size=128G

scsihw: virtio-scsi-single

smbios1: uuid=8aaef5f8-511f-4b28-91d9-639ebe140c5a

sockets: 1

tpmstate0: local-zfs:vm-101-disk-2,size=4M,version=v2.0

vmgenid: f61bd622-7b5b-4ff3-9bd0-00e42fa53be0

[VM#3]

agent: 1

bios: ovmf

boot: order=virtio0;net0;ide2

cores: 4

cpu: x86-64-v2-AES

efidisk0: local-zfs:vm-106-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M

ide2: local:iso/virtio-win-0.1.285.iso,media=cdrom,size=771138K

machine: pc-q35-9.0,viommu=virtio

memory: 12288

meta: creation-qemu=9.0.2,ctime=1751256788

name: DiskTestIssueWithTemplate

net0: virtio=BC:24:11:50:50:64,bridge=vmbr0

numa: 0

ostype: win11

scsi0: local-zfs:vm-106-disk-3,backup=0,cache=writeback,iothread=1,size=32G

scsihw: virtio-scsi-single

smbios1: uuid=c406870d-66ab-4949-a377-7975bfb9ecc6

sockets: 1

tpmstate0: local-zfs:vm-106-disk-1,size=4M,version=v2.0

virtio0: local-zfs:vm-106-disk-2,backup=0,cache=writeback,iothread=1,size=64G

vmgenid: f2aa089c-4e50-421f-807f-2eaed0b2978b
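Because configs like these are easy to misread side by side, a small Python diff can confirm exactly where they differ. This is a sketch assuming each line is `key: value` (as printed by `qm config <vmid>`) with comma-separated `opt=val` options; the function names are hypothetical, and identity-only keys (`name`, `meta`, `smbios1`, `vmgenid`) are skipped.

```python
# Diff two Proxmox VM configs in "key: value" form (sketch). Assumed format:
# lines like "scsi0: local-zfs:vm-101-disk-3,cache=writeback,size=128G".

IDENTITY_KEYS = {"name", "meta", "smbios1", "vmgenid"}


def parse_config(text: str) -> dict[str, dict[str, str]]:
    """Map each key to its options; bare items are stored as '_0', '_1', ..."""
    config: dict[str, dict[str, str]] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if ":" not in line or line.startswith("["):
            continue  # skip blank lines and section headers such as [VM#1]
        key, value = line.split(":", 1)
        opts: dict[str, str] = {}
        for i, part in enumerate(value.strip().split(",")):
            if "=" in part:
                k, v = part.split("=", 1)
                opts[k] = v
            else:
                opts[f"_{i}"] = part  # e.g. the volume name of a disk
        config[key.strip()] = opts
    return config


def diff_configs(a: str, b: str) -> list[str]:
    """Return differences between config A and config B, ignoring identity keys."""
    ca, cb = parse_config(a), parse_config(b)
    out: list[str] = []
    for key in sorted((ca.keys() | cb.keys()) - IDENTITY_KEYS):
        if key not in ca or key not in cb:
            out.append(f"{key}: only in {'A' if key in ca else 'B'}")
            continue
        for opt in sorted(ca[key].keys() | cb[key].keys()):
            va, vb = ca[key].get(opt), cb[key].get(opt)
            if va != vb:
                out.append(f"{key}.{opt}: {va!r} -> {vb!r}")
    return out


# Excerpts copied from the VM #1 and VM #3 configs above.
VM1_EXCERPT = """boot: order=scsi0;net0
scsi0: local-zfs:vm-101-disk-3,cache=writeback,size=128G
scsihw: virtio-scsi-single"""

VM3_EXCERPT = """boot: order=virtio0;net0;ide2
scsi0: local-zfs:vm-106-disk-3,backup=0,cache=writeback,iothread=1,size=32G
scsihw: virtio-scsi-single
virtio0: local-zfs:vm-106-disk-2,backup=0,cache=writeback,iothread=1,size=64G"""

if __name__ == "__main__":
    for line in diff_configs(VM1_EXCERPT, VM3_EXCERPT):
        print(line)
```

On these excerpts the diff reports a different boot order, `backup=0` and `iothread=1` only on VM #3's `scsi0`, and a `virtio0` disk that exists only on VM #3. Running it on the full `qm config` output of both VMs gives the complete list of differences.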

My other question concerns VM #2: if I want to hide the hypervisor, is there a reasonable secondary flag or option that gets random 4K I/O above ~20 MB/s (preferably a single setting rather than a pile of performance tweaks), or is disabling the hypervisor flag all or nothing in its impact on R/W?
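When experimenting with VM #2's CPU flags, it helps to confirm from inside the guest whether the hypervisor bit is actually hidden. A minimal sketch, assuming a Linux guest (or a Linux live ISO booted in the VM), where the kernel lists the CPUID hypervisor bit as the `hypervisor` flag in `/proc/cpuinfo`; a Windows guest would need a different tool, and `hypervisor_flag_visible` is a hypothetical helper name.

```python
# Check whether the CPUID "hypervisor" bit is visible to a Linux guest (sketch).
# Assumption: x86 Linux guest, where /proc/cpuinfo lists it in the "flags" line.
from pathlib import Path


def hypervisor_flag_visible(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in cpuinfo text contains 'hypervisor'."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags" and "hypervisor" in value.split():
            return True
    return False


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print("hypervisor bit visible:", hypervisor_flag_visible(cpuinfo.read_text()))
    else:
        print("/proc/cpuinfo not found; run this inside a Linux guest")
```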

Thanks for any feedback on these two questions.
