Hi all,

I have two Proxmox servers running on very similar hardware (Dell PowerEdge R630 with 2 Xeon E5-2697 CPUs, 14 cores each with HT, and 512 GB ECC RAM).

Both servers run PVE 7.4-18 (yes, I know, I have to upgrade).

Both servers also have PBS 2.4-7 installed alongside PVE.

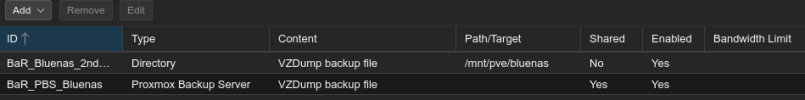

Both servers mount a NAS disk through NFS, and on both, PBS is configured to write to that mount point (i.e. the datastore points to that mount point).
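Since the two setups differ mainly in where the datastore lives, one cheap check is to confirm on both hosts that the datastore directory really sits on the NFS mount, and to compare the mount options (`hard`/`soft`, `vers=`, `timeo=` and so on). Below is a minimal Python sketch that does this by parsing `/proc/mounts`; `find_mount` is a hypothetical helper, `/mnt/bluenas/pbs` is a placeholder for the real datastore path, and the function does not resolve symlinks.

```python
import os
import re
from typing import List, Optional, Tuple

# /proc/mounts encodes space, tab, newline and backslash as 3-digit octal escapes.
_OCTAL = re.compile(r"\\([0-7]{3})")


def _unescape(field: str) -> str:
    return _OCTAL.sub(lambda m: chr(int(m.group(1), 8)), field)


def find_mount(path: str, mounts_text: str) -> Optional[Tuple[str, str, str, List[str]]]:
    """Return (source, mount_point, fstype, options) of the mount that holds path."""
    path = os.path.normpath(path)
    best = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue
        source, mount_point, fstype, options = (_unescape(f) for f in fields[:4])
        covers = (mount_point == "/" or path == mount_point
                  or path.startswith(mount_point.rstrip("/") + "/"))
        # ">=" lets a later entry for the same mount point win: later lines in
        # /proc/mounts are mounted on top of earlier ones.
        if covers and (best is None or len(mount_point) >= len(best[1])):
            best = (source, mount_point, fstype, options.split(","))
    return best


if __name__ == "__main__":
    if os.path.exists("/proc/mounts"):
        with open("/proc/mounts") as f:
            # "/mnt/bluenas/pbs" is a hypothetical datastore path; use the real one.
            print(find_mount("/mnt/bluenas/pbs", f.read()))
```

Running it on both servers and comparing the option lists would show whether the second host mounts the share differently.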

The first server works without any problems (its PBS instance also hosts backups for another, older server running PVE 6.4).

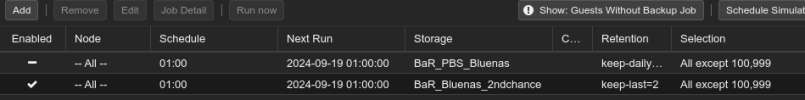

The second server cannot complete a single backup of any of its 8 VMs when PBS is used as the storage. With "local" storage (even the same NFS mount point added as a "directory" storage), the backups complete without issues.

In fact, with PBS it cannot even start a backup.

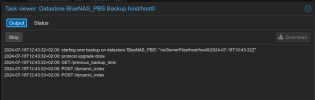

Here is an example backup job log (the one that is running right now):

```
INFO: starting new backup job: vzdump --all 1 --quiet 1 --storage AMENDED --mailnotification failure --prune-backups 'keep-daily=1,keep-last=2,keep-monthly=1,keep-weekly=1,keep-yearly=1' --notes-template '{{guestname}}' --mode snapshot --mailto log@AMENDED
INFO: Starting Backup of VM 100 (qemu)
INFO: Backup started at 2024-07-17 01:00:06
INFO: status = stopped
INFO: backup mode: stop
INFO: ionice priority: 7
INFO: VM Name: AMENDED
INFO: include disk 'scsi0' 'local-lvm:vm-100-disk-0' 40G
INFO: creating Proxmox Backup Server archive 'vm/100/2024-07-16T23:00:06Z'
INFO: starting kvm to execute backup task
ERROR: VM 100 qmp command 'backup' failed - got timeout
INFO: aborting backup job
ERROR: VM 100 qmp command 'backup-cancel' failed - got timeout
VM 100 qmp command 'query-status' failed - got timeout
ERROR: Backup of VM 100 failed - VM 100 qmp command 'backup' failed - got timeout
INFO: Failed at 2024-07-17 01:12:19
INFO: Starting Backup of VM 101 (qemu)
INFO: Backup started at 2024-07-17 01:12:19
INFO: status = running
INFO: VM Name: AMENDED
INFO: include disk 'scsi0' 'local-lvm:vm-101-disk-0' 200G
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: creating Proxmox Backup Server archive 'vm/101/2024-07-16T23:12:19Z'
INFO: issuing guest-agent 'fs-freeze' command
INFO: issuing guest-agent 'fs-thaw' command
ERROR: VM 101 qmp command 'backup' failed - got timeout
INFO: aborting backup job
ERROR: VM 101 qmp command 'backup-cancel' failed - got timeout
INFO: resuming VM again
ERROR: Backup of VM 101 failed - VM 101 qmp command 'cont' failed - got timeout
INFO: Failed at 2024-07-17 01:25:10
INFO: Starting Backup of VM 102 (qemu)
INFO: Backup started at 2024-07-17 01:25:10
INFO: status = running
INFO: VM Name: AMENDED
INFO: include disk 'scsi0' 'vmzfs:vm-102-disk-0' 200G
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: creating Proxmox Backup Server archive 'vm/102/2024-07-16T23:25:10Z'
INFO: issuing guest-agent 'fs-freeze' command
...
```

Meanwhile, on PBS, each backup task keeps running even after PVE gives up with `INFO: aborting backup job`, and it keeps running forever until I click the "Stop" button. On PBS, one example task log looks like this:

```
2024-07-17T01:00:17+02:00: starting new backup on datastore 'BlueNAS_PBS': "vm/100/2024-07-16T23:00:06Z"
2024-07-17T01:00:17+02:00: GET /previous: 400 Bad Request: no valid previous backup
```

Even the Garbage Collection task hangs forever on PBS, and in this case I cannot even stop it with the Stop button.

Actually, it seems that the GC process dies quite soon after starting, but somehow PBS doesn't notice this.
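One way to check the "dies but PBS doesn't notice" impression is to look at the kernel's view of the process and its threads. A thread in state `D` (uninterruptible sleep) usually cannot be interrupted by signals until its I/O completes, which is what I/O blocked on an unresponsive hard-mounted NFS share typically looks like; that would be consistent with a task that cannot be stopped, but it is only a hypothesis. Below is a minimal Python sketch that reads `/proc/<pid>/status` and `/proc/<pid>/task/<tid>/status`. The helper names are hypothetical, and it assumes PBS runs GC as a task inside the `proxmox-backup-proxy` daemon, so that daemon's PID is the one to inspect.

```python
import os
from typing import Dict, Optional


def parse_state(status_text: str) -> Optional[str]:
    """Extract the one-letter state from the text of a /proc/.../status file."""
    for line in status_text.splitlines():
        if line.startswith("State:"):
            # e.g. "State:\tD (disk sleep)" -> "D"
            return line.split()[1]
    return None


def process_state(pid: int) -> Optional[str]:
    """State letter of pid's main thread, or None if the process no longer exists."""
    try:
        with open(f"/proc/{pid}/status") as f:
            return parse_state(f.read())
    except FileNotFoundError:
        return None


def thread_states(pid: int) -> Dict[int, str]:
    """Map every thread id of pid to its state letter ({} if the process is gone)."""
    task_dir = f"/proc/{pid}/task"
    try:
        tids = os.listdir(task_dir)
    except FileNotFoundError:
        return {}
    states = {}
    for tid in tids:
        try:
            with open(f"{task_dir}/{tid}/status") as f:
                state = parse_state(f.read())
        except FileNotFoundError:  # the thread exited while we were scanning
            continue
        if state is not None:
            states[int(tid)] = state
    return states


if __name__ == "__main__":
    import sys
    for arg in sys.argv[1:]:
        pid = int(arg)
        stuck = [tid for tid, s in thread_states(pid).items() if s == "D"]
        print(pid, process_state(pid), "threads in D:", stuck)
```

Passing it the PID printed by `pidof proxmox-backup-proxy` while GC "hangs" would show whether any thread is stuck in `D`, which would hint at storage I/O rather than at PBS's task bookkeeping.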

This is what I see in /var/log/daemon.log (excerpt):

```
Jul 17 01:33:36 host0 pvestatd[3992]: VM 100 qmp command failed - VM 100 qmp command 'query-proxmox-support' failed - got timeout
Jul 17 01:33:41 host0 pvestatd[3992]: VM 102 qmp command failed - VM 102 qmp command 'query-proxmox-support' failed - unable to connect to VM 102 qmp socket - timeout after 51 retries
Jul 17 01:33:46 host0 pvestatd[3992]: VM 101 qmp command failed - VM 101 qmp command 'query-proxmox-support' failed - got timeout
Jul 17 01:33:47 host0 pvestatd[3992]: status update time (21.759 seconds)
Jul 17 01:33:58 host0 pvestatd[3992]: VM 101 qmp command failed - VM 101 qmp command 'query-proxmox-support' failed - got timeout
Jul 17 01:34:03 host0 pvestatd[3992]: VM 100 qmp command failed - VM 100 qmp command 'query-proxmox-support' failed - got timeout
Jul 17 01:34:08 host0 pvestatd[3992]: VM 102 qmp command failed - VM 102 qmp command 'query-proxmox-support' failed - unable to connect to VM 102 qmp socket - timeout after 51 retries
Jul 17 01:34:09 host0 pvestatd[3992]: status update time (21.820 seconds)
Jul 17 01:34:20 host0 pvestatd[3992]: VM 101 qmp command failed - VM 101 qmp command 'query-proxmox-support' failed - got timeout
Jul 17 01:34:25 host0 pvestatd[3992]: VM 100 qmp command failed - VM 100 qmp command 'query-proxmox-support' failed - got timeout
Jul 17 01:34:30 host0 pvestatd[3992]: VM 102 qmp command failed - VM 102 qmp command 'query-proxmox-support' failed - unable to connect to VM 102 qmp socket - timeout after 51 retries
Jul 17 01:34:30 host0 pvestatd[3992]: status update time (21.739 seconds)
Jul 17 01:34:41 host0 pvestatd[3992]: VM 101 qmp command failed - VM 101 qmp command 'query-proxmox-support' failed - got timeout
Jul 17 01:34:46 host0 pvestatd[3992]: VM 102 qmp command failed - VM 102 qmp command 'query-proxmox-support' failed - unable to connect to VM 102 qmp socket - timeout after 51 retries
Jul 17 01:34:51 host0 pvestatd[3992]: VM 100 qmp command failed - VM 100 qmp command 'query-proxmox-support' failed - got timeout
Jul 17 01:34:52 host0 pvestatd[3992]: status update time (21.759 seconds)
```

One final note: when PVE gives up on the backup of a VM, the VM keeps running smoothly if it was already running before.

If instead it was powered off before the backup, it stays in a bogus "running" state until I issue `qm stop` (`qm shutdown` fails with `VM 100 qmp command 'query-status' failed - got timeout`).

I have read lots of threads about qmp problems and the "got timeout" error, but I haven't found an exact match for my situation, and even the threads marked as solved have rather sketchy resolutions.
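All of the "got timeout" errors above come from PVE talking to QEMU over the VM's QMP socket. A quick way to see whether QEMU answers at all, independently of vzdump and pvestatd, is to run the QMP handshake by hand with an explicit timeout. Below is a minimal Python sketch, assuming the socket lives at `/var/run/qemu-server/<vmid>.qmp` (the usual PVE location, but verify it on your host); `probe_vm` and `qmp_query_status` are hypothetical helper names, not part of any Proxmox API. As far as I know, QEMU's QMP socket serves one client at a time, so the probe is best run while no backup is active.

```python
import json
import socket
from typing import BinaryIO


def _read_reply(rfile: BinaryIO) -> dict:
    """Read QMP messages until one that is not an asynchronous event."""
    while True:
        line = rfile.readline()
        if not line:
            raise ConnectionError("QMP peer closed the connection")
        message = json.loads(line)
        if "event" not in message:
            return message


def qmp_query_status(sock: socket.socket) -> dict:
    """Do the QMP greeting/capabilities handshake, then run 'query-status'."""
    rfile = sock.makefile("rb")
    wfile = sock.makefile("wb")
    try:
        greeting = _read_reply(rfile)
        if "QMP" not in greeting:
            raise RuntimeError(f"unexpected greeting: {greeting}")
        reply: dict = {}
        for command in ("qmp_capabilities", "query-status"):
            wfile.write(json.dumps({"execute": command}).encode() + b"\n")
            wfile.flush()
            reply = _read_reply(rfile)
            if "error" in reply:
                raise RuntimeError(f"{command} failed: {reply['error']}")
        return reply["return"]
    finally:
        rfile.close()
        wfile.close()


def probe_vm(vmid: int, timeout: float = 5.0) -> dict:
    """Connect to a VM's QMP socket and return its 'query-status' result."""
    # Assumed PVE location of the QMP socket; verify it on your host.
    path = f"/var/run/qemu-server/{vmid}.qmp"
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        # Bounds every blocking read: it raises socket.timeout (TimeoutError on
        # Python 3.10+) instead of hanging; connect() may raise OSError.
        sock.settimeout(timeout)
        sock.connect(path)
        return qmp_query_status(sock)
```

If `probe_vm(100)` also times out with no backup running, that would suggest the QEMU process itself is stuck (for example on I/O) rather than a problem in vzdump.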

Please help.

I am available to give any missing details if needed.

Thank you in advance!

Cris