Hi, I wanted to ask for comments and thoughts. I was trying to set up a box for a client today. It was running VMware until today, and we wanted to turn it into a Proxmox proof-of-concept test box at their site. The trouble seems to come mostly from its PERC H200i RAID card, which appears to present itself as a real hardware RAID controller. However, when I run the Proxmox installer, it cannot detect the card. Vanilla Debian 11 behaves the same way. I'm guessing Linux support for this card is just not great. I foolishly assumed it was a slightly nicer and newer thing than a PERC 6, but clearly that is not the case.

I'm just wondering whether anyone has managed to get a sane setup on one of these RAID cards.

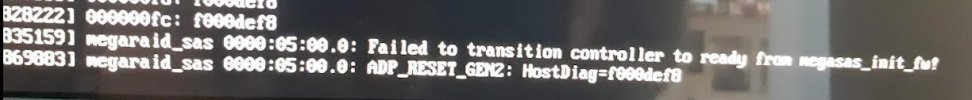

I tried both a RAID1 mirror and plain "2 disks, no RAID (JBOD)", and in neither case was anything visible to Linux. The controller complained briefly in the dmesg log, but the disks never showed up.
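
For anyone who wants to reproduce the "nothing visible" check, here is a minimal Python sketch that lists the block devices the kernel exposes under `/sys/block`. The helper name `list_disks` and the list of skipped virtual-device prefixes are my own assumptions, not anything taken from the Proxmox or Debian installer. An empty result would be consistent with what I saw on this box.

```python
import os
from typing import List, Tuple

# Assumed list of device-name prefixes that are virtual, not physical disks.
VIRTUAL_PREFIXES = ("loop", "ram", "zram", "dm-", "md", "sr")


def list_disks(sys_block: str = "/sys/block") -> List[Tuple[str, int]]:
    """Return (name, size_in_bytes) for each non-virtual block device in sysfs."""
    if not os.path.isdir(sys_block):
        return []  # not a Linux system, or sysfs not mounted
    disks = []
    for name in sorted(os.listdir(sys_block)):
        if name.startswith(VIRTUAL_PREFIXES):
            continue
        size_path = os.path.join(sys_block, name, "size")
        try:
            with open(size_path) as f:
                sectors = int(f.read().strip())
        except (OSError, ValueError):
            continue  # skip entries without a readable size attribute
        # sysfs reports size in 512-byte units, whatever the disk's block size.
        disks.append((name, sectors * 512))
    return disks


if __name__ == "__main__":
    found = list_disks()
    if not found:
        print("No physical block devices visible to the kernel")
    for name, size in found:
        print(f"{name}: {size / 1e9:.1f} GB")
```

If the controller's driver had attached the disks, they would normally appear here as `sda`, `sdb` and so on.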

Some Google digging suggests that some people flash third-party firmware onto the card, specifically LSI-based "IT mode" firmware, to turn it into a plain JBOD disk controller. I'm guessing that might be possible, but it seems slightly insane. I would then presumably do a vanilla Debian install with Linux software RAID and put Proxmox on top of that. I'm comfortable with that configuration, as long as the disks are reliably visible and performance is not absolute garbage.

Otherwise, the secondary bit of fun is that the onboard Broadcom quad-port gigabit NIC appears to use a chipset that the vanilla install does not support, so I need to fuss about with some extra modules at install time to get the NIC(s) working. That's annoying, but not a showstopper. I'm certainly more concerned about the RAID card than the NIC chipset.

Any comments are greatly appreciated.

Thank you!

Tim