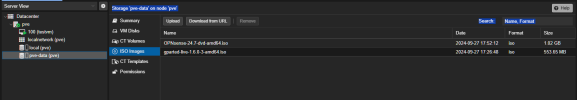

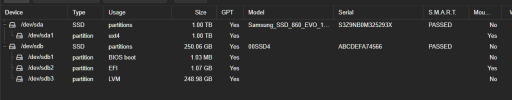

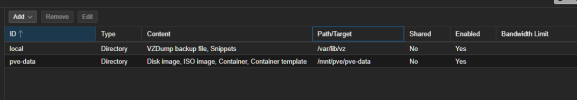

Today, we faced an issue where the OS disk got full. Even though we were uploading files to a Proxmox Storage outside the OS partition (a CEPH-FS Storage), we found that a temporary file was generated in the /var/tmp directory. At first, this was only an assumption based on the procedure used. Therefore, I replicated the behavior and, sadly, succeeded in reproducing the undesired situation. To provoke an error, I deleted the hidden file, which caused the following error message and proves the theory:

Error 500: temporary file '/var/tmp/pveupload-edac042ecf93413e26ad85a04506305a' does not exist
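
For anyone who wants to check whether the same thing is happening on their node, here is a minimal sketch that lists leftover upload temp files and the free space on the partition holding them. The `pveupload-` prefix and the `/var/tmp` location are taken from the error message above; the function name `find_upload_temp_files` is just my own helper, not part of any Proxmox tool.

```python
import os
import shutil


def find_upload_temp_files(tmp_dir="/var/tmp", prefix="pveupload-"):
    """Return (path, size_in_bytes) for each matching temp file, largest first.

    The directory and prefix defaults are assumptions based on the
    error message in this post.
    """
    if not os.path.isdir(tmp_dir):
        return []
    matches = []
    with os.scandir(tmp_dir) as entries:
        for entry in entries:
            # Only regular files whose name starts with the upload prefix.
            if entry.name.startswith(prefix) and entry.is_file(follow_symlinks=False):
                matches.append((entry.path, entry.stat(follow_symlinks=False).st_size))
    return sorted(matches, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    tmp_dir = "/var/tmp"
    for path, size in find_upload_temp_files(tmp_dir):
        print(f"{size / 1024**2:10.1f} MiB  {path}")
    if os.path.isdir(tmp_dir):
        # Free space on the filesystem that contains tmp_dir.
        usage = shutil.disk_usage(tmp_dir)
        print(f"free on {tmp_dir}: {usage.free / 1024**3:.1f} GiB "
              f"of {usage.total / 1024**3:.1f} GiB")
```

Running it while an upload is in progress should show whether a temp file is growing on the OS partition, assuming the upload behaves as described here.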

I strongly believe that "granting access" to a section that a user should not have access to, simply for the purpose of uploading a file, is not appropriate behavior. It is vital that we ensure the security of our system.

I'm counting on your help to keep our system running smoothly and without any issues.

Thanks

Code:

Package Versions:

proxmox-ve: 7.4-1 (running kernel: 5.15.131-2-pve)

pve-manager: 7.4-17 (running version: 7.4-17/513c62be)

pve-kernel-5.15: 7.4-9

pve-kernel-5.15.131-2-pve: 5.15.131-3

pve-kernel-5.15.126-1-pve: 5.15.126-1

pve-kernel-5.15.39-3-pve: 5.15.39-3

pve-kernel-5.15.35-1-pve: 5.15.35-3

pve-kernel-5.13.19-2-pve: 5.13.19-4

ceph: 16.2.13-pve1

ceph-fuse: 16.2.13-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: not correctly installed

ifupdown2: 3.1.0-1+pmx4

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libproxmox-rs-perl: 0.2.1

libpve-access-control: 7.4.1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.4-2

libpve-guest-common-perl: 4.2-4

libpve-http-server-perl: 4.2-3

libpve-rs-perl: 0.7.7

libpve-storage-perl: 7.4-3

libqb0: 1.0.5-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

proxmox-backup-client: 2.4.4-1

proxmox-backup-file-restore: 2.4.4-1

proxmox-kernel-helper: 7.4-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.2

proxmox-widget-toolkit: 3.7.3

pve-cluster: 7.3-3

pve-container: 4.4-6

pve-docs: 7.4-2

pve-edk2-firmware: 3.20230228-4~bpo11+1

pve-firewall: 4.3-5

pve-firmware: 3.6-6

pve-ha-manager: 3.6.1

pve-i18n: 2.12-1

pve-qemu-kvm: 7.2.0-8

pve-xtermjs: 4.16.0-2

qemu-server: 7.4-4

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.14-pve1