Hi All,

I'm just wondering if I am following best practice here with regard to my uplinks from Proxmox to an LACP trunk on my switch?

Currently the ports on the switch are aggregated (LACP), and the trunk has no default VLAN, only tagged networks.

On Proxmox I have created a Linux bond (LACP) with my 2 NICs as slaves.
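
One way to confirm that the bond really negotiated LACP is to look at the kernel's bonding status file. This is only a minimal sketch, assuming the bond is named bond0 as in this post; `bond_is_lacp` is a hypothetical helper name, and it only inspects whatever text it is given on stdin:

```shell
# Hypothetical helper: succeeds if the bonding status text on stdin
# reports 802.3ad (LACP) as the bonding mode.
bond_is_lacp() {
    grep -q '^Bonding Mode: IEEE 802.3ad'
}

# Example use on the host (not run automatically here):
#   bond_is_lacp < /proc/net/bonding/bond0 && echo "bond0 is in LACP mode"
```

The same file also lists each slave interface with its MII status, so it should show whether both NICs joined the aggregate.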

I have created a Linux VLAN for the management network, bond0.10.

I have created another Linux VLAN for the cluster network, bond0.5.

All above works great.

Now for VM and container traffic I have created a VLAN-aware bridge with bond0 as its slave, and set the VLAN IDs to 6-9 11-99 so that the Proxmox management and cluster networks are not available on the bridge.
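
To sanity-check that the ranges 6-9 11-99 really leave out VLAN 5 (cluster) and VLAN 10 (management), the membership test can be sketched in plain shell. `vid_in_ranges` is a hypothetical helper written for illustration, not part of Proxmox or iproute2:

```shell
# Hypothetical helper: succeeds if VLAN ID $1 falls inside any of the
# remaining arguments, each either a single ID ("7") or a range ("6-9").
vid_in_ranges() {
    vid=$1
    shift
    for r in "$@"; do
        lo=${r%-*}   # part before the dash (whole value if no dash)
        hi=${r#*-}   # part after the dash (whole value if no dash)
        if [ "$vid" -ge "$lo" ] && [ "$vid" -le "$hi" ]; then
            return 0
        fi
    done
    return 1
}

# Report which of the VLANs mentioned in this post the bridge would carry.
for v in 5 6 10 11; do
    if vid_in_ranges "$v" 6-9 11-99; then
        echo "VLAN $v: allowed on bridge"
    else
        echo "VLAN $v: not on bridge"
    fi
done
```

On the live host, `bridge vlan show` lists the VLANs actually programmed on each bridge port, which is the more authoritative check.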

Something I did notice is that after a reboot the default route via bond0.10 is missing from the routing table, and I have to SSH in and add it manually: ip route add default via 10.0.10.254 dev bond0.10
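
The manual workaround can be wrapped so that it only adds the route when it is actually missing. This is a rough sketch, not a fix for the underlying cause; the gateway and interface come from this post, while `has_default_route` and `ensure_default_route` are hypothetical helper names:

```shell
GATEWAY=10.0.10.254   # gateway from this post
IFACE=bond0.10        # management VLAN interface from this post

# Hypothetical helper: succeeds if the routing table text on stdin
# contains a default route.
has_default_route() {
    grep -q '^default '
}

# Hypothetical helper: add the default route only when it is missing.
# Needs root; it is defined here but not called automatically.
ensure_default_route() {
    if ip route show | has_default_route; then
        echo "default route already present"
    else
        ip route add default via "$GATEWAY" dev "$IFACE"
    fi
}
```

Running `ensure_default_route` as root after a reboot would show whether the route was absent at that point.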

I feel there is something on the networking side that I may have missed, so any tips are appreciated.

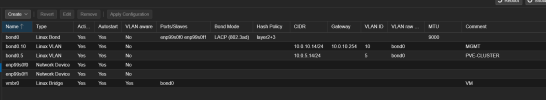

Here is my network config:


Code:

    cat /etc/network/interfaces
    # network interface settings; autogenerated
    # Please do NOT modify this file directly, unless you know what
    # you're doing.
    #
    # If you want to manage parts of the network configuration manually,
    # please utilize the 'source' or 'source-directory' directives to do
    # so.
    # PVE will preserve these directives, but will NOT read its network
    # configuration from sourced files, so do not attempt to move any of
    # the PVE managed interfaces into external files!

    auto lo
    iface lo inet loopback

    auto enp99s0f0
    iface enp99s0f0 inet manual

    auto enp99s0f1
    iface enp99s0f1 inet manual

    auto bond0
    iface bond0 inet manual
            bond-slaves enp99s0f0 enp99s0f1
            bond-miimon 100
            bond-mode 802.3ad
            bond-xmit-hash-policy layer2+3
            mtu 9000

    auto bond0.10
    iface bond0.10 inet static
            address 10.0.10.14/24
            gateway 10.0.10.254
    #MGMT

    auto bond0.5
    iface bond0.5 inet static
            address 10.0.5.14/24
    #PVE-CLUSTER

    auto vmbr0
    iface vmbr0 inet manual
            bridge-ports bond0
            bridge-stp off
            bridge-fd 0
            bridge-vlan-aware yes
            bridge-vids 6-9 11-99
    #VM

    source /etc/network/interfaces.d/*
