Hello everyone,

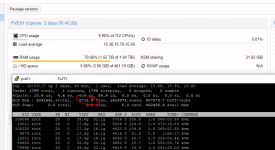

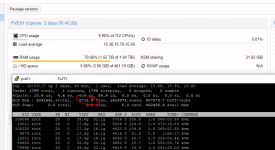

I have the following problem. On a PVE host running version 7.2-7, the GUI appears to display the RAM usage incorrectly: it shows 1.6 TB of RAM in use, but only about 5 GB are actually free.

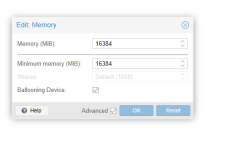

The 1.6 TB figure would match the total RAM allocated to the virtual machines. Ballooning is enabled. These are the VM counts and their RAM allocations:

3 VMs * 4 GB = 12 GB

11 VMs * 8 GB = 88 GB

35 VMs * 16 GB = 560 GB

24 VMs * 24 GB = 576 GB

12 VMs * 32 GB = 384 GB

--------------------------------------

Total: 1620 GB
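To double-check the sum above, here is a minimal Python sketch. The variable names are my own, and the pairs are simply copied from the list.

```python
# (number of VMs, RAM per VM in GB), copied from the list above
allocations = [(3, 4), (11, 8), (35, 16), (24, 24), (12, 32)]

# Total number of VMs and total RAM assigned to them
total_vms = sum(count for count, _ in allocations)
total_gb = sum(count * gb for count, gb in allocations)

print(f"{total_vms} VMs, {total_gb} GB allocated")  # 85 VMs, 1620 GB allocated
```

So the 1620 GB is spread over 85 VMs, which is roughly the 1.6 TB the GUI reports as used.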

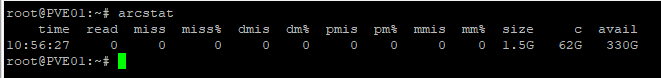

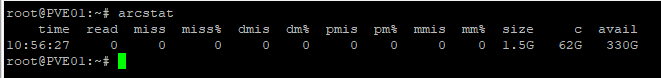

ZFS is running, but it uses almost no RAM.

The problem is that some VMs keep shutting down automatically because too little RAM is available.


root@PVE01:~# pveversion --verbose

proxmox-ve: 7.2-1 (running kernel: 5.15.39-1-pve)

pve-manager: 7.2-7 (running version: 7.2-7/d0dd0e85)

pve-kernel-5.15: 7.2-6

pve-kernel-helper: 7.2-6

pve-kernel-5.15.39-1-pve: 5.15.39-1

pve-kernel-5.15.35-2-pve: 5.15.35-5

pve-kernel-5.4.174-2-pve: 5.4.174-2

ceph-fuse: 15.2.14-pve1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve1

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.2-4

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.2-2

libpve-guest-common-perl: 4.1-2

libpve-http-server-perl: 4.1-3

libpve-storage-perl: 7.2-7

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.2.5-1

proxmox-backup-file-restore: 2.2.5-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.5.1

pve-cluster: 7.2-2

pve-container: 4.2-2

pve-docs: 7.2-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.5-1

pve-ha-manager: 3.4.0

pve-i18n: 2.7-2

pve-qemu-kvm: 6.2.0-11

pve-xtermjs: 4.16.0-1

qemu-server: 7.2-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.7.1~bpo11+1

vncterm: 1.7-1

zfsutils-linux: 2.1.4-pve1

top - 11:32:35 up 2 days, 1:19, 1 user, load average: 13.09, 13.88, 13.66

Tasks: 1793 total, 2 running, 1791 sleeping, 0 stopped, 0 zombie

%Cpu(s): 6.8 us, 3.3 sy, 0.0 ni, 89.9 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

MiB Mem : 2031661.+total, 5417.0 free, 1618235.+used, 408009.1 buff/cache

MiB Swap: 0.0 total, 0.0 free, 0.0 used. 403096.7 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

14011 root 20 0 17.6g 16.1g 5020 S 150.2 0.8 630:14.46 kvm

8678 root 20 0 17.3g 16.1g 4632 S 43.6 0.8 1182:53 kvm

12501 root 20 0 17.3g 16.1g 4556 S 33.7 0.8 340:24.76 kvm

146142 root 20 0 33.6g 32.1g 4580 S 14.9 1.6 615:43.49 kvm

49528 root 20 0 25.7g 24.1g 5000 S 14.5 1.2 469:09.66 kvm

75427 root 20 0 33.6g 32.1g 4640 S 14.5 1.6 575:12.51 kvm

113566 root 20 0 33.6g 32.1g 4796 S 14.5 1.6 550:06.77 kvm

155931 root 20 0 33.7g 32.1g 4680 S 14.5 1.6 538:04.31 kvm

159143 root 20 0 33.7g 32.1g 4684 S 14.5 1.6 521:38.96 kvm

84864 root 20 0 33.6g 32.1g 4668 S 14.2 1.6 560:48.03 kvm

109066 root 20 0 33.6g 32.1g 4764 S 14.2 1.6 572:59.21 kvm

118325 root 20 0 33.6g 32.1g 4732 S 14.2 1.6 575:46.44 kvm

79996 root 20 0 33.6g 32.1g 4640 S 13.9 1.6 560:18.00 kvm

120438 root 20 0 33.6g 32.1g 4740 S 13.9 1.6 563:16.42 kvm

152378 root 20 0 33.6g 32.1g 4720 S 13.9 1.6 559:35.08 kvm

47372 root 20 0 25.4g 24.1g 4760 S 13.5 1.2 462:59.74 kvm

58204 root 20 0 25.5g 24.1g 4628 S 12.9 1.2 465:12.88 kvm

45802 root 20 0 25.7g 24.1g 4776 S 12.5 1.2 463:46.83 kvm

2809413 root 20 0 33.5g 32.0g 7248 S 12.5 1.6 57:08.78 kvm

42488 root 20 0 25.4g 24.1g 4700 S 12.2 1.2 475:00.12 kvm

44665 root 20 0 25.4g 24.1g 4760 S 12.2 1.2 464:34.71 kvm

65308 root 20 0 25.4g 24.1g 4692 S 12.2 1.2 465:32.47 kvm

48392 root 20 0 25.5g 24.1g 4700 S 11.9 1.2 483:05.40 kvm

51795 root 20 0 25.4g 24.1g 4812 S 11.9 1.2 482:51.92 kvm

64123 root 20 0 25.6g 24.1g 4776 S 11.9 1.2 473:53.08 kvm

67611 root 20 0 25.5g 24.1g 4668 S 11.9 1.2 462:32.43 kvm

54395 root 20 0 25.5g 24.1g 4736 S 11.6 1.2 475:41.91 kvm

59306 root 20 0 25.4g 24.1g 4704 S 11.6 1.2 456:15.25 kvm

259429 root 20 0 25.5g 24.1g 4696 S 11.6 1.2 433:12.09 kvm

56967 root 20 0 25.5g 24.1g 4644 S 11.2 1.2 465:30.25 kvm

60550 root 20 0 25.6g 24.1g 4948 S 11.2 1.2 459:07.97 kvm

307452 root 20 0 25.5g 24.1g 4852 S 11.2 1.2 337:22.31 kvm

346354 root 20 0 25.5g 24.1g 4796 S 10.9 1.2 324:30.58 kvm

7481 root 20 0 9470220 8.0g 4656 S 10.6 0.4 440:53.90 kvm

61678 root 20 0 25.6g 24.1g 4652 S 10.6 1.2 471:31.75 kvm

62946 root 20 0 25.4g 24.1g 4664 S 10.6 1.2 457:44.52 kvm

9676 root 20 0 17.4g 16.1g 4668 S 10.2 0.8 511:16.63 kvm

96810 root 20 0 25.6g 24.1g 4688 S 10.2 1.2 460:33.58 kvm

3158798 root 20 0 25.4g 24.0g 7732 S 10.2 1.2 7:42.20 kvm

3200148 root 20 0 229000 70352 14828 R 10.2 0.0 0:00.31 qm

357781 root 20 0 25.4g 24.1g 4796 S 9.9 1.2 325:34.65 kvm

24781 root 20 0 17.4g 16.1g 4708 S 9.6 0.8 397:06.92 kvm

29419 root 20 0 17.3g 16.1g 4648 S 9.6 0.8 400:59.80 kvm

258640 root 20 0 25.4g 24.1g 4760 S 9.6 1.2 334:55.11 kvm

26676 root 20 0 17.3g 16.1g 4712 S 9.2 0.8 392:40.30 kvm

31208 root 20 0 17.3g 16.1g 4632 S 9.2 0.8 401:32.97 kvm

33919 root 20 0 17.3g 16.1g 4736 S 9.2 0.8 379:22.02 kvm

18022 root 20 0 17.5g 16.1g 5348 S 8.9 0.8 360:01.16 kvm

19215 root 20 0 17.5g 16.1g 4708 S 8.9 0.8 357:42.63 kvm

34740 root 20 0 17.3g 16.1g 4636 S 8.9 0.8 384:19.59 kvm

39248 root 20 0 17.5g 16.1g 5064 S 8.9 0.8 382:24.34 kvm

15731 root 20 0 17.3g 16.1g 4644 S 8.6 0.8 317:42.68 kvm

22272 root 20 0 17.3g 16.1g 4804 S 8.6 0.8 361:04.52 kvm

27674 root 20 0 17.3g 16.1g 4600 S 8.6 0.8 372:21.58 kvm

33057 root 20 0 17.2g 16.1g 4712 S 8.6 0.8 386:48.01 kvm

36488 root 20 0 17.7g 16.1g 4776 S 8.6 0.8 347:10.25 kvm

40257 root 20 0 17.5g 16.1g 4732 S 8.6 0.8 383:11.69 kvm

16630 root 20 0 17.4g 16.1g 4748 S 8.3 0.8 367:25.77 kvm

21220 root 20 0 17.3g 16.1g 4652 S 8.3 0.8 369:48.86 kvm

38306 root 20 0 17.4g 16.1g 4612 S 8.3 0.8 368:24.47 kvm

41417 root 20 0 17.9g 16.1g 4744 S 8.3 0.8 372:44.72 kvm

20182 root 20 0 17.4g 16.1g 4672 S 7.9 0.8 355:27.87 kvm

166011 root 20 0 17.3g 16.1g 4692 S 7.9 0.8 268:54.04 kvm

37395 root 20 0 17.4g 16.1g 4652 S 7.6 0.8 375:38.41 kvm

3144237 root 20 0 17.3g 16.0g 7632 S 7.6 0.8 6:20.45 kvm

14854 root 20 0 17.4g 16.1g 4672 S 7.3 0.8 290:48.51 kvm

108230 root 20 0 17.4g 16.1g 4636 S 7.3 0.8 273:26.71 kvm

1421853 root 20 0 17.2g 16.1g 5940 S 7.3 0.8 157:14.12 kvm

1763679 root 20 0 17.2g 16.1g 6152 S 7.3 0.8 131:35.48 kvm

1747213 root 20 0 17.3g 16.1g 6084 S 6.9 0.8 134:58.40 kvm

6861 root 20 0 0 0 0 S 5.3 0.0 139:10.77 nv_queue

7189 root 20 0 0 0 0 S 5.0 0.0 144:34.94 nv_queue
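To make the numbers in the top output easier to compare, here is a rough Python sketch. The helper names `mib_to_gib` and `res_to_gib` are my own and not part of any tool, and the values are copied by hand from the output above. It assumes that a RES field without a suffix is in KiB, which is the default unit in top.

```python
# Values copied from the "MiB Mem" line of the top output above.
# top printed the total as "2031661.+", so it is only approximate here.
total_mib = 2031661.0
free_mib = 5417.0
buff_cache_mib = 408009.1
avail_mib = 403096.7

# The "used" value that top reports is consistent with
# total - free - buff/cache for these numbers.
used_mib = total_mib - free_mib - buff_cache_mib


def mib_to_gib(mib: float) -> float:
    """Convert MiB to GiB."""
    return mib / 1024


def res_to_gib(field: str) -> float:
    """Convert a top RES field such as "16.1g" or "70352" to GiB.

    Assumption: a bare number is KiB; m/g/t suffixes mean MiB/GiB/TiB.
    """
    factors = {"m": 1 / 1024, "g": 1.0, "t": 1024.0}
    suffix = field[-1].lower()
    if suffix in factors:
        return float(field[:-1]) * factors[suffix]
    return float(field) / (1024 * 1024)


# RES fields of three kvm processes from the listing (PIDs 14011, 146142, 7481)
sample_res = ["16.1g", "32.1g", "8.0g"]
sample_total_gib = sum(res_to_gib(field) for field in sample_res)

print(f"used:        {mib_to_gib(used_mib):8.1f} GiB")
print(f"free:        {mib_to_gib(free_mib):8.1f} GiB")
print(f"buff/cache:  {mib_to_gib(buff_cache_mib):8.1f} GiB")
print(f"avail Mem:   {mib_to_gib(avail_mib):8.1f} GiB")
print(f"sample RES:  {sample_total_gib:8.1f} GiB")
```

As far as I understand, "avail Mem" is the kernel's estimate of how much memory could be given to new workloads without swapping, so it is a different figure from "free". The RES values of the kvm processes are each very close to the RAM assigned to the corresponding VM.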

Does anyone have an idea what this could be?

Thank you and best regards