Hi,

I'm trying to find out why one of my PVE hosts is crashing randomly.

My configuration is a cluster of 3 nodes + Ceph.

After the dmesg messages shown further below, the server hangs...

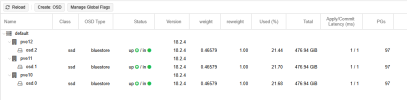

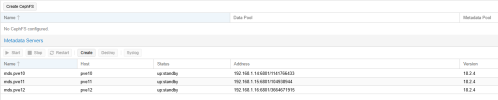

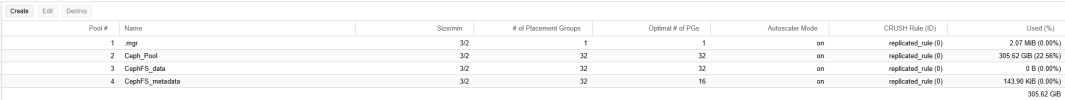

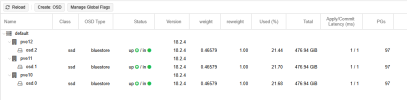

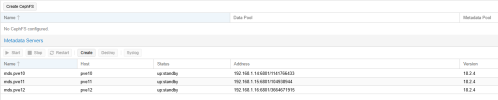

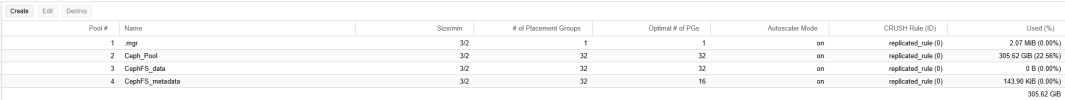

Ceph configuration on this host (pve12):

dmesg part 1:

Code:

Feb 18 10:57:48 pve12 ceph-osd[1136]: 2025-02-18T10:57:48.853+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 10:57:48 pve12 systemd[1]: /lib/systemd/system/ceph-volume@.service:8: Unit uses KillMode=none. This is unsafe, as it disables systemd's process lifecycle management >

Feb 18 10:57:48 pve12 systemd[1]: Mounting mnt-pve-CephFS.mount - /mnt/pve/CephFS...

Feb 18 10:57:48 pve12 kernel: libceph: mon1 (1)192.168.1.15:6789 session established

Feb 18 10:57:48 pve12 mount[388122]: mount error: no mds (Metadata Server) is up. The cluster might be laggy, or you may not be authorized

Feb 18 10:57:48 pve12 kernel: libceph: client394795 fsid dd2f620b-222c-4f2b-af63-77c37ca60b85

Feb 18 10:57:48 pve12 kernel: ceph: No mds server is up or the cluster is laggy

Feb 18 10:57:48 pve12 systemd[1]: mnt-pve-CephFS.mount: Mount process exited, code=exited, status=32/n/a

Feb 18 10:57:48 pve12 systemd[1]: mnt-pve-CephFS.mount: Failed with result 'exit-code'.

Feb 18 10:57:48 pve12 systemd[1]: Failed to mount mnt-pve-CephFS.mount - /mnt/pve/CephFS.

Feb 18 10:57:48 pve12 pvestatd[1102]: mount error: Job failed. See "journalctl -xe" for details.

Feb 18 10:57:49 pve12 ceph-osd[1136]: 2025-02-18T10:57:49.806+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 10:57:50 pve12 ceph-osd[1136]: 2025-02-18T10:57:50.849+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 10:57:51 pve12 ceph-osd[1136]: 2025-02-18T10:57:51.829+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 10:57:52 pve12 ceph-osd[1136]: 2025-02-18T10:57:52.879+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 10:57:53 pve12 ceph-osd[1136]: 2025-02-18T10:57:53.898+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 10:57:54 pve12 ceph-osd[1136]: 2025-02-18T10:57:54.856+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 10:57:55 pve12 kernel: INFO: task kmmpd-rbd0:2016 blocked for more than 122 seconds.

Feb 18 10:57:55 pve12 kernel: Tainted: P O 6.8.12-8-pve #1

Feb 18 10:57:55 pve12 kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Feb 18 10:57:55 pve12 kernel: task:kmmpd-rbd0 state:D stack:0 pid:2016 tgid:2016 ppid:2 flags:0x00004000

Feb 18 10:57:55 pve12 kernel: Call Trace:

Feb 18 10:57:55 pve12 kernel: <TASK>

Feb 18 10:57:55 pve12 kernel: __schedule+0x42b/0x1500

Feb 18 10:57:55 pve12 kernel: schedule+0x33/0x110

Feb 18 10:57:55 pve12 kernel: io_schedule+0x46/0x80

Feb 18 10:57:55 pve12 kernel: bit_wait_io+0x11/0x90

Feb 18 10:57:55 pve12 kernel: __wait_on_bit+0x4a/0x120

Feb 18 10:57:55 pve12 kernel: ? __pfx_bit_wait_io+0x10/0x10

Feb 18 10:57:55 pve12 kernel: out_of_line_wait_on_bit+0x8c/0xb0

Feb 18 10:57:55 pve12 kernel: ? __pfx_wake_bit_function+0x10/0x10

Feb 18 10:57:55 pve12 kernel: __wait_on_buffer+0x30/0x50

Feb 18 10:57:55 pve12 kernel: write_mmp_block_thawed+0xfa/0x120

Feb 18 10:57:55 pve12 kernel: write_mmp_block+0x46/0xd0

Feb 18 10:57:55 pve12 kernel: kmmpd+0x1ab/0x430

Feb 18 10:57:55 pve12 kernel: ? __pfx_kmmpd+0x10/0x10

Feb 18 10:57:55 pve12 kernel: kthread+0xef/0x120

Feb 18 10:57:55 pve12 kernel: ? __pfx_kthread+0x10/0x10

Feb 18 10:57:55 pve12 kernel: ret_from_fork+0x44/0x70

Feb 18 10:57:55 pve12 kernel: ? __pfx_kthread+0x10/0x10

Feb 18 10:57:55 pve12 kernel: ret_from_fork_asm+0x1b/0x30

Feb 18 10:57:55 pve12 kernel: </TASK>

Then many, many lines like these:

Code:

Feb 18 12:24:59 pve12 ceph-osd[1136]: 2025-02-18T12:24:59.529+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 12:25:00 pve12 ceph-osd[1136]: 2025-02-18T12:25:00.500+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 12:25:01 pve12 ceph-osd[1136]: 2025-02-18T12:25:01.477+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 12:25:02 pve12 ceph-osd[1136]: 2025-02-18T12:25:02.494+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 12:25:03 pve12 ceph-osd[1136]: 2025-02-18T12:25:03.519+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 12:25:04 pve12 ceph-osd[1136]: 2025-02-18T12:25:04.470+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 12:25:05 pve12 ceph-osd[1136]: 2025-02-18T12:25:05.436+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:109>

Feb 18 12:25:06 pve12 ceph-osd[1136]: 2025-02-18T12:25:06.427+0100 7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, oldest is osd_op(client.364115.0:10
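Since the slow-ops line above repeats roughly once per second for a long time, it may be easier to read as a summary. Below is a minimal Python sketch, assuming the journal has been saved as plain text in the same format as the lines above. The function name `summarize_slow_ops` and the regular expression are my own illustration, not part of any Ceph or Proxmox tooling.

```python
import re

# Matches journal lines shaped like the ones pasted above, e.g.
# "Feb 18 10:57:48 pve12 ceph-osd[1136]: ... osd.2 1118 get_health_metrics
#  reporting 256 slow ops, oldest is ..."
# The pattern is an assumption based only on those sample lines.
SLOW_OPS = re.compile(
    r"^(?P<stamp>\w{3}\s+\d+\s+\d{2}:\d{2}:\d{2})\s+\S+\s+ceph-osd\[\d+\]:"
    r".*?\b(?P<osd>osd\.\d+)\b.*?reporting (?P<count>\d+) slow ops"
)


def summarize_slow_ops(lines):
    """Return {osd: (first_stamp, last_stamp, report_count, peak_slow_ops)}."""
    summary = {}
    for line in lines:
        match = SLOW_OPS.search(line.strip())
        if not match:
            continue  # not a slow-ops report (kernel trace, systemd, ...)
        osd = match["osd"]
        stamp = match["stamp"]
        count = int(match["count"])
        if osd in summary:
            first, _, reports, peak = summary[osd]
            summary[osd] = (first, stamp, reports + 1, max(peak, count))
        else:
            summary[osd] = (stamp, stamp, 1, count)
    return summary


if __name__ == "__main__":
    # Two of the lines from this post, plus one unrelated kernel line.
    sample = [
        "Feb 18 10:57:48 pve12 ceph-osd[1136]: 2025-02-18T10:57:48.853+0100 "
        "7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, "
        "oldest is osd_op(client.364115.0:109>",
        "Feb 18 10:57:55 pve12 kernel: INFO: task kmmpd-rbd0:2016 blocked "
        "for more than 122 seconds.",
        "Feb 18 12:25:05 pve12 ceph-osd[1136]: 2025-02-18T12:25:05.436+0100 "
        "7a167f8006c0 -1 osd.2 1118 get_health_metrics reporting 256 slow ops, "
        "oldest is osd_op(client.364115.0:109>",
    ]
    for osd, (first, last, reports, peak) in summarize_slow_ops(sample).items():
        print(f"{osd}: {reports} reports from {first} to {last}, peak {peak} slow ops")
```

This only condenses what is already in the log (which OSD reports slow ops, over what time span, and the highest count). It does not say anything about the cause.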