Hi everyone,

I'm planning to replace my old file server with a Proxmox environment. My current home server runs Ubuntu 20.04 with a 1 TB HDD, 4 x 4 TB RAID 5 (mdraid) and 4 x 2 TB RAID 10 (mdraid). On top of that, I have a small QNAP TS-212P with 2 x 8 TB RAID 0 that is used for backing up the file server. The new server will be a Dell T630 with 4 x 1 TB and 4 x 6 TB disks. Note: All disks mentioned are WD Red 3.5".

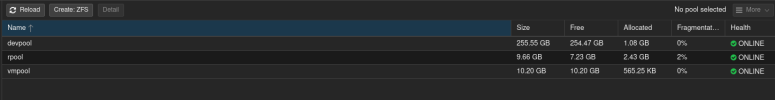

To familiarize myself with Proxmox, I created a VM inside VMware Workstation to play with it. I set it up to match the planned hardware: 4 x 10 GB and 4 x 60 GB disks. I partitioned the disks as follows:

- 2 x 10 GB ZFS mirror (Proxmox itself)
- 2 x 10 GB ZFS mirror (VM config, ISO images, etc.)
- 4 x 60 GB RAIDZ1 (VM disks)

The way I understand ZFS, RAIDZ1 is equivalent to RAID 5 and RAIDZ2 is equivalent to RAID 6, but better. One of the problems I have is that my current RAID 5 setup reports a total size of 11(ish) TB (which makes sense, since one disk's worth of space is used for parity), but RAIDZ1 reports the total size as 255 GB. I was expecting something around 180 GB ((4 - 1) x 60 GB), since the equivalent of one disk is used to store parity data (or whatever ZFS calls it). Is there something I'm missing here?
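The arithmetic behind both numbers can be sketched in Python. This is only an approximation under stated assumptions: it treats parity as costing exactly one disk's worth of space, ignores ZFS metadata, RAIDZ padding and reserved space, and assumes the VMware "60 GB" disks are really 60 GiB. It shows that (4 - 1) x 4 TB = 12 TB is about 10.9 TiB, which would match the "11(ish) TB" figure, and that 4 x 60 GiB is about 257.7 GB raw, close to the 255 GB reported. If Proxmox is showing the pool's raw size (as `zpool list` does for RAIDZ, parity included) rather than the usable space (as `zfs list` reports), that would explain the gap; this is a hypothesis, not something confirmed above.

```python
# Rough capacity arithmetic for parity RAID (RAID 5 / RAIDZ1).
# Simplifying assumptions: parity costs exactly one disk per parity level;
# ZFS metadata, RAIDZ padding and reserved ("slop") space are ignored.

TB = 10**12   # decimal terabyte, as printed on drive labels
GIB = 2**30   # binary gibibyte
TIB = 2**40   # binary tebibyte


def raw_bytes(disks: int, size_bytes: int) -> int:
    """Total raw capacity of all member disks, parity included."""
    return disks * size_bytes


def usable_bytes(disks: int, size_bytes: int, parity: int) -> int:
    """Approximate data capacity: one disk's worth is lost per parity level."""
    if parity >= disks:
        raise ValueError("need more disks than parity devices")
    return (disks - parity) * size_bytes


if __name__ == "__main__":
    # Current mdraid RAID 5: 4 x 4 TB (decimal)
    r5 = usable_bytes(4, 4 * TB, parity=1)
    print(f"RAID 5 4x4TB usable:   {r5 / TB:.1f} TB = {r5 / TIB:.2f} TiB")

    # VM test pool: 4 x "60 GB" virtual disks, assumed here to be 60 GiB each
    raw = raw_bytes(4, 60 * GIB)
    use = usable_bytes(4, 60 * GIB, parity=1)
    print(f"RAIDZ1 4x60GiB raw:    {raw / 10**9:.1f} GB ({raw / GIB:.0f} GiB)")
    print(f"RAIDZ1 4x60GiB usable: {use / 10**9:.1f} GB ({use / GIB:.0f} GiB)")
```

Comparing these figures against the `zpool list` and `zfs list` output on the test VM would be one way to check whether the 255 GB is the raw or the usable number.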

Eventually, my old server's disks will be moved to the new Proxmox server. I read somewhere (I can't remember where exactly) that expanding a RAIDZ can be a pain, if it's possible at all. I'm thinking of creating two extra RAIDZ1 pools for those disks. Any other suggestions you might have are welcome.
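For the old disks, a rough comparison of the two planned RAIDZ1 pools against striped two-way mirrors (the ZFS counterpart of the current RAID 10) may help weigh the options. This is a sketch in decimal TB that ignores ZFS overhead; the `Layout` class and its `kind` values are hypothetical names made up for illustration.

```python
# Compare approximate usable capacity of candidate layouts for the old disks.
# Decimal TB throughout; ZFS overhead (metadata, padding, slop space) is ignored.
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    name: str
    disks: int
    disk_tb: float
    kind: str  # hypothetical labels: "raidz1" or "mirror2" (striped two-way mirrors)

    def usable_tb(self) -> float:
        if self.kind == "raidz1":
            # one disk's worth of parity per RAIDZ1 vdev
            return (self.disks - 1) * self.disk_tb
        if self.kind == "mirror2":
            if self.disks % 2:
                raise ValueError("two-way mirrors need an even disk count")
            # half of the disks hold copies of the other half
            return self.disks / 2 * self.disk_tb
        raise ValueError(f"unknown layout kind: {self.kind}")

    def failures_tolerated(self) -> str:
        if self.kind == "raidz1":
            return "any 1 disk"
        return "1 disk per mirror pair"


CANDIDATES = [
    Layout("4x4TB RAIDZ1", 4, 4.0, "raidz1"),
    Layout("4x4TB striped mirrors", 4, 4.0, "mirror2"),
    Layout("4x2TB RAIDZ1", 4, 2.0, "raidz1"),
    Layout("4x2TB striped mirrors (like current RAID 10)", 4, 2.0, "mirror2"),
]

if __name__ == "__main__":
    for layout in CANDIDATES:
        print(f"{layout.name:45} ~{layout.usable_tb():4.1f} TB usable, "
              f"survives {layout.failures_tolerated()}")
```

One design consideration: a pool of mirrors can be grown two disks at a time by adding another mirror vdev, while growing a RAIDZ1 pool that way traditionally means adding a whole new RAIDZ vdev. RAIDZ1 trades that flexibility for more usable space.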

Thanks,

P.S.: This is a home server environment. Top performance is nice to have, not a must. Most of the data is not critical, with a few exceptions (<500 GB) that are taken care of by an offsite daily backup.